Telematics in automobile insurance : Are you giving too much for little in return?

By Aditya Mengani | July 9, 2021

Telematics based programs like “Pay as you drive” have been dramatically transforming the automobile insurance industry over the past few years. Many traditional insurance providers like AllState, Progressive, Geico etc. and newer startups like Metromile, Root and even car manufacturers like Tesla have been introducing programs or planning new ones centered around “Pay as you drive” type of model where a user consents to providing his driving data using telematics devices.

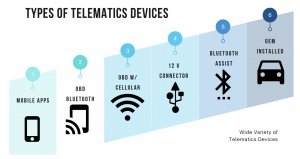

The data collected is used to determine the risk of the customer and provide discounts or other offers tailored in a personalized way to the customer based on his driving habits. The only caveat being the user subscribes to a continuous feedback of data points from the vehicle using telematics that can be embedded in the car using built-in sensors or plug in devices using GPS or mobile phones. This could open up a can of worms, with regards to issues of privacy and ethics surrounding the collected data. Many consumers were skeptical of enrolling to these programs, but recently due to the pandemic, a lot has been changing with the perception of users as many more consumers are enrolling hoping to reduce their insurance costs due to lesser travelling habits. This trend is expected to continue in future with more and more consumers opting in for these services.

There is a lack of transparency as to what gets collected by these telamatics as many insurance providers provide a vague definition of various metrics and what constitutes driving behaviour. In traditional insurance multiple factors affect the risk and in turn the premium paid by the customer like location, age, gender, marital status, years of driving experience, driving and claims history, vehicle information etc. With the “Pay as you drive”, insurance providers claim that, additionally, they track real time metrics related to driving habits which include speed, acceleration, braking, miles driven, time of the day etc and what gets collected varies by each insurance provider. For example, In 2015 AllState obtained a patent that can use sensors and cameras to detect potential sources of driver distraction within a vehicle and also has potential to evaluate heart rate, blood pressure, and electrocardiogram signals that are recorded from steering wheel sensors. Concerns similar to these have made consumers skeptical about enrolling in these programs.

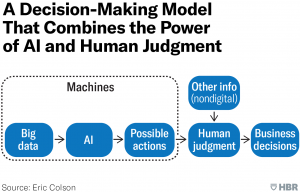

Another challenging aspect is algorithmic transparency. With wide spread consumption of telematics data, insurance providers and regulators need to define a clear set of factors collected for actuarial justification of rating premiums and underwriting the policies. Most of the algorithms are proprietary and insurance providers do not release data as to how their algorithms use the factors to derive a score. From a fairness and transparency perspective, there is very less choice and information available for consumers to decide before opting in to these programs.

Widespread usage of artificial intelligence based telematics in predicting risks can pose predictive privacy harms to the consumer and without proper regulations insurers can collect a variety of data that does not have any causative effect on the factors used for predicting risk scores. Currently such regulations are not enforced across many countries. This can also lead to discriminatory practices and create unintended biases during the data collection and exploration processes.

Who gets to build these telematics services is another thing to worry about. Most of the telematics services are created by third party vendors like verisk who are non-insurance firms providing software and analytical solutions for these programs. Thus these providers escape the radar of regulators who only scrutinize the insurance providers but do not have similar protocols established over these non-insurance vendors.

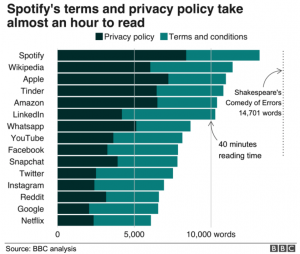

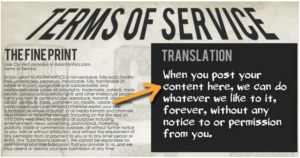

With chances of collecting a myriad amount of data and lack of transparency and regulations, there is a chance that the data can be used for advertising or can be attempted to sell to third parties for monetization purposes. Even though regulations like CCPA enforce companies to provide information regarding what they do with the data, there is a chance that these regulations might change over time in future, and consumers who are already enrolled in programs might not be aware of the changes to the policy terms.

Finally privacy would be another major issue that could pose many dangers to the consumer. Many companies retain the data collected forever or won’t state clearly in their privacy policies what they intend to do with the data collected after a period of time or what is their retention policy for the data. If there are no proper measures taken to protect the data collected, this could lead to privacy breaches.

With all the above risks laid out, for a consumer it all comes down to doing a risk vs return decision. Will a consumer give a lot of his information for little in return is something that varies by an individual’s perception of the processes practiced and his needs with respect to insurance prices. As far as it goes with the regulators and law, they are still evolving and embracing this new arena and still need to do a lot of catching up. All we can do for now is to hope that in future, a comprehensive set of laws and regulations can help this new area of insurance thrive to success and make a conscious choice on our needs.

References:

https://www.forbes.com/advisor/car-insurance/usage-based-insurance/

https://www.insurancethoughtleadership.com/are-you-ready-for-telematics/

https://content.naic.org/cipr_topics/topic_telematicsusagebased_insurance.htm

https://www.chicagotribune.com/business/ct-allstate-car-patent-0827-biz-20150826-story.html.

https://dataethics.eu/insurance-companies-should-balance-personalisation-and-solidarity/

https://consumerfed.org/reports/watch-where-youre-going/

https://www.internetjustsociety.org/challenges-of-gdpr-telematics-insurance