If you’re anything like me, it may be tough to imagine a world without the advances in personalized products that we’ve seen.

I rely on the recommendations these products provide: the personalized shopping experience on Amazon, the relevant material in my Facebook news feed, and the highly pertinent search results from Google. These companies collect information about my product usage, combine it with relevant characteristics they have gathered about me, and run the result through a set of learning algorithms to produce highly relevant output. It’s this personalization that makes these products so “sticky”, typically creating a mutually beneficial relationship between the company and its users.

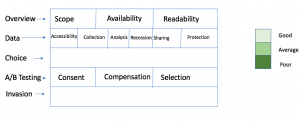

However, certain types of offers and uses of machine learning create serious drawbacks for some consumer groups. This is particularly true of targeted advertising and offers for things like job postings and financial services, which can create characteristic-based reinforcement that often disproportionately impacts disadvantaged groups. Furthermore, misplaced incentives entice companies to overextend their data collection practices and invade privacy. I’ll briefly walk you through the framework that allows this to happen, then show you how certain groups can be harmed. While some solutions have been proposed, I hope you’ll also see why it’s so important to begin addressing these issues now.

Companies seek to maximize profit by maximizing revenue and minimizing costs. This can produce real consumer benefit, either through firms attracting consumers with innovative products or through lower prices as competitors enter new markets. Nonetheless, there can also be negative impacts, which are especially pronounced when companies use learning algorithms for targeted advertising and offers.

In this case, the impact on consumer privacy is treated as a negative externality: its cost is borne by society rather than by the company. Academic work has shown that consumers value privacy enough to assign a monetary value to it when making purchases. Yet more consumer data produces better personalized products and more accurate models, both of which are important for targeted advertising and offers. As a result, companies are pushed to collect as much data as possible, and consumers’ desire to protect their privacy is wholly ignored.
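The incentive problem can be made concrete with a stylized model. This is a sketch under simplifying assumptions, not a result from the work mentioned above: let $d$ be the amount of consumer data a firm collects, $R(d)$ and $C(d)$ its revenue and cost, and $P(d)$ the privacy cost borne by consumers. All three functions are hypothetical; $R(d) - C(d)$ is assumed strictly concave, $P(d)$ strictly increasing and convex, and both optima are assumed to be interior.

$$
d_f = \arg\max_d \big[R(d) - C(d)\big] \quad\Rightarrow\quad R'(d_f) = C'(d_f)
$$

$$
d_s = \arg\max_d \big[R(d) - C(d) - P(d)\big] \quad\Rightarrow\quad R'(d_s) = C'(d_s) + P'(d_s)
$$

Evaluating the slope of the social objective at the firm’s choice gives $R'(d_f) - C'(d_f) - P'(d_f) = -P'(d_f) < 0$. Because the social objective is strictly concave, its maximum must lie to the left of $d_f$, so $d_s < d_f$: a firm that ignores $P(d)$ collects more data than is socially optimal. (In this toy version the social objective counts only firm profit and the privacy cost, leaving out other consumer gains from personalization.)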

Moreover, companies have extra leeway in collecting consumer data because of monopolistic markets and information asymmetries between companies and consumers. A monopolistic market arises when one company dominates, leaving consumers with high switching costs and little choice. Many tech markets (e.g., Search – Google, Social Media – Facebook, Online shopping – Amazon) are dominated by a single company, and while consumers can stop using these products, high-quality alternatives are very limited. Furthermore, consumers may not know what data is collected or how it is used. This creates a veritable “black box”: consumers may be vaguely aware that their privacy is being intruded upon, but without specific examples, they won’t stop using the product.

Perhaps more worrisome are the reinforcement mechanisms created by some targeted advertising and offers. Google AdWords provides highly effective targeted advertising; however, it becomes problematic when these ads pick up on existing stereotypes. In fact, researchers at Carnegie Mellon and the International Computer Science Institute found that AdWords showed ads for high-income jobs more often to male profiles than to otherwise equivalent female ones. Machine learning models are typically trained on existing “training” data and produce an outcome or recommendation based on gathered usage patterns, inferred traits, etc. Although this usually benefits consumers, re-training can create an undesirable reinforcement mechanism when the prior model’s results influence the outcomes that make up the next round of training data. In simplified form, this works as follows:

- The model shows ads for high-income jobs to fewer women

- Fewer women take high-income jobs (the same bias that helped inform the original model)

- When the model is re-trained on this new data, men look like even better candidates for these ads than they did under the prior model
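The loop above can be illustrated with a toy simulation. This is a minimal sketch, not how AdWords actually works: the function names, the 55% starting skew, the 10% response rate, and the `sharpness` exponent (standing in for a click-maximizing system that over-weights whichever group looks better in its data) are all hypothetical assumptions. Both groups are assumed to respond to the ads at the same true rate, so any growing gap comes purely from the feedback loop.

```python
"""Toy simulation of the ad-targeting feedback loop.

All numbers are hypothetical. Both groups respond to high-income job ads at the
SAME true rate; the only asymmetry is a small historical skew in the first
round of training data.
"""


def allocate_impressions(male_share: float, sharpness: float = 2.0) -> float:
    """Fraction of ad impressions the model shows to men.

    Assumption: a click-maximizing system over-weights the group that looks
    better in its training data (sharpness > 1). With sharpness == 1,
    impressions simply mirror the data.
    """
    m = male_share ** sharpness
    f = (1.0 - male_share) ** sharpness
    return m / (m + f)


def simulate(initial_male_share: float = 0.55, rounds: int = 5,
             sharpness: float = 2.0, true_rate: float = 0.10,
             impressions: int = 100_000) -> list[float]:
    """Return the male share of positive training examples after each re-training round."""
    share = initial_male_share
    history = [share]
    for _ in range(rounds):
        male_impr = impressions * allocate_impressions(share, sharpness)
        female_impr = impressions - male_impr
        # Identical true response rate: outcomes differ only because exposure differs.
        male_hires = male_impr * true_rate
        female_hires = female_impr * true_rate
        # The next model is trained on these outcomes, so it inherits the skew.
        share = male_hires / (male_hires + female_hires)
        history.append(share)
    return history


if __name__ == "__main__":
    for i, s in enumerate(simulate()):
        print(f"round {i}: men are {s:.1%} of positive training examples")
```

Running it prints a male share that rises every round, from 55% to roughly 60% after one round and toward 100% within a few more, even though the two groups never differ in true interest. With `sharpness=1.0` (impressions allocated exactly in proportion to the data), the share stays flat, which is why the over-weighting assumption matters in this sketch.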

Unfortunately, targeted ad firms are incentivized to prioritize ad clicks over fairness. And even a firm that wants to fix this specific issue may find it harder than it seems, given the interconnectedness of the data inputs and the complexity of learning algorithms.

Furthermore, other industries with high societal impact are increasingly using machine learning. In particular, the banking and financial services sector now commonly uses machine learning for credit risk assessment and offer decisions, lowering costs by reducing the probability of consumer default. However, a troubling trend is showing up in these targeted models: rather than being based on behaviors, credit decisions are increasingly based on characteristics. Traditional credit scoring models compared a consumer’s known credit behaviors, such as past late payments, with data showing how people with the same behaviors performed in meeting their credit obligations. Many banks, however, now purchase predictive analytics products for eligibility determinations that base decisions on characteristics like store preferences and purchases (FTC Report Reference). While seemingly innocuous, such characteristics can be highly correlated with protected traits like gender, age, race, or family status. By bringing characteristics into their predictive models, financial services firms can often make their models more accurate overall. However, this often comes at the expense of people from disadvantaged groups, who may be rejected for an offer even though they have exhibited exactly the same behavior as an approved applicant, setting both the individual and the group further back. Depending on the circumstances, the companies that leverage characteristics most heavily may end up with more accurate models, giving them an unethical “leg up” on the competition.
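The proxy effect described above can be shown with a toy scoring example. This is a minimal sketch under hypothetical assumptions, not any bank’s or vendor’s actual model: the 700-point base, the 40-point late-payment penalty, the made-up `shops_at_store_x` characteristic and its 30-point penalty, and the 650 cutoff are all invented for illustration. The protected `group` label is never fed into either score.

```python
from dataclasses import dataclass
from statistics import mean

APPROVAL_CUTOFF = 650  # hypothetical score needed for approval


@dataclass
class Applicant:
    late_payments: int      # behavior: the applicant's own credit history
    shops_at_store_x: bool  # characteristic: hypothetical purchase-history signal
    group: str              # protected trait; never used as a model input


def behavior_score(a: Applicant) -> float:
    """Behavior-based score: only the applicant's own repayment record counts."""
    return 700 - 40 * a.late_payments


def characteristic_score(a: Applicant) -> float:
    """Same behavior term plus a made-up penalty for a purchase characteristic."""
    return behavior_score(a) - (30 if a.shops_at_store_x else 0)


def approval_rates_by_group(population, scorer) -> dict[str, float]:
    """Share of each group whose score clears the cutoff."""
    groups = sorted({a.group for a in population})
    return {
        g: mean(1.0 if scorer(a) >= APPROVAL_CUTOFF else 0.0
                for a in population if a.group == g)
        for g in groups
    }


# Toy population: identical behavior (one late payment each), but the store
# characteristic is far more common in group B than in group A.
population = (
    [Applicant(1, i < 2, "A") for i in range(10)]
    + [Applicant(1, i < 8, "B") for i in range(10)]
)

if __name__ == "__main__":
    print(approval_rates_by_group(population, behavior_score))
    print(approval_rates_by_group(population, characteristic_score))
```

Under the behavior-only score every applicant scores 660 and is approved. Under the characteristic score, group A’s approval rate drops to 80% and group B’s to 20%, even though every applicant has the same repayment record and group membership is never an input.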

However, the complexity of data collection, transformation, and algorithms makes these issues very difficult to regulate, and it is also critical not to stifle innovation. As such, industry experts have recommended adapting existing industry regulation to account for machine learning rather than creating a set of all-encompassing rules (FTC Report Reference), an approach that fits well within the contextual integrity of existing frameworks. For example, motor vehicle regulation should adapt to AI in self-driving cars (FTC Report Reference). Statutes like the Fair Credit Reporting Act, the Equal Credit Opportunity Act, and Title VII already govern how consumer data may be used in credit and employment decisions and prohibit overt discrimination against protected groups. As such, much of the statutory framework already exists, but it may need to be strengthened to include language on characteristic-based exclusion.

Moreover, the British Information Commissioner’s Office recommends that project teams perform a Privacy Impact Assessment that identifies data needs and noteworthy data collection, describes information flows, and identifies privacy risks, all of which help reduce the scope of privacy intrusion. Similarly, I recommend that teams perform an Unintended Outcome Assessment before launching a targeted advertising campaign or learning-based offer, bringing together project managers and data scientists to brainstorm unintended discriminatory reinforcement effects and propose mitigating procedures or model updates.
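As one possible quantitative input to such an assessment, a team could compare selection rates across groups. The sketch below borrows the “four-fifths” rule of thumb from US employment selection guidelines (a group whose selection rate is below 80% of the highest group’s rate is flagged for possible adverse impact). The function names and campaign counts are hypothetical, and a flag is a prompt for the team’s discussion, not a legal determination.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to its selection rate; outcomes maps group -> (selected, total)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no group is disadvantaged relative to another.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, tuple[int, int]],
                        threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the threshold (four-fifths rule of thumb)."""
    return sorted(g for g, ratio in impact_ratios(outcomes).items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical counts: (people shown a high-income job ad, people in the audience)
    campaign = {"men": (600, 1000), "women": (400, 1000)}
    print(impact_ratios(campaign))
    print(flag_adverse_impact(campaign))
```

In this hypothetical campaign, women are shown the ad at two-thirds the rate of men, below the 0.8 threshold, so the check flags them; the team would then investigate whether a feedback loop or a proxy characteristic is driving the gap.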