The TikTok Hubbub: What’s Different This Time Around?

By Anonymous | September 25, 2020

Barely three years since its creation, TikTok is the latest juggernaut to emerge in the social media landscape. With over two billion downloads (over 600 million of which occurred just this year), the short video sharing app that allows users to lip sync and share viral dances finds itself among the likes of Facebook, Twitter, and Instagram in both the size of its user base and ubiquitousness in popular culture. Along with this popularity has come a firestorm of criticism related to privacy concerns, as well as powerful players in the U.S. government categorizing the app as a national security threat.

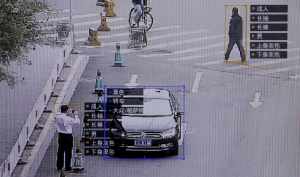

Image from: https://analyticsindiamag.com/is-tiktok-really-security-risk-or-america-being-paranoid/

Censorship

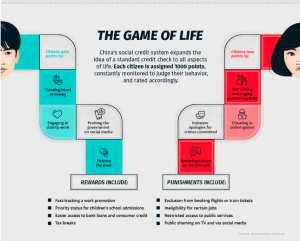

The largest reason TikTok seems to garner such scrutiny is the app’s parent company, ByteDance, is a Chinese company, and as such is governed by Chinese laws. Early criticisms of the company noted possible examples of censorship, including the removal of a teen’s account who was critical of human rights abuses by the Chinese government, and a German study that found TikTok hid posts made by LGBTQ users and those with disabilities. Exclusion of these viewpoints from the platform certainly raises censorship concerns. It is worth noting TikTok is not actually available in China, and the company maintains that they “do not remove content based on sensitivities related to China”.

Data Collection

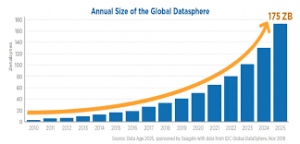

Like many of its counterparts, TikTok collects a vast amount of data from its users, including location, IP addresses, and browsing history. In the context of social media apps, this seems to be the norm. It is the question of where this data might ultimately flow that garners the most criticism. The Wall Street Journal notes “concerns grow that Beijing could tap the social-media platform’s information to gather data on Americans.” The idea that this personal information could be shared with a foreign government is indeed alarming, but might have one wondering why regulators have been fairly easy on U.S. based companies like Facebook, whose role in 2016’s election interference is still up for debate, or why citizens do not find it more problematic that the U.S. government frequently requests user information from Facebook and Google. In contrast to the U.S. Government, the European Union has been at the forefront of protecting user privacy and took preemptive steps by implementing the GDPR so that foreign companies, such as Facebook, could not misuse user data without consequence. It seems evident that control of personal data is a concern globally, but one that the U.S. is only selectively taking seriously if it stems from a foreign company.

The Backlash

In November 2019, with bipartisan support, a U.S. national security probe of TikTok was initiated over concerns of user data collection, content censorship, and the possibility of foreign influence campaigns. In September 2020, President Trump went so far as to implement a ban on TikTok in the U.S. Currently, it appears that Oracle has become TikTok’s “trusted tech partner” in the United States, possibly allaying some fears of where data is stored and processed for the application, and under whose authority, providing a path for TikTok to keep operating within the U.S.

For its part, TikTok is attempting to navigate very tricky geopolitical demands (the app has also been banned in India, and Japan and others may follow), even establishing a Transparency Center to “evaluate [their] moderation systems, processes and policies in a holistic manner”. Whether their actions will actually be able to assuage both the public and government’s misgivings is anyone’s guess, and it can also be argued that where the data they collect is purportedly stored and who owns the company are largely irrelevent to the issues raised.

As the saga over TikTok’s platform and policies continues to play out, hopefully the public and lawmakers will not miss the broader issues raised over privacy practices and user data. It is somewhat convenient to scrutinize a company from a nation with which the U.S. has substantive human rights, political, and trade disagreements. While TikTok’s policies should indeed raise concern, we would do well to ask many of the same questions of the applications we use, regardless of where they were founded.