Are we ready to trust machines and algorithms to decide, for all?

By Naga Chandrasekaran | July 9, 2021

Science Fiction to Reality:

I wake up to soothing alarm music and mutter, “Alexa, turn off!” I pick up an espresso from the automated coffee machine and begin my Zoom workday. The Ring doorbell alerts me to a prepaid lunch delivered by Uber Eats. After lunch, I interview candidates recommended to me by an algorithm. After work, I ride an autonomous Tesla to meet a date I matched with on Tinder. Back home, I share my day on my social media accounts and watch a movie recommended by Netflix. Around midnight, I ask Alexa to switch off the lights and set an alarm for the morning. Machines are becoming our life partners!

Digital Transformation Driving Data Generation Imbalance:

Through the seamless integration of technology into every aspect of life, we share personally identifiable information (PII) and much more, generating over 2.5 exabytes of data per day [1]. Advances in semiconductors, algorithmic power, and the availability of big data have led to significant progress in data science, artificial intelligence (AI), and machine learning (ML). This progress is helping solve cosmetic, tactical, and strategic issues affecting individuals and societies everywhere. But is it making the world a better place, for all?

Digital transformation is influencing only part of the world’s population. In January 2021, 59.5% of the global population were active internet users [3]. The share is lower still for users of digital devices at the edge. These users are the ones who contribute to data generation. The categories and classifications created by data scientists therefore represent only the wealthy individuals and developed nations that generated the data. Such classifications are incomplete.

An interconnected world also generates data from unwitting participants who are thrust into the system through surveillance and interrogation [4, 6]. Even for willing participants, privacy challenges emerge when their data is used outside its original context [5]. When data leaks, data providers bear a high risk of harm relative to the benefit they receive [6]. Privacy policies, established by the organizations that collect such data, are focused on defensive measures instead of ethics. These issues drive users to avoid participating or to provide limited information, which leads to inaccuracies in the dataset.

As pointed out by Sandra Harding, “all scientific knowledge is always socially situated” [7]. Knowledge generated from data has inbuilt bias and exclusions. In addition, timely access to this data is limited to a few. The imbalance arising from positions of power, social settings, data inaccuracy, and incomplete datasets creates bias in our accumulated knowledge.

AI Cannot be Biased!

We apply this imbalanced knowledge to further our wisdom, which is the ability to discern and make decisions. This is the field of AI/ML, also termed predictive analytics. When our data and knowledge are inaccurate and biased, our algorithms and decisions reconfirm our bias (e.g., Amazon's recruiting algorithm). When decisions have limited impact (e.g., movie recommendations), we have the opportunity to explore algorithmic decision making. However, when decisions have deep societal impact (e.g., criminal sentencing), would we turn our decision making over to AI? [8, 9]

Big data advocates claim that with sufficient data we can reach the same conclusions as scientific inquiry. However, data is just an input, and it has inherent issues. There are other external factors that shape reality. We have to interrogate how the data was generated: Who is included and who is excluded? Does the variance account for diversity? Whose interests are represented? Without such exploration of the input data, the outputs do not represent the true world. To become wiser, we have to recognize that our knowledge is incomplete and our algorithms are biased.
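One way to start asking who is included and who is excluded is to compare each group's share of a dataset with its share of the population the dataset is meant to describe. The snippet below is a minimal sketch of that idea, not a complete bias audit. The function name `representation_gaps`, the group labels, and all of the numbers are hypothetical and chosen only for illustration.

```python
def representation_gaps(sample_counts, population_shares):
    """Return each group's share of the sample minus its share of the population.

    A positive gap means the group is over-represented in the data;
    a negative gap means it is under-represented.
    """
    total = sum(sample_counts.values())
    if total == 0:
        raise ValueError("sample_counts must contain at least one record")
    gaps = {}
    for group, population_share in population_shares.items():
        # Groups missing from the sample count as zero records.
        sample_share = sample_counts.get(group, 0) / total
        gaps[group] = sample_share - population_share
    return gaps


# Made-up numbers for illustration only (not taken from any real dataset).
sample_counts = {"connected": 900, "unconnected": 100}
population_shares = {"connected": 0.6, "unconnected": 0.4}

gaps = representation_gaps(sample_counts, population_shares)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A gap near zero does not mean a dataset is fair. It only shows that one particular imbalance is absent, so a check like this supplements human judgment rather than replacing it.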

Collaborative Human – Machine Model:

In the scene described at the beginning of this article, it appears that humans are making the decisions while enjoying the benefits of technology. However, it is possible that our decisions are influenced by hidden technology bias. As depicted in the Disney-Pixar movie Wall-E, are we creating a world where humans will forget their purpose?

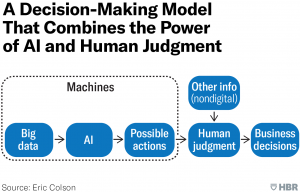

Given these issues in the digitally transforming world and its associated datasets, how can we progress? Technology is always a double-edged sword. It can force change in the world despite existing social systems, and social systems can likewise force change in technology. The interplay between technology and the people who interact with it is critical to making sure the social fabric is protected and moving in the right direction. Given the data issues identified above, we cannot delegate all our decisions to algorithms and machines. We need to continue to optimize our data and algorithms with human judgment [10]. Data scientists have a role to play beyond data analysis. How power is delegated and distributed between humans and machines is extremely important in making the digitally transformed world a better place for all.

References:

[1] Jacquelyn Bulao, 2021, How much data is created everyday in 2021, Link

[2] https://www.securehalo.com/improve-third-party-risk-management-program-four-steps/

[3] Global digital population, Statista analysis, 2021, Link

[4] Daniel Solove, 2006, A Taxonomy of Privacy, Link

[5] Helen Nissenbaum, 2004, Privacy as Contextual Integrity, Link

[6] The Belmont Report, 1979, Link

[7] Sandra Harding, 1986, The Science Question in Feminism, Link

[8] Ariel Conn, 2017, When Should Machines Make Decisions, Link

[9] Janssen et al., 2019, History and Future of Human Automation Interaction, Link

[10] Eric Colson, 2019, What AI Driven Decision Making Looks Like, Link