The Appeal and the Dangers of Digital ID for Refugees Surveillance

By Joshua Noble | October 29, 2021

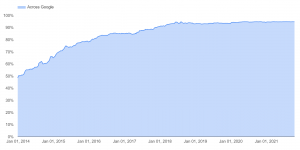

Digitization of national identity is growing in popularity as governments across the world seek to modernize access to services and streamline their own data stores. Refugees often lack any form of ID, especially those who have fled war-torn areas at short notice with few belongings or who have made dangerous or arduous journeys. Governments are frequently unwilling to provide ID to the stateless, since they have often not yet determined whether they will allow a displaced person to stay, and in some cases the stateless person may not want to stay in that country. Many agencies are beginning to explore non-state Digital ID as a way of providing some form of identity to stateless persons, among them the UNHCR, the Red Cross, the International Rescue Committee, and the UN Migration Agency. For instance, a UNHCR press release states: “UNHCR is currently rolling out its Population Registration and Identity Management EcoSystem (PRIMES), which includes state of the art biometrics.”

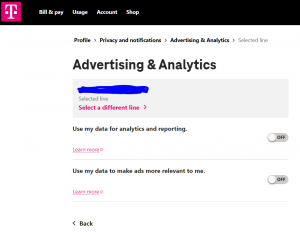

The need for stateless persons to have a way to identify themselves is made all the more urgent by the approaches that governments have begun to take to identifying refugees. Governments are increasingly using migrants’ electronic devices as verification tools. This practice is made easier by mobile extraction tools, which allow an individual to download key data from a smartphone, including contacts, call data, text messages, stored files, location information, and more. In 2018, the Austrian government approved a law forcing asylum seekers to hand over their phones so authorities could check their origin. The aim was to determine whether an asylum request should be invalidated because the applicant was found to have previously entered another EU country.

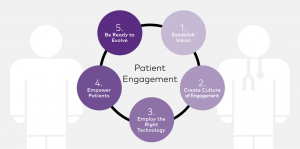

NGO-provided ID initiatives may convince governments to abandon or curtail these highly privacy-invasive strategies. But while the intention behind these initiatives is often charitable, they share a problem common to many attempts to uniquely identify people: access to services is often tied to the creation of the ID itself. For a person who is stateless, homeless, and in need of aid, who has just arrived in a new country and been shuttled to a camp, this can feel like coercion. Informed consent on the part of refugees is often absent. The agencies creating these data subjects frequently fail to adequately educate them about what data is being collected and how it will be stored. Once data is collected, refugees face extensive bureaucratic challenges if they want to change or update it. The agencies offer little transparency about how data is stored, used, and offered and, most importantly, with whom it might be shared both inside and outside of the organizations collecting it.

Recently, as NGOs and aid agencies fled Afghanistan while the US military abandoned the country, thousands of Afghans who had worked with those organizations began to worry that biometric databases and their own digital history might be used by the Taliban to track and target them. In another example of the risks of using biometric data, the UNHCR shared information on Rohingya refugees with the government of Bangladesh. The Bangladeshi government then sent that same data to Myanmar to verify people for possible repatriation. Both of these cases illustrate the real and present risk that creating and storing biometric data and ID can pose.

While the need for ID is real and the benefits it can provide are significant, having ad hoc and temporary institutions issue those IDs and collect and store the data associated with them poses not only privacy risks to refugees but often real and present physical danger as well.

UNHCR. 2018. “UNHCR Strategy on Digital Identity and Inclusion.” [https://www.unhcr.org/blogs/wp-content/uploads/sites/48/2018/03/2018-02-Digital-Identity_02.pdf](https://www.unhcr.org/blogs/wp-content/uploads/sites/48/2018/03/2018-02-Digital-Identity_02.pdf)

IOM & APSCA. 2018. “5th Border Management and Identity Conference (BMIC) on Technical Cooperation and Capacity Building.” Bangkok: BMIC. [http://cb4ibm.iom.int/bmic5/assets/documents/5BMIC-Information-Brochure.pdf](http://cb4ibm.iom.int/bmic5/assets/documents/5BMIC-Information-Brochure.pdf)

Red Cross 510. 2018. “An Initiative of the Netherlands Red Cross Is Exploring the Use of Self Managed Identity in Humanitarian Aid with Tykn.Tech.” [https://www.510.global/510-x-tykn-press-release/](https://www.510.global/510-x-tykn-press-release/)

UNHCR. 2018. “Bridging the identity divide – is portable user-centric identity management the answer?” [https://www.unhcr.org/blogs/bridging-identity-divide-portable-user-centric-identity-management-answer/](https://www.unhcr.org/blogs/bridging-identity-divide-portable-user-centric-identity-management-answer/)

Data & Society. 2020. “Digital Identity in the Migration & Refugee Context.” [https://datasociety.net/wp-content/uploads/2019/04/DataSociety_DigitalIdentity.pdf](https://datasociety.net/wp-content/uploads/2019/04/DataSociety_DigitalIdentity.pdf)