How to seek abortion care with a minimal data trail

Jenna Morabito | July 7, 2022

Thinking like a criminal in a post-Roe v. Wade country

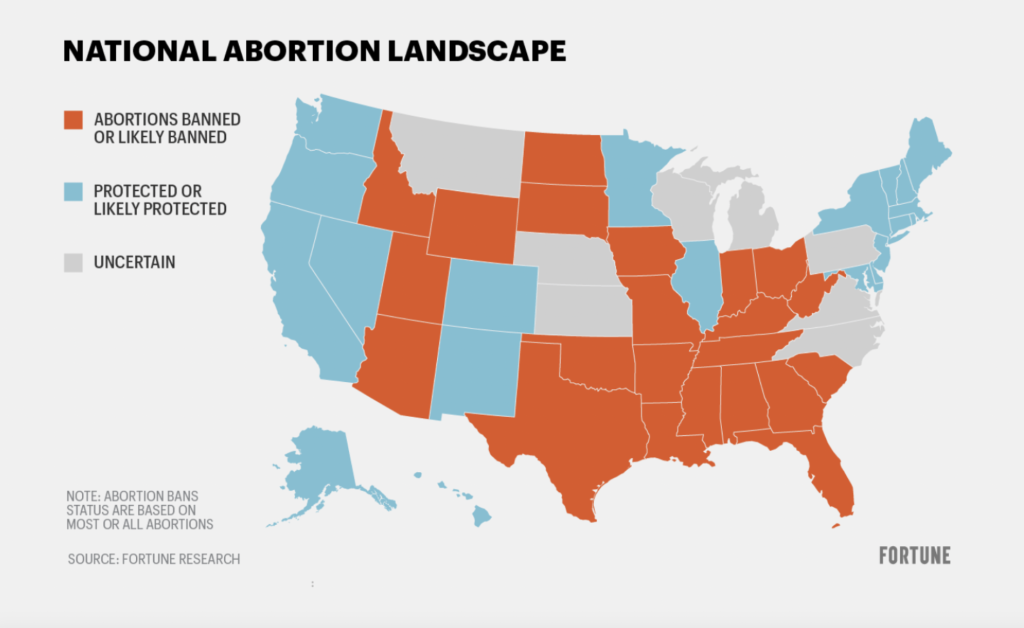

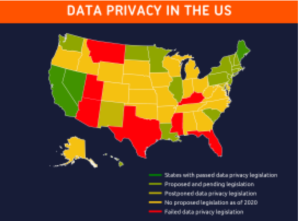

America is starting to roll back human rights: law enforcement is already using facial recognition to arrest protestors, and could start using location and menstruation data to prosecute women seeking an abortion. It is important that people know what options they have to stay private, and are aware that privacy comes with significant challenges and inconveniences.

Step 1: Remove app permissions

This advice has been all over the internet since the Supreme Court overturned Roe v. Wade, but it bears repeating: request that your period tracking app delete your data, close your account, and delete the app. Cycle tracking apps and other businesses have been caught selling health data to Facebook and other companies, giving law enforcement more places to find your data. Even if companies don’t sell your data, they can be compelled by subpoena to turn it over to law enforcement in the event of an investigation. I am not a law professional, but my guess is that using a foreign-owned app isn’t enough either: the European-based cycle tracking app Clue might have to relinquish your data to US law enforcement under the EU-US Data Protection Umbrella Agreement. How to request erasure varies by app, but a tutorial on deleting your data from Flo can be found here and one method of tracking your cycles by hand can be found here.

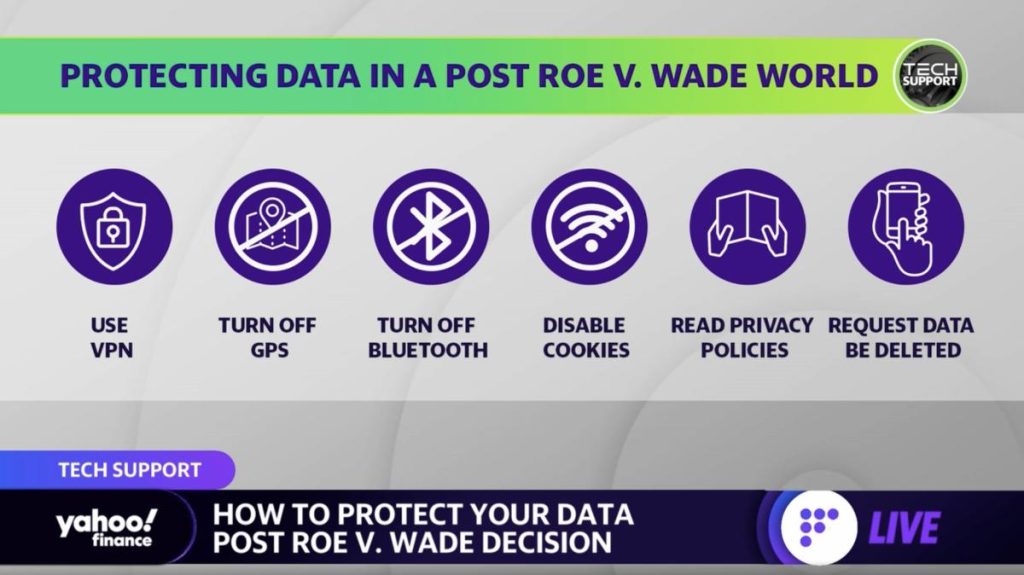

Then, go through all your apps and remove as many permissions as you can. This isn’t a trivial task; I found multiple places in my phone settings to restrict apps’ data access, and had to go through each one individually. As part of removing permissions I turned off location tracking on my phone, but location can still be very accurately pinpointed with Wi-Fi location tracking (your phone detecting the networks on the street you’re walking down), and the sites that you visit learn your general location from your IP address, the number that identifies your internet connection. The best way to disable Wi-Fi tracking is to turn off Wi-Fi on your phone, and you can hide your IP address by using a Virtual Private Network.

Step 2: Secure your web browsing

Step 2.1: Get a VPN

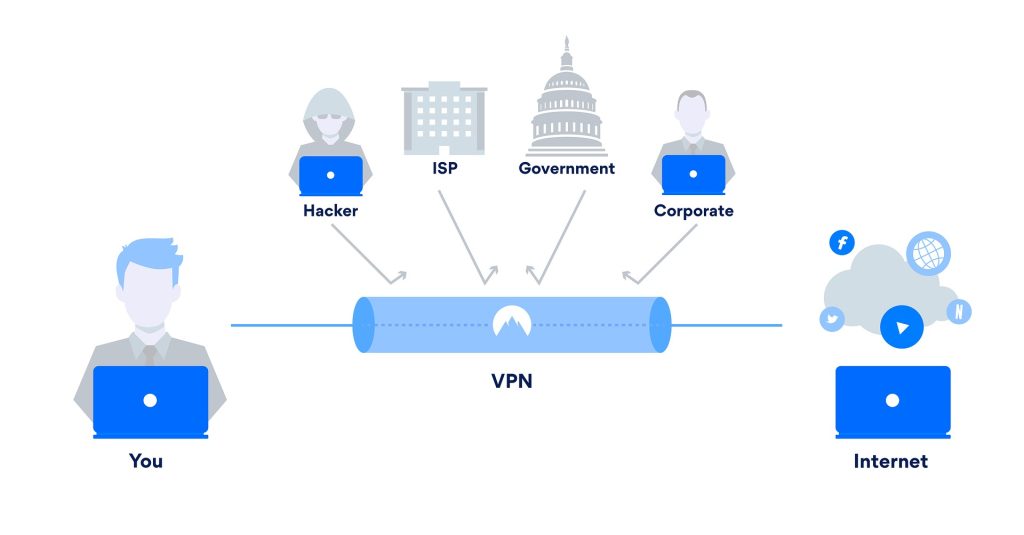

A Virtual Private Network, or VPN, is a privacy heavyweight. Without a VPN, the government, businesses, and other entities can track your IP address across websites and even see your activity on a website, depending on the security protocol of the specific site, meaning that law enforcement could see that you visited websites having to do with pregnancy or abortion. A VPN is your shield: you (and your IP address) connect to the VPN’s server, and the VPN forwards your request on, but with its own IP address for the website to send information back to. In addition, the VPN encrypts the traffic between your device and its server. Your internet service provider and anyone else watching your connection can’t see which websites you visit or what you do on them, and the websites you visit don’t see your real IP address.

Not all VPNs are created equal, though. As your shield they can see what websites you go to, and some VPNs turn around and sell that information. Others don’t sell it but do keep records of your activity, which means that we have the same subpoena problem as before, and so it’s important to use a VPN that doesn’t keep logs. There are a few; the one I use is NordVPN. I encourage you to do some research and find a good service for you, but Nord doesn’t keep logs, has a variety of security features, and can be bought with cash at places like Best Buy or Target, making it an anonymous purchase. They also have seamless integration with the collection of technologies called Tor, which makes the next step simpler. One thing to note, though, is that if you are logged in to your Google account at the browser or device level, or use any Google apps on your phone, then Google can still see and store your browsing activity, bypassing the privacy that the VPN offers. Therefore, I suggest using Google services only when strictly necessary, and switching to more privacy-focused services like the ones that I recommend in later sections.

Step 2.2: Use Tor

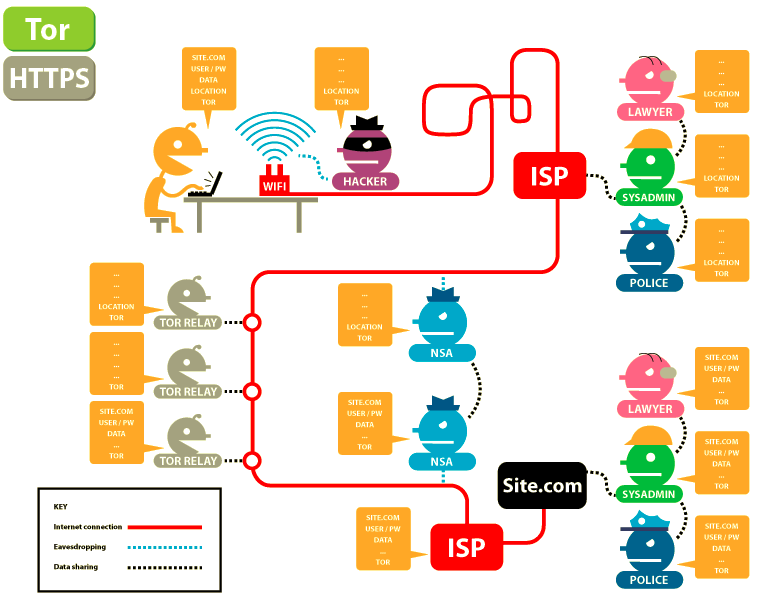

Tor is two things: a networking system, and a special browser. The networking system works by bouncing your request around between three random servers, where one layer of encryption gets peeled off at each stop so that upon exiting the network, the website knows what your request is. The information you requested then travels back through the same three servers in reverse, picking up a layer of encryption at each one, so that it reaches you triple-encrypted.
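For readers who think in code, the layering idea can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not how Tor is actually implemented: the relay keys are made-up values, the helper names (`keystream`, `xor_layer`, `wrap`, `route`) are hypothetical, and the XOR scheme only stands in for the real cryptography. It shows a request being wrapped in three layers and each server peeling one off.

```python
import hashlib

def keystream(key: bytes, length: int) -> bytes:
    """Derive a repeatable byte stream from a key (toy construction, not secure)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]

def xor_layer(data: bytes, key: bytes) -> bytes:
    """Add or remove one layer; XOR with the same keystream undoes itself."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))

def wrap(request: bytes, relay_keys: list[bytes]) -> bytes:
    """Sender adds the exit server's layer first and the entry server's layer last."""
    for key in reversed(relay_keys):
        request = xor_layer(request, key)
    return request

def route(onion: bytes, relay_keys: list[bytes]) -> list[bytes]:
    """Each server peels one layer; record what each one is left holding."""
    seen = []
    for key in relay_keys:
        onion = xor_layer(onion, key)
        seen.append(onion)
    return seen

# Hypothetical keys and request, for illustration only.
relay_keys = [b"entry-key", b"middle-key", b"exit-key"]
request = b"GET /clinic-hours"

onion = wrap(request, relay_keys)
hops = route(onion, relay_keys)

print(hops[0] == request)  # False: the entry server still sees scrambled bytes
print(hops[1] == request)  # False: so does the middle server
print(hops[2] == request)  # True: only the exit server recovers the request
```

The point of the design is that no single server has the full picture: the first one knows who you are but not what you asked for, and the last one knows what was asked for but not who asked.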

To access the network system you download the Tor browser, or use the network through NordVPN or another service with Tor integration. Though Tor is a sophisticated technology that makes tracking people a headache, it’s not perfect. Cybernews.com puts the additional steps that should be taken when using Tor succinctly:

- Don’t log into your usual accounts – especially Facebook or Google

- Try not to follow any unique browsing patterns that may make you personally identifiable.

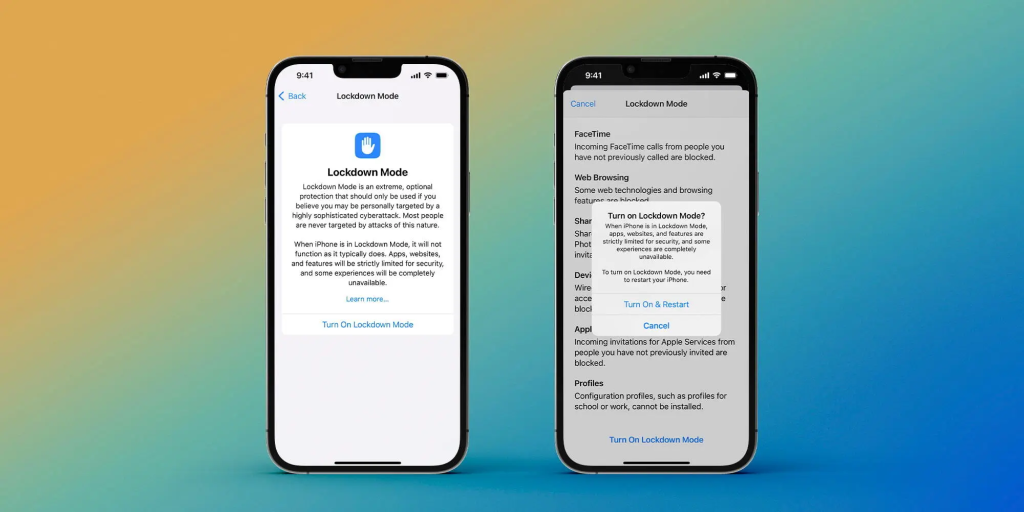

- Turn the Tor Browser’s security level up to the max. This will disable JavaScript on all sites, disable many kinds of fonts and images, and make media like audio and video click-to-play. This level of security significantly decreases the amount of browser code that runs while displaying a web page, protecting you from various bugs and fingerprinting techniques.

- Use the HTTPS Everywhere extension. This will ensure you’re only browsing HTTPS websites and protect the privacy of your data as it goes between the final node and the destination server.

- As a general rule, never use BitTorrent over Tor. Although people illegally pirating copyrighted content may wish to obscure their real identity, BitTorrent is extraordinarily difficult to use in a way that does not reveal your real IP address. Tor is relatively slow, so BitTorrent is hardly worth using over Tor anyway.

- Most importantly, always keep Tor Browser (and any extensions) updated, reducing your attack surface.

You shouldn’t log in to Facebook or Google because those sites are notorious for tracking you, but also because logging into any site with personally identifiable information is a clear indication that you’ve been there, much like using your keycard to enter your office building. I will discuss this more later, but this means that special precautions should be taken when ordering abortion pills online, as your shipping and payment information lead straight to you. In short, you don’t need to use Tor every day, only when searching for sensitive information. For suggestions on a more casual privacy-focused browser, check out this list.

Additionally, you should always turn your VPN on before using Tor; otherwise you may attract your internet service provider’s suspicion. While Tor is legal to use and the New York Times even has a Tor-specific website so that people living under oppressive regimes can access outside news, Tor is known to facilitate serious crime: drug trafficking, weapons sales, even distributing child pornography. I feel significant discomfort with recommending that an average citizen use a technology that is in part sustained by truly evil crime, but what’s the appropriate balance between privacy and public safety if you can’t trust your government to protect basic human rights?

Step 3: Ditch Google

Google is ubiquitous, convenient, and free to use – although since you’re not paying with money, you’re paying with your data. I’m focusing on Google here since it’s so popular, but the type of data that Google collects is likely collected by other big companies, too.

Google Chrome and Google Search each know a surprising amount of information about you: your device’s timezone, language, privacy settings, and even what fonts you have installed; this collection of things comprises your browser’s potentially unique fingerprint, and you can check out yours here. According to RestorePrivacy.com there’s not a lot you can do about your smartphone’s fingerprint, but you can minimize digital fingerprinting on your computer by tinkering with settings and add-ons or changing browsers. The browser Brave offers built-in fingerprint protection as well as easy Tor access and seems like a good out-of-the-box option for those who don’t want to fuss, although I haven’t tried it myself.
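To make the idea of a fingerprint concrete, here is a minimal Python sketch of how a handful of ordinary settings can be boiled down into one identifier. It is an illustration under assumptions, not what Google or any real tracker actually runs: the `fingerprint` function and the attribute values are hypothetical, and real fingerprinting scripts collect far more signals.

```python
import hashlib
import json

def fingerprint(attributes: dict) -> str:
    """Hash a canonical form of the attributes into a short identifier."""
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]

# Hypothetical browser settings, for illustration only.
visitor = {
    "timezone": "America/Chicago",
    "language": "en-US",
    "do_not_track": True,
    "fonts": ["Arial", "Calibri", "Comic Sans MS"],
}
same_visitor_later = dict(visitor)                           # same settings, new visit
other_visitor = dict(visitor, fonts=["Arial", "Helvetica"])  # one setting differs

print(fingerprint(visitor) == fingerprint(same_visitor_later))  # True
print(fingerprint(visitor) == fingerprint(other_visitor))       # False
```

No cookie or login is involved: the same settings produce the same identifier on every visit, which is why the more unusual your combination of settings is, the easier you are to recognize.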

Most notably, Chrome and Google Search store your browsing history, which law enforcement might then be able to access with a keyword warrant, identifying individuals who made searches deemed to be suspicious. To avoid getting attention when searching for abortion providers, you should switch to a search engine that logs the minimum amount of data and/or stores the log outside the U.S. and EU. There isn’t any one silver bullet, but RestorePrivacy.com suggests a few privacy-focused search engines here.

Google Maps similarly stores, sells, and will share your data with law enforcement, so you may want to use DuckDuckGo’s free map service built on top of Apple Maps, although you lose some of Apple Maps’ functionality. Email providers’ privacy violations are particularly bad, though: Google was caught reading users’ emails in 2018, and Yahoo scanned all users’ incoming emails in real time for the FBI. American companies can be legally compelled to comply with such requests, as the NSA’s PRISM surveillance program showed. Google also scanned users’ emails for receipts and stored them, which would be dangerous for those buying abortion pills.

Beyond just reading your emails, having a Google account signs you up for all sorts of tracking; check it out yourself at myactivity.google.com. I found YouTube videos that I’d watched years ago and every hole-in-the-wall café I’ve ever been in; the information that Google has on me creates a fuller timeline of my life than my journal does. More private email services are less convenient and are often paid subscriptions, but a tutorial on how to evade regressive laws wouldn’t be complete without discussing communication technologies. This article does a good job laying out pros and cons of different email services as well as explaining which jurisdictions to look for: the U.S. and some European companies are subject to invasive requests from law enforcement, so even if Google stopped selling data to advertisers, users would still be at risk.

Finally, be careful where you send messages. Text messages are not encrypted, meaning that they can be read by your service provider. Signal is a free messaging app that only stores three things about you: “the phone number you registered with, the date and time you joined the service, and the date you last logged on” (RestorePrivacy.com). They don’t store who you talk to or what you talk about, and they encrypt your messages end to end, making it a good place to make sensitive plans.

Step 4: Seek medical care (carefully)

With this knowledge, I hope that you can safely seek the care you need. With today’s level of surveillance I’m not confident that I even know all the ways that our movements are tracked once we leave the house: license plate scanners give our travel path in almost real time, GPS-enabled cars do the same, so does calling an Uber, and so on. Assuming that you can drive 400+ miles to the nearest abortion clinic without detection, you’ll want to buy a simple burner phone for your travels so that mobile proximity data (what other mobile phones your phone has been near) doesn’t give you away, and I suppose that you should pay for your hotel room with a prepaid Visa card that you bought with cash.

Or, if at all possible, get a friend living in a state where abortion is legal to buy the pills for you and ship them to you, so you can avoid revealing your payment information and address online. Coordinate with them on Signal and pay them back with a prepaid Visa card.

Conclusion

It’s dystopian. The American government has abandoned its citizens in a new and exciting way and as always, has disproportionately harmed poor womxn. I know that this tutorial isn’t the most accessible since it requires time, good English, and computer literacy to implement. I know that privacy costs money: NordVPN charges $40-70 a year, a private email account is $20-50 a year, and that’s before the cost of an abortion or travel expenses.

However, I didn’t master all these technologies in a day, either. I’ve implemented – and continue to implement – more private practices around my data one at a time. The world is scary right now, but we can help each other get through it by sharing our expertise and resources. I hope that I’ve helped clarify how to take control of your data, which is a tedious process but empowering. We don’t owe corporations, and certainly not law enforcement, any of our intimate information.

“I can promise you that women working together – linked, informed, and educated – can bring peace and prosperity to this forsaken planet.” – Isabel Allende

Or at least to my forsaken search history.