Is the GDPR’s Bark Bigger than its Bite?

by Zach Day on 10/21/2018

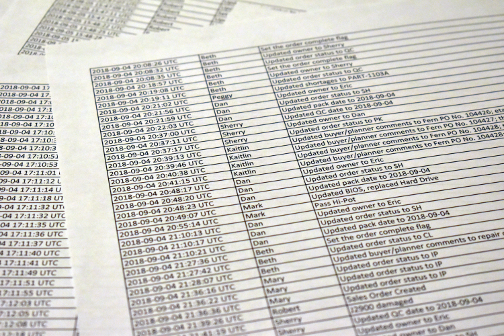

The landmark EU regulation formally called the General Data Protection Regulation, or GDPR, took effect on May 25, 2018. Among other protections, the GDPR grants "data subjects" a bundle of new rights and places increased obligations on the companies that collect and use their data. Firms were given two years' notice to make the changes needed to bring them into compliance by May 2018.

Image Credit: https://www.itrw.net/2018/03/22/what-you-need-to-know-about-general-data-protection-regulation-gdpr/

I don't think I'm reaching too far to make this claim: some for-profit enterprises won't do the right thing just because it's the right thing, especially when the right thing is costly. Do the Data Protection Authorities (DPAs) of the EU member states have enforcement tools powerful enough to motivate firms to invest in the systems and processes that compliance requires?

Let's compare two primary enforcement tools: monetary fines and bad press coverage.

Monetary Fines

When the UK Information Commissioner's Office released its findings on Facebook's role in the Cambridge Analytica scandal, the fine was capped at £500,000 (about $661,000). This is because Facebook's transgressions occurred before the GDPR took effect and were therefore subject to the UK Data Protection Act 1998, the UK's GDPR precursor, which specifies a maximum administrative fine of £500,000. How painful do you think a sub-million-dollar fine is for a company that generated $40B of revenue in 2017?

The GDPR vastly increases the maximum potential fine to €20M or 4% of the company's worldwide annual turnover, whichever is higher. For Facebook, this would have allowed a fine of up to ~$1.6B. That's more like it.
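The "whichever is higher" rule means the percentage term dominates for any large firm. A rough worked calculation, assuming Facebook's 2017 revenue of about $40B stands in for turnover (an approximation; the regulation refers to the preceding financial year's worldwide turnover, written $T$ here) and setting currency conversion aside, since €20M is tiny next to either result:

```latex
\text{Maximum fine} = \max\bigl(\text{EUR}~20\text{M},\; 0.04 \times T\bigr)

0.04 \times \$40\text{B} = \$1.6\text{B} \gg \text{EUR}~20\text{M}
\quad\Rightarrow\quad \text{Maximum fine} \approx \$1.6\text{B}

\text{For comparison: } \frac{\$661{,}000}{\$40\text{B}} \approx 0.0017\% \text{ of revenue}
```

The last line puts the pre-GDPR UK fine in the same terms: under the old regime, the penalty amounted to less than two thousandths of one percent of Facebook's annual revenue.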

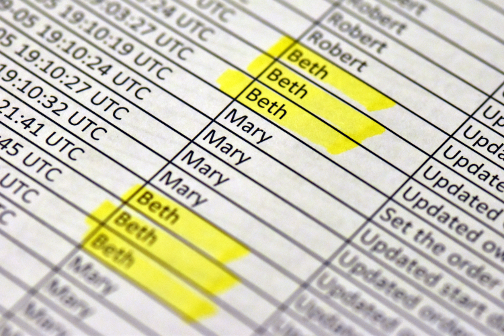

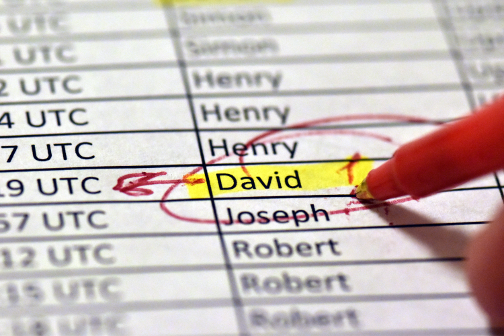

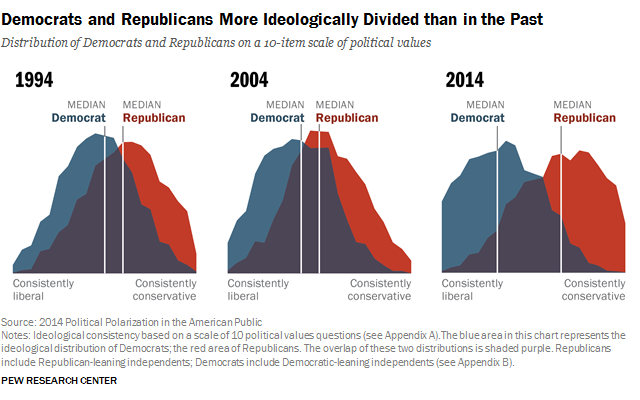

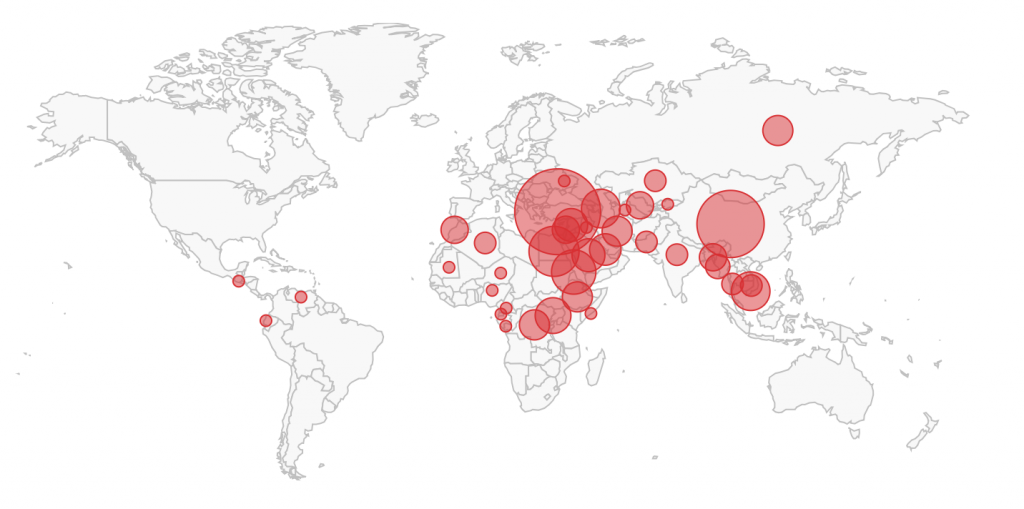

But how effectively can EU countries enforce the GDPR? Enforcement occurs at the national level, with each member state having its own Data Protection Authority, and each DPA has broad enforcement discretion. Because of this, there will inevitably be variation in enforcement from country to country. Countries like Germany, with a strong cultural emphasis on individual privacy, may enforce the GDPR with far more gusto than countries like Malta or Cyprus.

Monetary fines are not going to be the go-to tool for every enforcement case brought under the GDPR. DPAs have broad investigative powers, such as carrying out audits, obtaining access to stored personal data, and accessing the premises of the data controller or processor, as well as corrective powers to issue warnings, reprimands, orders, and bans on processing. These tools will likely be used far more often than fines. That said, the first few cases will be anomalies, since (a) media outlets are chomping at the bit to report on the first enforcement actions taken under the GDPR and (b) DPAs will be trying to send a message.

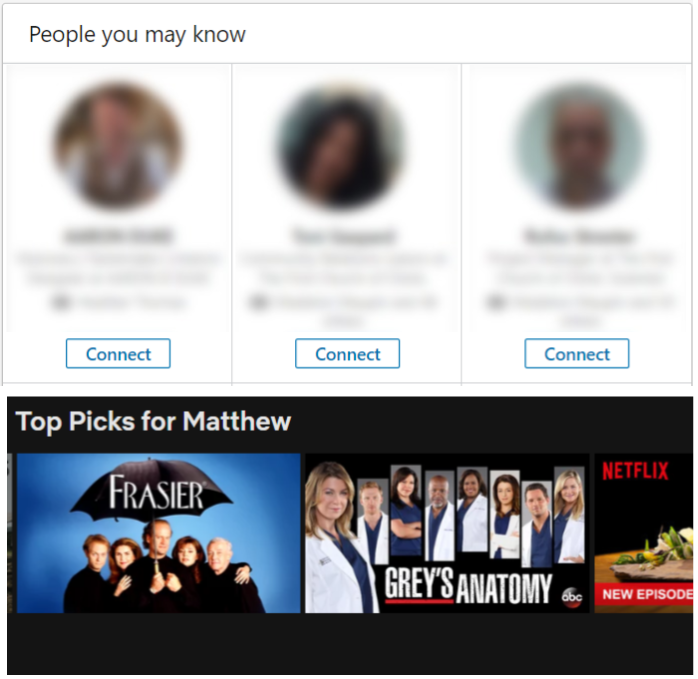

PR Damage

What do you think stung Facebook more: a $661,000 fine, or the story running on the front page of every major international media outlet for hundreds of millions of readers to see (imagine how many of them it was the last straw for, prompting them to deactivate their Facebook accounts)? I would argue that the most powerful tool in GDPR regulators' toolbox is the bad press that comes with a GDPR violation brought against a company, especially in the early years of the regulation, while the topic is still fraught.

A report published in July by TrustArc estimating GDPR compliance rates across the US, UK, and EU found that 57% of firms were motivated to comply with the GDPR by 'customer satisfaction', whereas only 39% were motivated by fines. Of course, a 100-employee business in a London suburb is chiefly concerned with a potential €20,000,000 fine; a penalty that size would simply put it out of business. Large Silicon Valley-based tech firms, on the other hand, with armies of experienced attorneys (Facebook's lawyers have plenty of litigation experience in this area by now), have far more to lose from further bad press than from any fine allowed under the GDPR.

Path Forward

Firms are going to pursue whatever path leads to maximum revenue growth and profitability, even if it means operating in ethical and legal grey areas. If GDPR regulators plan to effectively motivate compliance, they need to focus on the most sensitive pressure points. For some firms, it's the threat of a monetary penalty. For the tech behemoths, it's the threat of another negative front-page headline. Regulators will be at a strategic disadvantage if they don't acknowledge this fact and master their PR strategies.