Are we truly anonymous in public health databases?

By Anonymous | June 18, 2021

Privacy in healthcare data seems to be the baseline for many users when it comes to personal data and privacy, even for those who hold a more relaxed attitude toward privacy policies. We may think that social media, tech, and financial companies could be selling user data for profit, but people tend to trust hospitals, healthcare institutions, and pharmaceutical companies to at least try to keep their users’ data safe. Is that really true? How safe is our healthcare data? Can we really be anonymous in public databases?

As users and patients, we have to share a lot of personal and sensitive information when we see a doctor or healthcare practitioner so that they can provide precise and useful healthcare services. Doctors might know our blood type, potential genetic risks or diseases in our family, pregnancy history, and more. Beyond that, the health institutions behind doctors also keep records of our insurance information, home address, ZIP code, and payment information. Healthcare institutions might hold more comprehensive sensitive and private information about you than any of the other organizations that try to retain as much information about you as they can.

What kind of information do healthcare providers collect and share with third parties? Most healthcare providers should follow HIPAA’s privacy rules. For example, I noticed that Sutter Health states in its user agreement that it follows, or refers to, HIPAA privacy practices.

Sutter’s privacy policy describes how it uses your health information. It states that “We can use your health information and share it with other professionals who are treating you. We may use your health information to provide you with medical care in our facilities or in your home. We may also share your health information with others who provide care to you such as hospitals, nursing homes, doctors, nurses or others involved in your care.” These uses seem reasonable to me.

In addition to these expected uses of your healthcare data, Sutter also mentions that it is allowed to share your information in “ways to contribute to public good, such as public health and research”. Those ways include “preventing disease, helping with product recalls, reporting adverse reactions on medications, reported suspected abuse, …”. One of these uses, public health and research, raises a concern: can we really be anonymous in a public database?

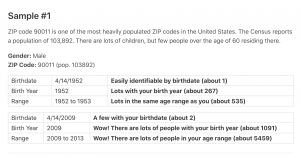

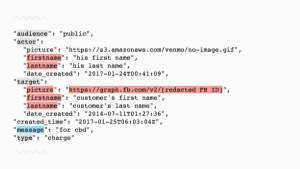

In fact, the answer is no. Most healthcare records can be de-anonymized through information matching! “Only a very small amount of data is needed to uniquely identify an individual. Sixty three percent of the population can be uniquely identified by the combination of their gender, date of birth, and ZIP code alone”, according to a post in the Georgetown Law Technology Review published in April 2017. Thus, it is entirely possible for people with good intentions, such as research teams and data scientists who want to serve the public good, as well as people with bad intentions, such as hackers, to obtain healthcare information from multiple sources, legally or illegally, and aggregate it. They can then de-anonymize the data, especially with the help of today’s computing resources, algorithms, and machine learning.
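To make the idea of information matching concrete, here is a minimal Python sketch. Everything in it is made up for illustration: the records, the names, and the helper functions `quasi_key`, `unique_fraction`, and `link` are hypothetical, and the toy numbers say nothing about real populations. It only shows the mechanics: a health dataset with names removed can be joined to a public list that still carries names, using gender, date of birth, and ZIP code as the matching key.

```python
from collections import Counter

# Hypothetical "de-identified" health records: names removed,
# but gender, date of birth, and ZIP code are still present.
health_records = [
    {"gender": "F", "dob": "1985-03-12", "zip": "94110", "diagnosis": "asthma"},
    {"gender": "M", "dob": "1990-07-04", "zip": "94110", "diagnosis": "diabetes"},
    {"gender": "M", "dob": "1990-07-04", "zip": "94110", "diagnosis": "migraine"},
    {"gender": "F", "dob": "1972-11-30", "zip": "95014", "diagnosis": "hypertension"},
]

# Hypothetical public roster (for example, a voter list) that includes names.
public_roster = [
    {"name": "Alice Example", "gender": "F", "dob": "1985-03-12", "zip": "94110"},
    {"name": "Bob Example", "gender": "M", "dob": "1990-07-04", "zip": "94110"},
    {"name": "Carol Example", "gender": "F", "dob": "1972-11-30", "zip": "95014"},
]

QUASI_IDENTIFIERS = ("gender", "dob", "zip")


def quasi_key(record):
    """Return the (gender, dob, zip) combination for one record."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def unique_fraction(records):
    """Fraction of records whose quasi-identifier combination appears only once."""
    counts = Counter(quasi_key(r) for r in records)
    unique = sum(1 for r in records if counts[quasi_key(r)] == 1)
    return unique / len(records)


def link(health, roster):
    """Pair names with diagnoses when the key is unique in both datasets."""
    health_counts = Counter(quasi_key(r) for r in health)
    roster_counts = Counter(quasi_key(r) for r in roster)
    names = {quasi_key(r): r["name"] for r in roster}
    matches = []
    for r in health:
        k = quasi_key(r)
        if health_counts[k] == 1 and roster_counts.get(k) == 1:
            matches.append((names[k], r["diagnosis"]))
    return matches


reidentified = link(health_records, public_roster)
print(f"Unique on gender + DOB + ZIP: {unique_fraction(health_records):.0%}")
for name, diagnosis in reidentified:
    print(f"{name} -> {diagnosis}")
```

In this toy data, the two records that share the same gender, birth date, and ZIP code cannot be pinned to one person, while the records with a unique combination are matched to a name. That is why removing names alone does not make a dataset anonymous.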

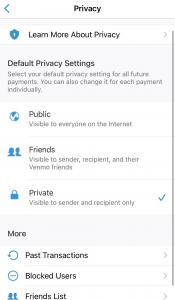

So do companies that hold your healthcare information have to follow some kind of privacy framework? Are there laws that regulate companies holding your sensitive healthcare information and protect the vulnerable public like you and me? One framework that most healthcare providers should follow is the Health Insurance Portability and Accountability Act (HIPAA), which was enacted in 1996. The act specifies who has the right to access what kind of health information, what information is protected, and how it should be protected. It also specifies who must follow these rules: health plans, most healthcare providers, healthcare clearinghouses, and health insurance companies. Organizations that could have your health information but do not have to follow these rules include life insurers, most schools and school districts, state agencies such as child protective services, law enforcement agencies, and municipal offices.

Ordinary people like you and me are vulnerable. We don’t have the knowledge or patience to understand every term in long, jargon-filled user agreements and privacy policies. But what we can and should do is advocate for strong protection of our personal information, especially sensitive healthcare data. Governments and policymakers should also establish and enforce more comprehensive privacy policies that protect everyone and limit the scope of healthcare data sharing, so as to prevent de-anonymization from happening.

________________

References:

1. Stanford Medicine. Terms and Conditions of Use. Stanford Medicine. https://med.stanford.edu/covid19/covid-counter/terms-of-use.html.

2. Stanford Medicine. Datasets. Stanford Medicine. https://med.stanford.edu/sdsr/research.html.

3. Stanford Medicine. Medical Record. Stanford Medicine. https://stanfordhealthcare.org/for-patients-visitors/medical-records.html.

4. Sutter Health. Terms and Conditions. Sutter Health. https://mho.sutterhealth.org/myhealthonline/terms-and-conditions.html.

5. Sutter Health. HIPAA and Privacy Practices. Sutter Health. https://www.sutterhealth.org/privacy/hipaa-privacy.

6. Wikipedia (14 May 2021). Health Insurance Portability and Accountability Act. Wikipedia. https://en.wikipedia.org/wiki/Health_Insurance_Portability_and_Accountability_Act.

7. Your Rights Under HIPAA. HHS. https://www.hhs.gov/hipaa/for-individuals/guidance-materials-for-consumers/index.html.

8. Adam Tanner (1 February 2016). How Data Brokers Make Money Off Your Medical Records. Scientific American. https://www.scientificamerican.com/article/how-data-brokers-make-money-off-your-medical-records/.