Online Security in the Age of Shelter-In-Place

By Percival Chen | March 31, 2020

With shelter-in-place orders taking effect around the country, the economy has continued to tank. One industry that is bucking the trend and surging upwards is videoconferencing, and nowhere is this more apparent than with Zoom Video Communications, better known simply as Zoom.

According to the New York Times, “[e]ven as the stock market has plummeted, shares of Zoom have more than doubled since the beginning of the year” [1]. Some people might say business is “zoom”-ing for the videoconferencing app, but with the rise of Zoom come many concerns, especially with regard to its privacy and security practices.

One recent practice that has gained particular notoriety is “Zoombombing,” in which attackers hijack a meeting and dump whatever content they want onto the viewers [2]. This often leads to entire meetings getting shut down because, once a user has shared their screen, there is no way for the host to immediately kick them out.

Another issue that Zoom has had to deal with is the “recent and sudden surge in both the volume and sensitivity of data being passed through its network” [1]. Millions of Americans, including displaced students across the nation, are shifting to this new online reality and now rely on services such as Zoom to carry on with their jobs and schooling. This surge has raised concern about potential vulnerabilities in Zoom’s current security practices, a concern shared even by the New York attorney general’s office. Communication is vital to the operations of businesses, schools, and our daily lives, and now that so much of that traffic is being funneled through a few providers, it is little wonder that this is becoming more of a concern, especially as more sensitive information is passed along virtually.

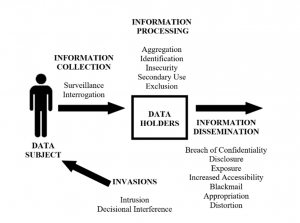

What kind of information is particularly at risk? Personally identifiable information (PII): data that can be used to identify a specific individual. This is basic information that Zoom collects, as do many other companies. It includes your name, home address, email, and phone number, but also your Facebook profile information, your credit or debit card (if you use it to pay for Zoom), information about your job such as your title and employer, general information about your product and service preferences, and information about your device, network, and internet connectivity [3]. With this much data held in one place, an information leak or hack could enable identity theft, with serious consequences. I conducted some UserTesting research about a month ago, and some participants said this potential issue was serious enough for them to investigate further and perhaps even to look for a different service rather than Zoom, given that Zoom had access to a trifecta of a user’s personal, social, and financial information.

Even as this blog post is released, I am sure that Zoom’s privacy policy will continue to evolve as events unfold. It has already been updated several times since February. And while I don’t foresee video communications being shut down, given the essential role they play in the corporate scene, I do expect many (many) new tweaks to the current system, and maybe after the pandemic is over, our world will be better equipped to deal with the shifting landscape of data privacy and security.

Sources:

1. New York Attorney General Looks Into Zoom’s Privacy Practices – The New York Times. https://www.nytimes.com/2020/03/30/technology/new-york-attorney-general-zoom-privacy.html. Accessed 31 Mar. 2020.

2. Lorenz, Taylor. “‘Zoombombing’: When Video Conferences Go Wrong.” The New York Times, 20 Mar. 2020. NYTimes.com, https://www.nytimes.com/2020/03/20/style/zoombombing-zoom-trolling.html.

3. Zoom Privacy Policy – Zoom. https://zoom.us/privacy