A New Generation of Phone Hackers: The Police

By Anonymous | June 18, 2021

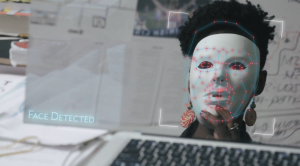

Hackers. I challenge you to picture the “prototypical hacker.” What comes to mind? Is it a recluse in a noticeably worn hoodie, sitting alone in the dark, hunched over a desktop?

That image may once have been popular, but as technology has changed, so has the question of who counts as a “hacker.” One group in particular is increasingly associated with the title and is emerging into the spotlight: law enforcement.

A report by Upturn found that more than 2,000 agencies across all 50 U.S. states have purchased tools to get into locked, encrypted phones and extract their data. The Upturn researchers suggest that U.S. authorities have searched more than 100,000 phones over the past five years. The Department of Justice argues that encrypted digital communications hinder investigations and that any such protections must include a “back door” for law enforcement. Google and Apple have not complied with these requests, but agencies have found the tools they need to hack into suspects’ phones. The use of these tools is justified as necessary for investigating serious offenses such as homicide, child exploitation, and sexual violence.

In July 2020, police in Europe made hundreds of arrests as a result of hacking into an encrypted phone network called EncroChat. EncroChat provides specially altered phones (no camera, microphone, or GPS) with the ability to immediately erase compromising messages. Authorities in Europe hacked into these devices to detect criminal activity. The New York Times reports that police in the Netherlands were able to seize 22,000 pounds of cocaine, 154 pounds of heroin, and 3,300 pounds of crystal methamphetamine as a result of the intercepted messages and conversations.

However, these tools are also being used to investigate offenses that have little or no connection to a mobile device. Many of the offenses logged in the United States are not digital in nature, such as public intoxication, marijuana possession, and graffiti. It is difficult to understand why hacking into a mobile device, an extremely invasive investigative technique, would be necessary for these types of alleged offenses. The findings from the Upturn report suggest that many police departments can tap into highly personal and private data with little oversight or transparency. Only half of the 110 large U.S. law enforcement agencies surveyed have policies on handling data extracted from smartphones, and only 9 of these agencies have policies with substantive restrictions.

An important question remains: what happens to the extracted data after it is used in a forensic report? Few policies clearly define how long extracted data may be retained. The lack of clarity and regulation surrounding this “digital evidence” weakens protections for most Americans. Data extracted from the cloud poses further challenges. Because law enforcement has access to tools for siphoning and collecting data from cloud-based accounts, agencies can view a vast, continuously updated stream of data. Some suggest this continuous flow of data should be treated as a wiretap and require a wiretap order. However, Upturn’s research did not find a single local agency policy that provides guidance on or control over data extracted from the cloud.

Undoubtedly, the ability to hack into phones has given police the leads needed to make many arrests. However, the lack of regulation and general oversight of these actions may also undermine the safety of American citizens. Public institutions have often been thought to lag behind in adopting the latest technologies. Some argue that if criminals are using digital tools to commit offenses, then law enforcement should be one step ahead with these technologies. This raises the question: is it fair or just for law enforcement to have the ability to hack into citizens’ phones?

References:

Benner, K., Markoff, J., & Perlroth, N. (2016, March). Apple’s New Challenge: Learning How the U.S. Cracked Its iPhone. Retrieved from New York Times: https://www.nytimes.com/2016/03/30/technology/apples-new-challenge-learning-how-the-us-cracked-its-iphone.html

Koepke, L., Weil, E., Janardan, U., Dada, T., & Yu, H. (2020, October). Mass Extraction: The Widespread Power of U.S. Law Enforcement to Search Mobile Phones. Retrieved from Upturn: https://www.upturn.org/reports/2020/mass-extraction/

Nicas, J. (2020, October). The Police Can Probably Break Into Your Phone. Retrieved from New York Times.

Nossiter, A. (2020, July). When Police are Hackers: Hundreds Charged as Encrypted Network is Broken. Retrieved from New York Times.