AI technology in my doctor’s office: Have I given consent?

By Anonymous | October 28, 2022

Should patients have the right to know which AI technologies are being used to provide their health care and what the potential risks of those technologies are? Should they be able to opt out of these technologies without being denied health care services? These questions will become critical over the next decade as policymakers start regulating this technology.

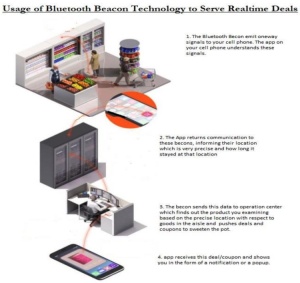

Have you ever wondered how doctors and nurses spend less and less time with you in the examination room these days, yet can still make a diagnosis faster than ever before? You can thank the proliferation of AI technology in the healthcare industry. To clarify, we are not referring to the Alexa or Google Home device your doctor may use to set reminders. We are referring to the wide array of AI tools and technologies that power every aspect of the healthcare system, be it diagnosis and treatment, routine admin tasks, or insurance claims processing.

Image Source: Adobe Stock Image

As explained by Davenport et al. in their Future Healthcare Journal publication, AI technologies in healthcare have the potential to bring tremendous benefits to the healthcare community and patients over the next decade. They can not only help automate several tasks that previously needed the intervention of trained medical staff but also significantly reduce costs. The technology clearly has great potential and can be extremely beneficial to patients. But what are its risks? Will it be equally fair to all patients, or will the technology benefit some groups of people over others? In other words, is the AI technology unbiased and fair?

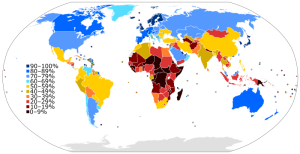

Even though full-scale adoption of AI in healthcare is expected to be 5-10 years away, there is already ample documented evidence of a lack of fairness in healthcare AI. According to this Positively Aware article, a 2019 study showed that an algorithm used by hospitals and insurers to manage care for 200 million people in the US was less likely to refer Black people for extra care than white people. Similarly, a Harvard School of Public Health article on algorithmic biases describes how the Framingham Heart Study's cardiovascular risk score performed much better for Caucasian patients than for Black patients.

Unsurprisingly, there is very little regulation or oversight of these AI tools in healthcare. A recently published ACLU article on how algorithmic decision-making in healthcare can deepen racial bias states that, unlike medical devices that are regulated by the FDA, “algorithmic decision-making tools used in clinical, administrative, and public health settings — such as those that predict risk of mortality, likelihood of readmission, and in-home care needs — are not required to be reviewed or regulated by the FDA or any regulatory body.”

Image Source: Adobe Stock Image

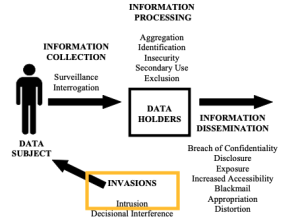

While we trust that AI researchers and the healthcare community will continue to reduce algorithmic biases and strive to make healthcare AI fair for all, the question we ask ourselves today is this: should patients have the right to consent to, contest, or opt out of AI technologies in their medical care? Given that there is so little regulation and oversight of these technologies, we strongly believe that the right way forward is to create policies that treat the use of AI technology in healthcare in the same way as a surgical procedure or drug treatment. Educating patients about the risks of these technologies and getting their informed consent is critical. Healthcare providers should clearly explain to patients which AI technologies are being used as part of their care and what the potential risks and drawbacks are, and should seek informed consent from patients before opting them in. Specific data on algorithmic accuracy across various demographic groups should also be made available to patients so that they can clearly assess the risk applicable to them. If patients are not comfortable with these technologies, they should have the right to opt out and still be entitled to an alternative course of treatment when possible.
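
To make the idea of accuracy data broken down by demographic group more concrete, here is a minimal Python sketch of how such a per-group summary might be computed. Everything in it is an assumption made for illustration: the function name `accuracy_by_group`, the group labels, and the toy records are hypothetical and do not come from any real tool, study, or patient data.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: share of correct predictions}.

    Each record is a (group, predicted, actual) tuple.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy records, invented for illustration only (not real patient data).
# Each tuple is (demographic group, algorithm's prediction, recorded outcome).
toy_records = [
    ("group_a", 1, 1),
    ("group_a", 0, 0),
    ("group_a", 1, 1),
    ("group_a", 0, 1),
    ("group_b", 1, 0),
    ("group_b", 0, 0),
    ("group_b", 0, 1),
    ("group_b", 1, 1),
]

report = accuracy_by_group(toy_records)
for group, accuracy in sorted(report.items()):
    print(f"{group}: {accuracy:.0%} of predictions matched the recorded outcome")
```

A gap between groups in a summary like this is the kind of information a patient could weigh before consenting. Overall accuracy is only one possible measure, and a real disclosure would likely need others, such as how often the tool misses a condition in each group.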