Assessing Clearview AI through a Privacy Lens

By Jonathan Hilton | February 28, 2020

The media has been abuzz lately about the end of privacy, driven largely by the capabilities of Clearview AI. The New York Times drew attention to the startup in an article titled ‘The Secretive Company That Might End Privacy as We Know It’, which details the secretive nature of a company with expansive facial recognition capabilities. Privacy is a complicated subject that requires extensive context to understand what is and is not acceptable in a society. In fact, privacy has no universal meaning within society or within the academic community. What counts as a privacy violation in one context may not be considered one in another. Given this ambiguity, we can use Solove’s Taxonomy to deconstruct the context of vast facial recognition capabilities and better understand the privacy concerns with Clearview AI.

Before examining Clearview AI’s privacy concerns with Solove’s Taxonomy, we need to address what Clearview AI is doing with its facial recognition capability. Clearview AI’s CEO, Hoan Ton-That (see figure 1), started the company in 2017. The basic premise of the company is that its software can take a photo and use a database composed of billions of photos to identify the person in it. How did the company build this database without the consent of each person whose likeness is in it? Clearview AI has scraped pictures from Facebook, YouTube, Venmo, and many other sites to compile its massive database. The company claims the information is ‘public’ and thus does not violate any privacy laws. So who exactly uses this software? Clearview maintains that it works with law enforcement agencies and that its product is not meant for public consumption.

Clearview CEO Hoan Ton-That

More information about Clearview AI can also be found at their website: https://clearview.ai/

After the Times published the article bringing Clearview AI’s practices to light, the company faced intense public backlash over privacy concerns. Solove’s Taxonomy can help us decompose exactly what those privacy concerns are.

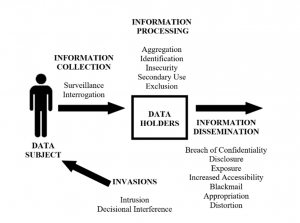

Solove’s Taxonomy was published by Daniel J. Solove in January 2006. The basic components of the Taxonomy are shown in figure 2 below.

Solove’s Taxonomy

In the case of Clearview AI, the entire public is the Data Subject, or at least those who have either posted videos or pictures or appeared in videos or pictures posted by others. So even if a person never posted to social media or a video site, their likeness may be part of the database simply because someone else posted their picture or caught them in the background of a photo. Under Solove’s Taxonomy, an obvious source of harm is surveillance. Locations, personal associations, activities, and preferences could be derived from running a person’s photo through the system and then viewing all of the results. While Clearview AI claims on its website that its product is meant to search and not to surveil, it is very difficult to see how the data could not be used for surveillance. The distinction between search and surveillance is a hard one to draw, since searches can quickly produce results that amount to surveillance.

In terms of information processing, the entire purpose of the software is to aggregate data in order to identify individuals. A capability of this kind raises serious concerns about how it and the underlying data will be used. While Clearview maintains that its software serves the needs of law enforcement, the question of secondary use is especially troubling. How long until law-enforcement-adjacent organizations, such as bounty hunters, private security firms, or private investigators, gain access to the software? What other government organizations will gain access to the same capability for purposes such as taxes, welfare disbursement, airport surveillance, or government employment checks? The road to secondary uses of data by the government is well paved by historical precedent, such as the use of genealogical DNA for criminal investigations or Social Security numbers becoming de facto government ID numbers. Furthermore, what happens when Clearview AI is purchased and used by governments to repress their people? More authoritarian regimes could use the software to fully track their citizens and use it against anyone advocating human rights or democracy.

Pictures can be used for much more than law enforcement

Information dissemination poses a further risk: Clearview AI’s results could be distributed to others who would use them for nefarious purposes. For instance, if a bad actor in a government agency runs a search on a friend or co-worker and passes the results to someone else, what type of harm could that cause the person whose images were searched? Additionally, what happens if the software is hacked or reverse engineered so that the general public can run the same searches, or if searches are sold on the dark web? It is not hard to imagine a person being blackmailed over their search results. We have already seen hackers encrypt hard drives to hold people to ransom for either money or explicit images of the computer owner, so this would be an extension of the same practice.

Finally, invasion is a real threat to all data subjects. A search of a person’s image that reveals not only who they are but also what they are doing, when they are doing it, and with whom could be used in many ways to affect the person’s day-to-day decision making and activities. For instance, if this information can be sold to retailers or marketers, a person may find that a targeted advertisement appears at just the right place and time to influence their decision. Furthermore, the aforementioned bad actor scenario could lead to direct stalking or a home invasion if significant details are known about a person. A few iterations of this technology could see the software’s capability mounted on an AR/VR IoT device that identifies people, and perhaps some of their personal information, as they are seen in public. In short, a person could wear the device and see the identity of each person who passes them in a mall or on a street.

In conclusion, Solove’s Taxonomy does help deconstruct the privacy concerns associated with Clearview AI’s facial recognition capability. The serious concerns with the software are unlikely to fade anytime soon because, unfortunately, once this type of technology is developed, it rarely disappears. Society will have to continue to grapple with the growing power and capability of facial recognition.

References:

https://www.popularmechanics.com/technology/security/a30613488/clearview-ai-app/

https://en.wikipedia.org/wiki/Clearview_AI

Solove, Daniel J., ‘A Taxonomy of Privacy’, University of Pennsylvania Law Review, Jan 2006.