Disparities faced by people with sickle cell disease: The case for additional research funding.

By Anonymous | September 22, 2021

September is Sickle Cell Awareness Month! How aware are you?

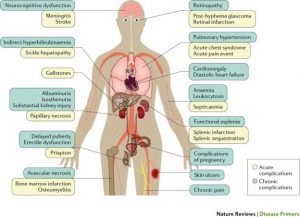

Sickle cell disease (SCD) is a group of inherited red blood cell disorders that cause red blood cells to take on a sickle shape. These cells become hard and sticky, have a decreased ability to take up oxygen, and have a short life span in the body. As a result, people with SCD face many lifelong negative health outcomes and disabilities, including stroke (even in children), acute and chronic pain crises, mental health issues, and organ failure.

Sickle cell disease clinical complications. Source: Kato, G., Piel, F., Reid, C. et al. Sickle cell disease. Nat Rev Dis Primers 4, 18010 (2018). https://doi.org/10.1038/nrdp.2018.10

The issues unfortunately do not end there.

In the U.S., 90% of people living with SCD are Black/African American, and they face significant health disparities and stigmatization. SCD patients frequently describe poor interactions with the healthcare system and its personnel. SCD pain crises (which can be more intense than childbirth) require strong pain relievers such as opioids. Unfortunately, few medical facilities have staff trained in managing SCD pain crises or other chronic issues related to SCD. There is a serious lack of respect for persons: people coming in for SCD pain crises are often treated as though they are drug seekers and are denied the care they need. Black people have also expressed substantial mistrust of the U.S. healthcare system, given past abuses in recent history (e.g., the failure to obtain informed consent from Black participants in the Tuskegee Syphilis Study).

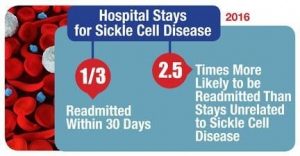

Current estimates (2016) place the median life expectancy of people with SCD at 42–47 years. And even though SCD is a rare disease in the U.S. (approximately 90,000–100,000 people), $811.4 million in healthcare costs can be attributed to 95,600 hospital stays and readmissions for SCD (2016 estimate).

Sickle cell disease-related hospital readmission is high in the U.S. Source: AHRQ News Now, September 10, 2019, Issue #679

Why is this happening?

There is not enough research or education surrounding SCD. Sickle cell advocacy groups and networks exist, but they are underfunded, and SCD attracts less public interest than many other health issues. For example, Farooq et al. (2020) compared research funding for SCD with funding for cystic fibrosis (CF), which affects approximately 30,000 people in the U.S. They found that U.S. federal research funding averaged $812 per affected person for SCD (which predominantly affects Black people), compared with $2,807 per affected person for CF (which predominantly affects white people). What about funding from private foundations? The gap is even wider: on average $102 per person for SCD versus $7,690 per person for CF.

The result?

There is a lack of research and data on SCD, its potential new treatments, and the factors that affect it. To improve quality of care, we need to understand the complex intersectionality at play with SCD: how do available treatments vary by SCD genotype? By sexual or gender minority group? By region? Without such information, there is substantial uncertainty that no data model can make up for, and residuality occurs: people with SCD are simply left out of the decisions being made. Without additional funding, gaps in SCD training will persist, and the hidden biases of health care workers will continue to harm the patient-provider experience.

Even among well-educated researchers, there is a notion that SCD has been "cured" and is no longer a priority. Unfortunately, very few people meet the criteria for potentially curative treatments such as bone marrow transplant or gene therapy (criteria can include having a closely matched donor or a specific SCD genotype), and these procedures are extremely expensive and carry a risk of death.

Will more funding for SCD research and advocacy really help? Consider that between 2008 and 2018, SCD research produced over 70% more publications than CF research, yet SCD gained only one FDA-approved drug (to serve a population of about 90,000) while CF gained four (to serve a population of about 30,000).

How can we do better?

Stronger advocacy for SCD as a priority, and greater awareness among lawmakers, researchers, and the general public, are important. Given the huge disparity in private funding between SCD and CF, Farooq et al. suggest that increased philanthropy through private foundations may be one way to strengthen SCD advocacy and research. Consider donating to established SCD foundations, or contact your elected officials to explain what SCD is and why it matters. Share materials from World Sickle Cell Day or Sickle Cell Awareness Month (September). Support a summer camp for kids with SCD, where they can have fun and feel empowered while living with SCD. Everything counts. Together, we can reduce disparities and improve health outcomes for people with SCD.

Sources:

- What is Sickle Cell Disease? https://www.cdc.gov/ncbddd/sicklecell/facts.html

- Characteristics of Inpatient Hospital Stays Involving Sickle Cell Disease, 2000-2016. https://hcup-us.ahrq.gov/reports/statbriefs/sb251-Sickle-Cell-Disease-Stays-2016.jsp

- Farooq F, Mogayzel PJ, Lanzkron S, Haywood C, Strouse JJ. Comparison of US Federal and Foundation Funding of Research for Sickle Cell Disease and Cystic Fibrosis and Factors Associated With Research Productivity. JAMA Netw Open. 2020;3(3):e201737. doi:10.1001/jamanetworkopen.2020.1737

- Sickle Cell Disease Association of America https://www.sicklecelldisease.org/

- Sickle Cell Disease Summer Camps https://www.cdc.gov/ncbddd/sicklecell/features/sickle-cell-and-summer-camp.html

- World Sickle Cell Day https://www.cdc.gov/ncbddd/sicklecell/features/world-sickle-cell-day.html

- September is Sickle Cell Awareness Month https://www.redcross.org/about-us/news-and-events/news/2021/september-is-sickle-cell-awareness-month.html

- The U.S. Public Health Service Syphilis Study at Tuskegee https://www.cdc.gov/tuskegee/timeline.htm