A Problem to A Dress: Algorithmic Transparency and Appeal

By Adam Johns | April 13, 2020

Once upon a time, a million years ago (Christmas 2019), people cared about things like buying fashionable gifts for their friends and family, rather than access to bleach. At the time, I was trying to buy a dress for my partner from an online store I’d shopped with in the past. Several days before the cutoff for Christmas delivery, I received an unceremonious computer-generated email saying that my order had been cancelled. No sweat, I thought, and repeated the purchase. Cancelled again. As the deadline for the holidays approached, I called the merchant, who informed me that my order had been flagged by an algorithm as a security risk, that my purchase had been cancelled, and that there was in fact nobody I could appeal to and no way of determining what factors had contributed to this verdict. I hung up the phone, licked my wounds, and moved on to other merchants for my last-minute shopping.

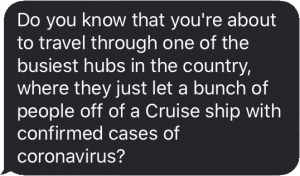

Upon later reflection, chastened by a nearly missed holiday gift deadline, I mused about what could possibly have resulted in the rejection. Looking back over my past purchases, it became apparent that in a year or two of shopping with this particular retailer, I hadn’t actually bought any women’s clothes. Perhaps it was the sudden change from menswear to dresses that led the algorithm to flag me (a not very progressive criterion for an otherwise progressive-seeming retailer). Whatever the reason, this frivolous example got me thinking about some very serious aspects of algorithmic decision making. What made this particular example so grating? Firstly, the decision was not transparent: I wasn’t informed that an algorithm had flagged my purchase until I had made a number of calls to customer service. Secondly, I had no recourse to appeal: even after I called up, credit card info and personal identification in hand, nobody at the company was willing or able to overturn the decision. While such an algorithmic “hard no” was easy to shake off for a gift purchase, imagining the same approach applied to a credit decision, an insurance purchase, or a college application was disconcerting.

In 2020, algorithmic adjudication is becoming an increasingly frequent part of life. Machine learning may be broadly accurate in the aggregate, but individual decisions can always suffer from false positives and false negatives. When such a decision is applied to customer service or security, bad decisions can alienate customers and lead previously loyal ones to take their business elsewhere. When algorithms affect more consequential social matters like a person’s access to health care, housing, or education, a poor prediction carries much higher stakes. Instead of just resulting in disappointed customers writing snarky blog posts, such decision making can amplify inequity, reinforce detrimental trends in society, and create self-reinforcing feedback loops of diminished individual and societal potential.

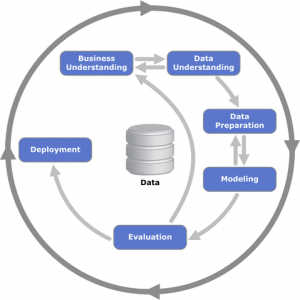

The growing importance of machine learning in commercial and government decision making isn’t likely to decline any time soon. But to apply algorithms for maximum benefit, organizations should ensure that algorithmic decision making embeds transparency and a right to appeal. Let people know when they’ve been flagged, and what factored into the decision. Give them the right to speak to a person and correct the record if the decision is wrong (Crawford and Schultz’s concept of algorithmic due process offers a solid base for any organization trying to apply algorithms fairly). As a bonus, letting the subjects of algorithmic decision making appeal offers a tantalizing opportunity to the data scientist: more training data to improve the algorithm. While it requires more investment, and a person on the other end of a phone, transparency and a right to appeal can result in a rare win-win for algorithm designers and the people to whom those algorithms are applied, and ultimately lead us toward a more perfect future of algorithmic coexistence.
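
For the more technically inclined, here is a minimal Python sketch of what transparency plus appeal might look like in practice. Everything in it is hypothetical: the rules in `screen_order`, the order fields, and the `AppealLog` structure are my own illustrative assumptions, not how any real retailer's system works. The point is only that a flag can carry its reasons with it, and that a human override can be saved as a labeled example for later retraining.

```python
from dataclasses import dataclass, field


@dataclass
class Decision:
    order_id: str
    flagged: bool
    reasons: list[str]        # human-readable factors that can be shown to the customer
    overturned: bool = False  # set to True when a human reviewer reverses the flag


@dataclass
class AppealLog:
    # Each entry is (order features, is_fraud label decided by a human reviewer).
    labeled_examples: list[tuple[dict, bool]] = field(default_factory=list)


def screen_order(order: dict) -> Decision:
    """Hypothetical rule-based screen that always records why it flagged an order."""
    reasons = []
    if order["category"] not in order["past_categories"]:
        reasons.append(f"first purchase in category '{order['category']}'")
    if order["amount"] > 3 * order["typical_amount"]:
        reasons.append("amount is more than 3x the customer's typical order")
    return Decision(order["id"], flagged=bool(reasons), reasons=reasons)


def appeal(decision: Decision, order: dict, reviewer_says_legitimate: bool,
           log: AppealLog) -> Decision:
    """Let a human reviewer overturn a flag and keep the outcome as training data."""
    if not decision.flagged:
        return decision  # nothing to appeal
    decision.overturned = reviewer_says_legitimate
    # The reviewer's verdict becomes a labeled example (True means fraud).
    log.labeled_examples.append((order, not reviewer_says_legitimate))
    return decision


# Illustrative order loosely modeled on my dress purchase; the values are made up.
order = {
    "id": "A-1001",
    "category": "dresses",
    "past_categories": {"menswear"},
    "amount": 80.0,
    "typical_amount": 60.0,
}
log = AppealLog()
decision = screen_order(order)
print(decision.flagged, decision.reasons)  # the customer can be told exactly why
decision = appeal(decision, order, reviewer_says_legitimate=True, log=log)
print(decision.overturned, len(log.labeled_examples))
```

A real system would likely use a learned model rather than hand-written rules, and explaining a model's output is harder than listing which rules fired. The design choice worth keeping either way is that the decision object carries its reasons, and that the appeal path writes back to a store the data scientist can train on.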

Reference:

Kate Crawford & Jason Schultz, Big Data and Due Process: Toward a Framework to Redress Predictive Privacy Harms, 55 B.C.L. Rev. 93 (2014), https://lawdigitalcommons.bc.edu/bclr/vol55/iss1/4