Data-Driven Policy Making in the Era of ‘Truth Decay’

By Silvia Miramontes-Lizarraga

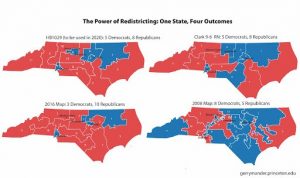

Advances in digital technology have made it possible to collect, store, and analyze large amounts of data on many subjects of interest; such datasets are commonly known as Big Data. One effect of this field has been the growing use of data-driven decision making in business, technology, and sports, where these methods have been shown to boost innovation, productivity, and economic growth. But if data is more available than ever, why do we still lack data-driven methods in policy making that target issues of social value?

Background on Policy Making

Society expects the government to deliver solutions to social issues; its central challenge, therefore, is to improve the quality of life of its constituents. Public policy is a goal-oriented course of action that encompasses a series of steps: 1) Problem Recognition, 2) Agenda Setting, 3) Policy Formulation, 4) Policy Adoption, 5) Policy Implementation, and 6) Policy Evaluation. Because this type of decision making involves numerous participants with differing views and interests, policies cannot be implemented successfully if they are driven by ideology alone. The process requires government officials to be transparent, accountable, and effective.

So how could these methods help?

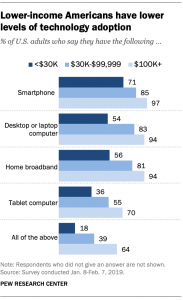

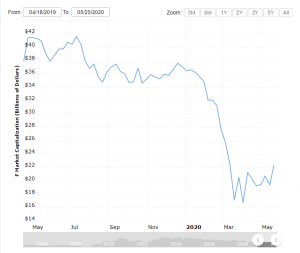

The absence of data-driven methods is especially conspicuous in efforts to address the many problems of our educational system. For example, government officials could use data to identify the school districts most in need of additional resources. Similarly, in healthcare, they could compare plans to determine the most effective procedures and the most essential expenditures during a global pandemic. By successfully adopting these technologies, our officials could begin closing ‘data gaps that have long impeded effective policy making’. To achieve this, however, both government officials and their constituents must develop an awareness and appreciation of concrete, unbiased data.

Why ‘Truth Decay’ complicates things

Although data-driven methods have the potential to better inform policy makers, we face a hurdle: the ongoing rise of ‘Truth Decay’, a phenomenon described by a RAND initiative that aims to restore the role of facts and analysis in public life.

In recent years, we have heard a great deal about misinformation and fake news; more importantly, we have reached a point where people no longer agree on basic facts. And if we cannot agree on basic facts, how can we possibly address social issues such as education, healthcare, and the economy?

Whether we hear it from a friend in the middle of a political comment war on Facebook or in a chance conversation on the street, we have come to realize that people often disagree even on basic, objective facts. More often than not, friends send us long texts recounting their latest social media debacle with ‘someone’ who does not seem to ‘agree’ with the facts presented; the facts are drowned out by opinions. The line between opinion and fact blurs to the point where facts are no longer disputed but simply rejected or ignored.

So what now? How do we actively fight this decay, uphold the validity of facts, and demystify quantitative methods so that we can influence our representatives and, ideally, help them become better-informed policy makers?

First Steps

When we encounter someone with different political views, say, a member of an opposing party, we can try to persuade them to look at the issue from another perspective. We might also gently point out the gap between facts and beliefs.

We can also actively avoid tribalization. Rather than isolating ourselves from groups with opposing political views, we can try to build understanding and empathy.

We can also change our attitude toward ourselves and others. We must acknowledge that sometimes we need to change our beliefs in order to grow, which means that making our beliefs part of our identity is not the best way to fight this ongoing ‘Truth Decay’. It is important to remember that our beliefs may change over time; we are not defined by them.

Lastly, we can embrace a new attitude: call yourself a truth seeker, try your best to remain impartial, and be curious. Keeping your mind open might allow you to learn more about yourself and others.

Sources:

RAND Study