Air Tags: Finding your Keys or your Sensitive Information?

By Chelsea Shu | February 7, 2020

As people grow more reliant on features such as Apple’s Find My iPhone to locate a phone or Mac laptop, many wish there were also a way to find other commonly misplaced items, such as wallets and keys. Apple may have a solution.

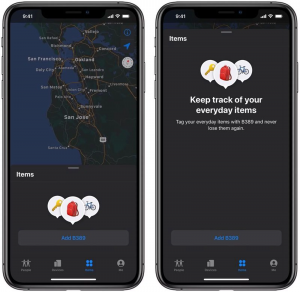

Apple is reportedly developing a new product called Air Tags that will track items using Bluetooth technology. The Air Tags are expected to be small, white tags that attach to important items with an adhesive.

Each tag will contain a tracking chip that connects it to an iPhone app called “Find My,” so users can locate and track all of their tagged items from their phone. Users will also be able to press a button to make an Air Tag emit a sound, making a nearby item easier to find.

Privacy Concerns

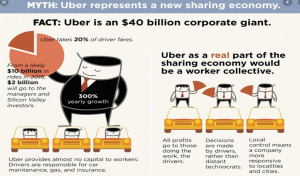

While this product may offer consumers a seamless way to keep track of their items, it may be too good to be true. Apple already collects a multitude of personal data: what music we listen to, what news we read, and what apps we use most often. Bringing more items such as wallets and keys into Apple’s tracking system gives Apple greater visibility into a person’s daily activities and their locations throughout the day. That increased surveillance means more access to a person’s private life and, possibly, to sensitive information.

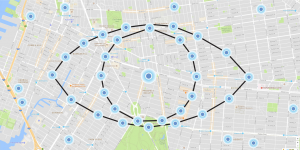

While Apple claims that it does not sell location information to advertisers, its privacy policy states that its Location Services function “allows third-party apps and websites to gather and use information based on current location.” This raises a concern because it enables third parties to collect this data for their own purposes, and it is unclear what they will do with it. With this data, third-party companies could track when Air Tag users arrive at and leave places, as well as monitor their habits.

Furthermore, the consequences could be serious if this data lands in the hands of a malicious person. A product like Air Tags opens up the possibility that data will be shown to or accessed by someone it was never intended for. This can lead to private information being exposed or used against the person being tracked.

Apple also claims that it scrambles and encrypts the data it collects. In reality, though, anonymizing data is quite difficult. While Apple claims that the location data it collects “does not personally identify you,” combining the large volume of data that Apple holds on each person could make it possible to fit the puzzle pieces together and identify that person, violating their privacy and exposing their sensitive information.
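To make the "puzzle pieces" idea concrete, here is a minimal Python sketch of how records with names removed might still be linked back to individuals. Everything in it is made up for illustration: the tokens, street names, people, and the `reidentify` helper are hypothetical and do not reflect how Apple actually stores or processes location data. It only shows the general idea that a pair of frequently visited places can act like a fingerprint.

```python
from collections import Counter

# Hypothetical "anonymized" location records: names replaced by random tokens.
anonymized_traces = {
    "token_a1": {"night_area": "Elm St", "day_area": "Main St"},
    "token_b2": {"night_area": "Elm St", "day_area": "Harbor Rd"},
    "token_c3": {"night_area": "Oak Ave", "day_area": "Main St"},
}

# Hypothetical outside knowledge, e.g. from a public profile or a neighbor.
known_people = {
    "Alice": {"home": "Elm St", "work": "Harbor Rd"},
    "Bob": {"home": "Oak Ave", "work": "Main St"},
}


def reidentify(traces, people):
    """Link each known person to a token when their (home, work) pair
    matches exactly one anonymized trace."""
    pair_counts = Counter(
        (t["night_area"], t["day_area"]) for t in traces.values()
    )
    matches = {}
    for name, info in people.items():
        pair = (info["home"], info["work"])
        if pair_counts[pair] == 1:  # a unique pair identifies one trace
            matches[name] = next(
                token
                for token, t in traces.items()
                if (t["night_area"], t["day_area"]) == pair
            )
    return matches


matches = reidentify(anonymized_traces, known_people)
print(matches)
```

In this toy data set, no single location is unique (two tokens spend nights on Elm St, two spend days on Main St), yet each home-and-work pair belongs to exactly one token, so both names can be matched to their "anonymous" records.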

In summary, Apple’s Air Tags might seem like a convenient, useful idea, but what people may not realize is that opting in to such a product opens the door to increased data surveillance.

References

https://www.macrumors.com/guide/airtags/

https://www.businessinsider.com/apple-airtags-new-iphone-product-rumors-release-date-features-2020-2

https://www.usatoday.com/story/tech/talkingtech/2018/04/17/apple-make-simpler-download-your-privacy-data-year/521786002/

https://support.apple.com/en-us/HT207056