When humans lose from “AI Snake Oil”

By Joe Butcher | December 6, 2019

No matter where you work or live, you don’t have to go far to hear someone talk about the benefits of AI (yes, that’s Artificial Intelligence I’m referring to). What do I mean exactly by AI? Well, for the most part, machine learning (ML for all you acronym lovers), but that’s a topic for another blog post. While everyone loves to talk about building AI tools and systems, one can argue we aren’t talking enough about the human lives affected by AI-aided decisions.

Although I am two years into UC Berkeley’s Master of Information and Data Science (MIDS) program, I realize I have far more to learn about AI and data science. In fact, the more I learn, the more I realize I don’t know. What frightens me is the number of AI-based companies being created and funded to influence decisions that have a real impact on people’s lives. It can be challenging and time-consuming even for professionals trained in data science to judge the validity of the tools these companies are creating, not to mention the less data-savvy individuals who make up the majority of the workforce.

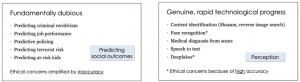

Arvind Narayanan, an Associate Professor of Computer Science at Princeton, recently gave a talk at MIT titled “How to recognize AI snake oil” [1, 2]. In this presentation, Professor Narayanan contrasts the hope that AI can be applied successfully in certain domains with the reality of its effectiveness (or lack thereof). He goes on to distinguish domains where AI is making genuine progress from domains where AI is not only “fundamentally dubious” but also raises ethical concerns because of its inaccuracy. Furthermore, he argues that for predicting social outcomes, AI is no better than manual scoring using a few features.

While none of this is likely to shock anyone in the field, it does raise the question of what is being done to protect society from the negative consequences. With policy and regulation struggling to keep pace with technological advancement, some have argued that self-regulation will be enough to combat the likes of “AI Snake Oil”. Neither seems to be progressing fast enough to protect people from poor decisions made by algorithms. Moreover, political turbulence (both in the U.S. and around the world) and the potential for economic disruption across industries leave many people feeling both uneasy and hopeless.

Ethical frameworks and regulations have a proven track record of protecting people from harm. Although the current situation is daunting, the data science community should challenge itself to stay committed to work grounded in values and ethics. It can be tempting to reap the economic benefits of building solutions that customers are willing to pay for, but it is critical that we understand whether our solutions follow the basic principles of the Belmont Report [3], which we started this class with:

- Respect for persons: Respect people’s autonomy and avoid deception

- Beneficence: “Do no harm”

- Justice: Fairly administer procedures and solutions

We can’t control everything that happens in the crazy world out there, but we can control how we apply our newly acquired data science toolkit. We should all choose wisely.

References

[1] Narayanan, A. “How to recognize AI snake oil.” https://www.cs.princeton.edu/~arvindn/talks/MIT-STS-AI-snakeoil.pdf

[2] Sagar, R. “The Snake Oil Merchants of AI: Princeton Professor Deflates the Hype.” https://analyticsindiamag.com/ai-hype-algorithms-bias-princeton-professor-talk-mi/

[3] U.S. Department of Health and Human Services, Office for Human Research Protections. “The Belmont Report.” https://www.hhs.gov/ohrp/regulations-and-policy/belmont-report/index.html