Data Privacy and the Chinese Social Credit System

“Keeping trust is glorious and breaking trust is disgraceful”

By Victoria Eastman | February 24, 2019

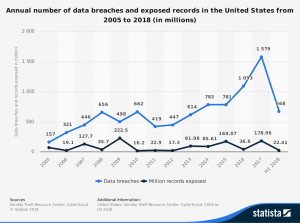

Recently, the Chinese Social Credit System has been featured on podcasts, blogs, and news articles in the United States, often highlighting the Orwellian feel of the imminent system China plans to use to encourage good behavior amongst its citizens. The broad scope of this program raises questions about data privacy, consent, algorithmic bias, and error correction.

What is the Chinese Social Credit System?

In 2014, the Chinese government released a document entitled "Planning Outline for the Construction of a Social Credit System." The system uses a broad range of public and private data to rank each citizen on a scale from 0 to 800. Higher ratings offer citizens benefits like discounts on energy bills, more matches on dating websites, and lower interest rates. Low ratings incur such punishments as the inability to purchase plane or train tickets, barring you and your children from universities, and even pet confiscation in some provinces. The system has been undergoing testing in various provinces around the country, with different implementations and properties, but the government plans to take the rating system nationwide in 2020.

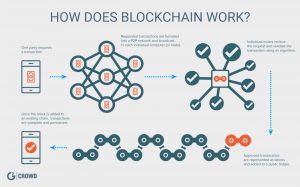

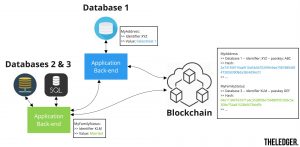

The Chinese government has not explicitly detailed how the system works; however, details have emerged since the policy was announced. Data is collected from a number of private and public sources: chat and email data; online shopping history; loan and debt information; smart devices, including smartphones, smart home devices, and fitness trackers; criminal records; travel patterns and location data; and a nationwide network of millions of cameras that watch Chinese citizens. Even your family members and other people you associate with can affect your score. The government has signed up more than 44 financial institutions and has issued at least 8 licenses allowing private companies such as Alibaba, Tencent, and Baidu to submit data to the system. Algorithms then process the combined dataset to generate a single credit score for each citizen.

This score will be publicly available on a number of platforms, including newspapers, online media, and even some people's phones: when you call a person with a low score, you may hear a message telling you that the person you are calling has low social credit.

What does it mean for privacy and consent?

On May 1, 2018, China's Personal Information Security Specification took effect: a set of non-binding guidelines to govern the collection and use of Chinese citizens' personal data. The guidelines appear similar to the European GDPR, with some notable differences, namely a focus on national security. Under these rules, individuals have full rights to their data, including the right to erasure, and companies must obtain consent for any use of the personal data they collect.

How do these guidelines jibe with the social credit system? The Chinese government has not explicitly outlined the connection between the two policies, but at first blush there appear to be some key conflicts between them. Do citizens have erasure power over their poor credit history or other details that negatively affect their score? Are companies required to ask for consent before sending private information to the government if it is to be used in the social credit score? If the social credit score is public, how much control do individuals really have over the privacy of their data?

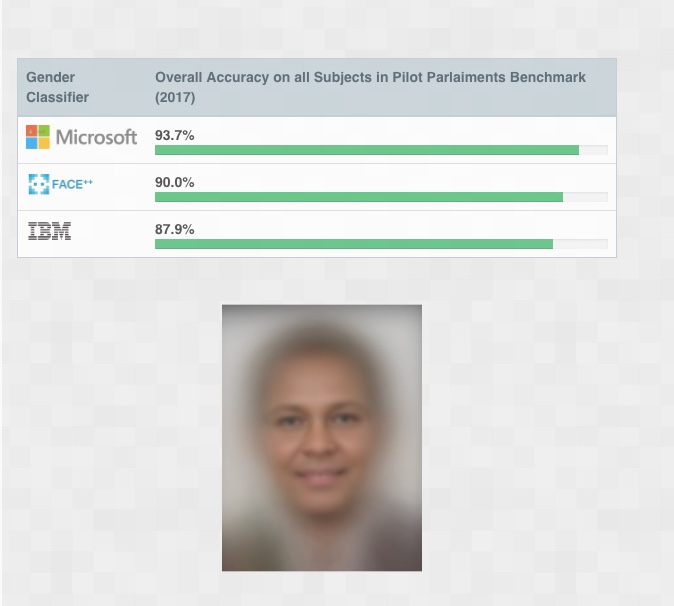

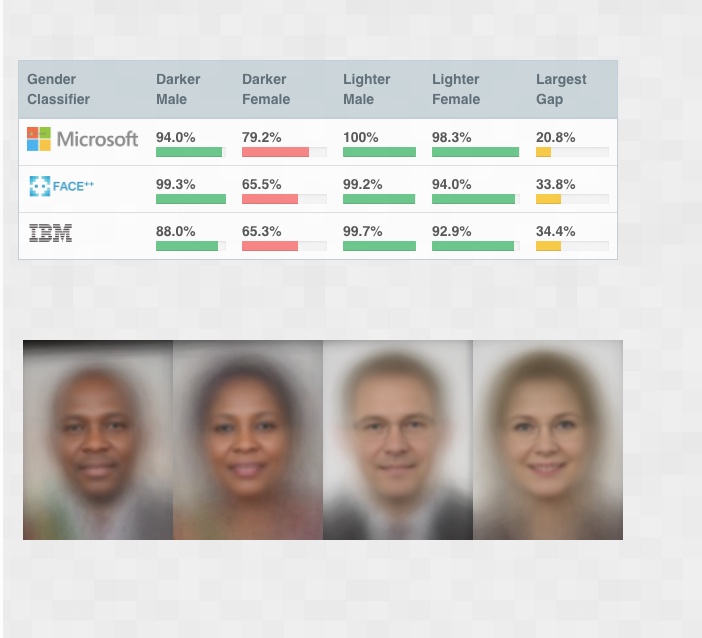

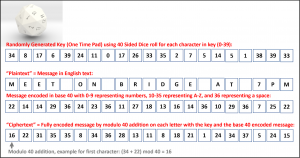

Other concerns have been raised about the algorithms themselves. How are individual actions weighted by the algorithm? Are some 'crimes' worse than others? Does recency matter? How can incorrect data be fixed? Is the government removing demographic information like age, gender, or ethnicity, or could those criteria unknowingly introduce bias?
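The government has not published how its scoring algorithms work, so the toy model below is purely hypothetical. It only illustrates why the questions about weighting, recency, and error correction matter. The event categories, the weights, the base score of 600, and the one-year half-life are all invented for illustration; only the 0 to 800 range comes from reporting on the system.

```python
from dataclasses import dataclass

# HYPOTHETICAL model: nothing here reflects the real system's data or rules.
@dataclass
class Event:
    kind: str        # hypothetical category, e.g. "late_payment"
    age_days: float  # how long ago the event happened

# Hypothetical weights: negative values are penalties, positive are rewards.
# Choosing these numbers is exactly the "how are actions weighted?" question.
WEIGHTS = {
    "late_payment": -40.0,
    "traffic_violation": -15.0,
    "volunteering": 20.0,
}

BASE_SCORE = 600.0                  # hypothetical starting score
SCORE_MIN, SCORE_MAX = 0.0, 800.0   # the reported 0-800 range
HALF_LIFE_DAYS = 365.0              # hypothetical: impact halves every year

def recency_factor(age_days: float, half_life: float) -> float:
    """Exponential decay: 1.0 for a new event, 0.5 after one half-life."""
    return 0.5 ** (age_days / half_life)

def score(events, half_life: float = HALF_LIFE_DAYS) -> float:
    total = BASE_SCORE
    for e in events:
        # Unknown event kinds contribute nothing (weight 0.0).
        total += WEIGHTS.get(e.kind, 0.0) * recency_factor(e.age_days, half_life)
    # Clamp the result to the 0-800 range.
    return max(SCORE_MIN, min(SCORE_MAX, total))

if __name__ == "__main__":
    recent = [Event("late_payment", age_days=0)]
    old = [Event("late_payment", age_days=730)]
    print(score(recent))  # 560.0: full penalty
    print(score(old))     # 590.0: penalty decayed to a quarter after two years
    # Correcting an erroneous record means deleting it and recomputing.
    print(score([]))      # 600.0
```

Even in this toy, the same late payment costs 40 points or 10 points depending on a single recency parameter, and a wrong record keeps costing points until someone removes it. A real system would face the same design choices at far larger scale, with no public way to inspect them.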

Many citizens with high scores are happy with a system that gives them discounts and preferential treatment, but others fear the government will use the system to shape behavior and punish actions it deems inappropriate. Dissidents and minority groups fear the system will be biased against them.

Many details about how the system will work on a nationwide scale remain unclear; however, there are clear discrepancies between the data privacy standard that took effect last year and the scope of the social credit system. How the government addresses these problems will likely lead to even more podcasts, news articles, and blogs.

Sources

Sacks, Samm. “New China Data Privacy Standard Looks More Far-Reaching than GDPR.” Center for Strategic and International Studies, Jan 29, 2018. https://www.csis.org/analysis/new-china-data-privacy-standard-looks-more-far-reaching-gdpr

Denyer, Simon. “China’s plan to organize its society relies on ‘big data’ to rate everyone.” The Washington Post, Oct 22, 2016. https://www.washingtonpost.com/world/asia_pacific/chinas-plan-to-organize-its-whole-society-around-big-data-a-rating-for-everyone/2016/10/20/1cd0dd9c-9516-11e6-ae9d-0030ac1899cd_story.html?utm_term=.1e90e880676f