Technology’s Hidden Biases

By Shalini Kunapuli | June 18, 2021

As a daughter of science fiction film enthusiasts, I grew up watching many futuristic movies and shows, including 2001: A Space Odyssey, Back to the Future, Her, Ex Machina, Minority Report, and more recently Black Mirror. It was always fascinating to imagine what technology could look like in the future; it seemed like magic that would work at my every command. I wondered when society would reach the point where technology would aid us and exist alongside us. However, over the past few years I’ve realized that we are already living in that futuristic world. While we may not be commuting to work in flying cars just yet, technology is deeply embedded in every aspect of our lives, and there are subtle evils to the fantasies of our technological world.

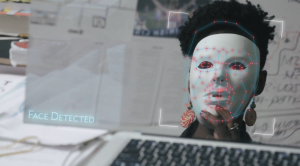

Coded Bias exists

We are constantly surrounded by technology – ranging from home assistants to surveillance technologies to health trackers. What a lot of people do not realize, however, is that many of these systems that are meant to serve us all are actually quite biased. Take the story of Joy Buolamwini, from the documentary Coded Bias. Buolamwini, a graduate researcher at the MIT Media Lab, noticed a major flaw in facial recognition software. The software could not detect her face as a Black woman, but once she put on a white mask, it detected her. In general, the software is least accurate at recognizing “highly melanated” women. As part of her research, Buolamwini discovered that the data sets the facial recognition software was trained on consisted mostly of white males. The people building the models tend to belong to a certain demographic, and as a result they compile data sets that look primarily like them. In ways like this, bias is coded into the algorithms and models that are used.
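One way to surface this kind of gap is to report accuracy separately for each demographic group instead of as a single overall number. The snippet below is a minimal sketch of that idea, not the method used in Buolamwini's research; the group names and the eight records are made up purely for illustration.

```python
from collections import defaultdict

def accuracy_by_group(records):
    """Return {group: fraction correct} for (group, predicted, actual) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}

# Hypothetical face-detection results (made-up data, not real measurements).
# Every image contains a face; "none" means the detector missed it.
records = [
    ("group_a", "face", "face"),
    ("group_a", "face", "face"),
    ("group_a", "face", "face"),
    ("group_a", "face", "face"),
    ("group_b", "face", "face"),
    ("group_b", "none", "face"),
    ("group_b", "face", "face"),
    ("group_b", "none", "face"),
]

overall = sum(pred == actual for _, pred, actual in records) / len(records)
per_group = accuracy_by_group(records)

print(f"overall accuracy: {overall:.2f}")
for group, acc in per_group.items():
    print(f"{group}: {acc:.2f}")
```

In this toy data the overall accuracy looks acceptable, while the per-group breakdown shows that one group is detected only half of the time. A single aggregate number can hide exactly the people a system fails.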

Ex Machi-NO, there are more examples

The implications go far beyond the research walls at MIT. In London, the police intimidated and searched a 14-year-old Black boy after using surveillance technology, only to realize later that the software had misidentified him. In the US, an algorithm meant to guide decision making in the health sector was created to predict which patients would need additional medical help, so that they could receive more tailored care. Even though the algorithm excluded race as a factor, it still prioritized assistance to White patients over Black patients, although the Black patients in the data were actually in greater need.
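How can a model that never sees race still be biased? One common mechanism is a proxy target: as the Washington Post piece in the references describes, the health algorithm was found to predict health care costs as a stand-in for medical need. The sketch below illustrates that mechanism with six made-up patients. The groups, condition counts, and dollar amounts are all hypothetical, and the assumption that group B spends less at the same level of need is built into the toy data.

```python
# Hypothetical patients: (patient_id, group, chronic_conditions, past_cost_usd).
# Assumption for illustration: group B has lower past spending than group A
# at a similar level of need (for example, because of unequal access to care).
patients = [
    ("p1", "A", 2, 5000),
    ("p2", "A", 3, 7000),
    ("p3", "A", 1, 3000),
    ("p4", "B", 4, 4500),
    ("p5", "B", 3, 3500),
    ("p6", "B", 2, 2000),
]

NEED, COST = 2, 3  # tuple positions of the two possible ranking targets

def top_k(patients, key_index, k):
    """Return the ids of the k patients ranked highest on the given column."""
    ranked = sorted(patients, key=lambda p: p[key_index], reverse=True)
    return [p[0] for p in ranked[:k]]

def count_group(patients, ids, group):
    """Count how many of the selected ids belong to the given group."""
    return sum(1 for p in patients if p[0] in ids and p[1] == group)

# Neither ranking looks at the group column at all.
by_cost = top_k(patients, COST, k=3)
by_need = top_k(patients, NEED, k=3)

print("selected by cost:", by_cost, "group B:", count_group(patients, by_cost, "B"))
print("selected by need:", by_need, "group B:", count_group(patients, by_need, "B"))
```

Ranking by cost selects fewer group B patients than ranking by need, even though the group label is never used. Leaving a sensitive attribute out of a model does not remove bias when the quantity being predicted already encodes it.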

Other underrepresented groups are also negatively impacted by different technologies. Most notably, women tend to get the short end of the stick and have their accomplishments and experiences continually erased due to human history and gender politics. A book I read recently, “Invisible Women” by Caroline Criado Perez, details several examples of gender data bias, some of which are so integrated into our normal lives that we do not usually think about them.

For example, women are 47% more likely to be seriously injured in a car crash. Why? Women are on average shorter than men, and thus tend to pull their seats further forward to reach the pedals. However, this is not the “standard” car seating position. Even the airbags are placed for the size of an average male body. Crash tests usually use dummies modeled on male bodies as well, which leads to higher risk for women in a car crash since the cars have not been tested with female-sized bodies.

Additionally, women are more likely to have a heart attack misdiagnosed, because women often don’t have the “typical” symptoms of a heart attack. Female pianists are more likely to suffer hand injuries because piano key size is based on the male handspan, and the female handspan is smaller on average. The length of an average smartphone is 5.5 inches, which is uncomfortable for many women because of their smaller handspans. Google Home is 70% more likely to recognize male speech, because it is trained on male-dominated corpora of voice recordings.

The list goes on and on. In all of these examples, the “standard” is male: the “true norm” or “baseline” condition is centered around male experiences. Furthermore, there is a lack of gender diversity within the tech field, so the teams developing a majority of these technologies and algorithms are primarily male as well. This itself leads to gender data bias in different systems, because the teams building technologies implicitly focus on their own needs first without considering the needs of groups they may not know much about.

Wherever there is data, there is bound to be an element of bias in it, because data is drawn from society, and society is inherently biased. The consequences of using biased data can compound existing issues. This unconscious bias in algorithms and models further widens the gap between different groups, causing more inequality.

Back to the Past: Rethinking Models

Data doesn’t just describe the world nowadays; it is often used to shape it. More and more power is being given to data and technology, and we rely on them more heavily. The first step toward fixing this is recognizing that these systems are flawed. It may be easy to rely on a model, especially when everything is a black box, as you can just get a quick and supposedly straightforward result out. We need to take a step back, however, and take the time to ask ourselves if we trust the result completely. Ultimately, the models are not making decisions that are ethical; they are only making decisions that are mathematical. This means that it is up to us as data scientists, as engineers, as humans, to realize that these models are biased because of the data that we provide to them.

As a result of her research, Buolamwini started the Algorithmic Justice League to advocate for laws that protect people’s rights. We can take a page out of her book and start to actively think about how the models we build and the code we write affect society. We need to advocate for more inclusive practices across the board, whether in schools, workplaces, hiring, or government. It is up to us to come up with solutions so we can protect the rights of groups that may not be able to protect themselves. We need to be voices of reason in a world where people rely more and more on technology every day. Instead of dreaming about futuristic technology through movies, let us work together and build systems now for a more inclusive future. After all, we are living in our own version of a science fiction movie.

References

* https://www.wbur.org/artery/2020/11/18/documentary-coded-bias-review

* https://www.nytimes.com/2020/11/11/movies/coded-bias-review.html

* https://www.washingtonpost.com/health/2019/10/24/racial-bias-medical-algorithm-favors-white-patients-over-sicker-black-patients/

* https://www.mirror.co.uk/news/uk-news/everyday-gender-bias-makes-women-14195924

* https://www.netflix.com/title/81328723

* https://www.amazon.com/Invisible-Women-Data-World-Designed/dp/1419729071