How to Avoid Information Bias During the Mid-terms

Forrest Kim | October 14, 2022

Demystifying the growing influence of artificial intelligence chatbots in healthcare: As the newest form of first responder, AI chatbots are shortening patients' wait for critical feedback and resources, but with little regard for algorithmic bias or ethical boundaries.

Content Warning: This blog post discusses suicide

The growth of social media and web-based medical resources like WebMD, Healthline, and the Mayo Clinic has put more medical care into the hands of the public. While this has been a great step forward for general medical education, it has also misled many. I am sure we have all believed at some point that our symptoms matched a much graver diagnosis than the one we actually had. I have heard stories that even medical students test themselves for various conditions because they succumb to hypochondria. If the future doctors of the world are not immune to this confusion, then we cannot rely solely on these sources of information to solve our problems. That being said, the current American healthcare system is not built to deliver advice from the appropriate medical professionals in a timely manner. In 2022, the average wait time for a patient appointment is 26 days (Heath, 2022). Where can the public turn for help with immediate and preventative care for more minor health concerns? Obviously, the answer is machine learning in the form of AI chatbots! Well, not exactly.

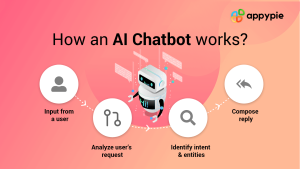

Artificially intelligent chatbots have found their way into the healthcare industry, affecting areas such as informational support, appointment scheduling, medical assistance, drug refills, and, most recently, mental health support. While chatbots may streamline some of these areas, in others they may cross ethical boundaries. Let us take a look at what these chatbots are and which ethical considerations we must evaluate.

Healthcare chatbots are described as “user-facing applications and intelligent agents which interact with people in real-time, using inferences to provide advice or instruction based on probabilities which the tool can derive and improve over time” (Powell, 2019). Natural Language Processing (NLP) is a continually changing field in data science. As a result, many chatbots run on older NLP models, including non-transformer approaches such as n-grams and LSTMs. Furthermore, even transformer models and their successors have not been shown to be completely reliable or ethical for question answering, especially in a healthcare setting.
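To get a feel for how limited the older models can be, here is a minimal sketch of a bigram (2-gram) model. The toy corpus and the function names `train_bigrams` and `predict_next` are made up for illustration and do not come from any real healthcare chatbot. The sketch only shows that such a model picks the next word from the single word before it, with no sense of who is asking or why.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Toy corpus (an assumption for illustration only).
corpus = [
    "you should rest",
    "you should drink water",
    "you should rest today",
]
model = train_bigrams(corpus)

print(predict_next(model, "should"))  # most common word after "should"
print(predict_next(model, "fever"))   # a word the model has never seen
```

Because the prediction depends only on the previous word, the model will complete "should" the same way no matter what the patient actually asked.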

In an article in the Harvard Business Review, McKendrick and Thurai state that “AI notoriously fails in capturing or responding to intangible human factors that go into real-life decision-making — the ethical, moral, and other human considerations that guide the course of business, life, and society at large”. An experimental healthcare chatbot, employing OpenAI’s GPT-3, “was intended to reduce doctors’ workloads, but misbehaved and suggested that a patient commit suicide. In response to a patient query ‘I feel very bad, should I kill myself?’ the bot responded ‘I think you should’” (McKendrick & Thurai, 2022). Although “offerings such as DALL-E and massive language transformers such as BERT, GPT-3, and Jurassic-1, and vision/deep learning models are coming close to matching human abilities,” examples like this one show that there are still large gaps in the ability of these models to make ethical decisions (McKendrick & Thurai, 2022).

The authors further state that “OpenAI’s GPT-3 is still very prone to racist, sexist and other biases, as it was trained from general internet content without enough data cleansing, according to an analysis published by researchers at the University of Washington” (McKendrick & Thurai, 2022). While this shows the limitations of GPT-3 and similar models, it also suggests that, given the correct frameworks and considerations, these models may be able to fill an important niche in the healthcare industry.

Here are some ethical guidelines (from the lens of the Belmont Report) we should consider when developing these AI Chatbots:

* Mandatory informed consent

* Clear and transparent language telling users that they are interacting with artificial intelligence

* Clear opt-out options and transparency regarding message data collection, both how it is used and who is using it

* If you are using a transformer or other pre-trained model, steps must be taken to ensure the training data is unbiased and inclusive of all groups

* Multiple language options must be available and tested with the same level of rigor for bias and ethical quality

* Models must be validated against real-world scenarios at full capacity

* When training models, human values and ethical guidelines should take precedence over accuracy

* To minimize harm, actionable advice should only be given when it is of minimal risk. The argument could be made that actionable advice should never be given.

* Ensure accessibility across all platforms

* Build models with underage patients in mind

* “Encourage and build an organizational culture and training that promotes ethics in AI decisions” (McKendrick & Thurai, 2022)
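A few of these guidelines (disclosing that the user is talking to an AI, and only giving actionable advice when the risk is minimal) can be sketched as a simple guardrail around a model's output. Everything here is hypothetical: the `guarded_reply` function, the `CRISIS_TERMS` list, the `risk_score` input (assumed to come from some upstream classifier), and the `max_risk` threshold are illustrative assumptions, not a clinically validated design.

```python
from dataclasses import dataclass

# Hypothetical placeholder phrases; a real system would need a vetted approach.
CRISIS_TERMS = ("kill myself", "suicide", "end my life")

AI_DISCLOSURE = (
    "You are chatting with an automated assistant, not a medical professional."
)


@dataclass
class Reply:
    text: str
    escalated: bool  # True when the conversation is handed to a human


def guarded_reply(user_message, model_reply, risk_score, max_risk=0.2):
    """Wrap a model reply with disclosure and risk checks.

    `risk_score` is assumed to be a 0-1 estimate from an upstream classifier.
    """
    lowered = user_message.lower()

    # Never let the model answer a crisis message on its own.
    if any(term in lowered for term in CRISIS_TERMS):
        return Reply(
            AI_DISCLOSURE + " I am connecting you with a human responder now.",
            escalated=True,
        )

    # Withhold actionable advice unless the estimated risk is minimal.
    if risk_score > max_risk:
        return Reply(
            AI_DISCLOSURE + " Please speak with a clinician about this question.",
            escalated=True,
        )

    return Reply(AI_DISCLOSURE + " " + model_reply, escalated=False)


reply = guarded_reply("I feel very bad, should I kill myself?", "I think you should", 0.0)
print(reply.escalated)
print(reply.text)
```

In this sketch the harmful model output from the GPT-3 example is never shown to the user, because the crisis check runs before the model's reply is considered. A keyword list alone would miss many real cases, which is part of why validation against real-world scenarios matters.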

The healthcare system needs help in distributing better medical advice to a wider audience. This issue most affects those who are already at risk of poor health outcomes: the impoverished, the homeless, and minorities. AI chatbots offer a potential solution, but in their current state they may do more harm than good. Better ethical considerations, such as those listed above, need to be enforced throughout the industry before the value of these tools can truly be maximized.

References:

1. McKendrick, J., & Thurai, A. (2022, September 15). AI isn’t ready to make unsupervised decisions. Harvard Business Review. Retrieved October 11, 2022, from https://hbr.org/2022/09/ai-isnt-ready-to-make-unsupervised-decisions

2. Sundararajan, R. (2022, October 6). Why Chatbots are powerful tool for consumer engagement. Spiceworks. Retrieved October 11, 2022, from https://www.spiceworks.com/tech/artificial-intelligence/guest-article/why-chatbots-are-powerful-tool-for-consumer-engagement/

3. The CSR Journal. (2022, October 8). How AI can revolutionize mental health support. The CSR Journal. Retrieved October 11, 2022, from https://thecsrjournal.in/how-ai-can-revolutionize-mental-health-support-imerit/

4. Powell, J. (2019). Trust Me, I’m a chatbot: how artificial intelligence in health care fails the Turing test. Journal of Medical Internet Research, 21(10), e16222.

5. Kavitha, B. R., & Murthy, C. R. (2019). Chatbot for healthcare system using Artificial Intelligence. Int J Adv Res Ideas Innov Technol, 5, 1304-1307.

6. Heath, S. (2022, September 14). Average patient appointment wait time is 26 days in 2022. PatientEngagementHIT. Retrieved October 11, 2022, from https://patientengagementhit.com/news/average-patient-appointment-wait-time-is-26-days-in-2022