Tell Me How You Really Feel: Zoom’s Emotion Detection AI

Evan Phillips | July 5, 2022

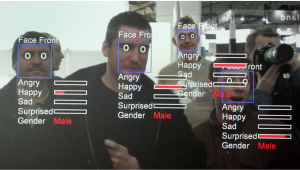

We’ve all had a colleague at one point or another whom we couldn’t quite read. When we finish a presentation, we can’t tell if they enjoyed it; their facial expressions never seem to match their word choice, and their tone doesn’t always suit the subject of conversation. Zoom, a proprietary videotelephony software program, seems to have discovered the panacea for this coworker archetype. Zoom recently announced that it is developing an AI system called “Zoom IQ” for detecting human emotions from facial expressions and speech patterns. The system is intended to help salespeople improve their pitches based on the emotions of call participants (source).

The Problem

While the prospect of Terminator-like emotion detection sounds revolutionary, many are not convinced. More than 27 rights groups are now pushing back, calling for Zoom to end its exploration of controversial emotion recognition technology. In an open letter to Zoom CEO and co-founder Eric Yuan, these groups argue that the company’s data mining efforts violate privacy and human rights and that the technology is inherently biased. Caitlin Seeley George, Director of Campaign and Operations at Fight for the Future, said, “If Zoom advances with these plans, this feature will discriminate against people of certain ethnicities and people with disabilities, hardcoding stereotypes into millions of devices.”

Is Human Emotional Classification Ethically Feasible?

In short, no. Anna Lauren Hoffmann, assistant professor with The Information School at the University of Washington, explains in her article “Where fairness fails: data, algorithms, and the limits of antidiscrimination discourse” that human-classifying algorithms are not only generally biased but inherently flawed in conception. Hoffmann argues that humans who create such algorithms need to look at “the decisions of specific designers or the demographic composition of engineering or data science teams to identify their social blindspots” (source). The average person carries some form of subconscious bias into everyday life, and accepting that bias is certainly no easy feat, let alone identifying it. Even assuming the Zoom IQ classification algorithm did work well, company executives might get better at gauging meeting participants’ emotions only at the expense of their own credibility as leaders who can read the room. Such AI has serious potential to undermine the “people skills” that many corporate employees pride themselves on as one of their main differentiating abilities.

Is There Any Benefit to Emotional Classification?

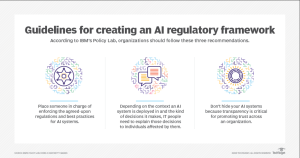

While companies like IBM, Microsoft, and Amazon have established several principles to address the ethical issues of facial recognition systems in the past, there has been little progress on the lack of diversity in datasets and the invasiveness of facial recognition AI in the last few decades. With more transparency for users about the inner workings of AI, less bias in datasets stemming from innate human bias, and stricter policy regulation of AI, emotional classification AI has the potential to become a major asset to companies like Zoom and those who use its products.

References

1) https://gizmodo.com/zoom-emotion-recognition-software-fight-for-the-futur-1848911353