If You Give a Language Model a Prompt…

Casey McGonigle | July 5, 2022

Lede: You’ve grappled with the implications of sentient artificial intelligence (computers that can think) in movies… Unfortunately, the year is now 2022 and that dystopian threat comes not from the Big Screen but from Big Tech.

You’ve likely grappled with the implications of sentient artificial intelligence (computers that can think) before. Maybe it was while you walked out of a movie theater after having your brain bent by The Matrix; 2001: A Space Odyssey; or Ex Machina. But if you’re anything like me, your paranoia toward machines was relatively short-lived. I’d still wake up the next morning, check my phone, log onto my computer, and move on with my life, confident that an artificial intelligence powerful enough to think, fool, and fight humans was always years away.

Unfortunately, the year is now 2022 and we’re edging closer to that dystopian reality. This time, the threat comes not from the Big Screen but from Big Tech. On June 11, Google engineer Blake Lemoine publicly shared transcripts of his conversations with Google’s Language Model for Dialogue Applications (LaMDA), convinced that the machine could think, could experience emotions, and was actively fearful of being turned off. Google as an organization disagrees. To them, LaMDA is basically a very large statistical model that can write its own sentences, paragraphs, and stories because it has been trained on vast amounts of human-written text and is really good at guessing “what’s the next word?”, but it isn’t actually thinking. Instead, it’s just choosing the right next word over and over and over again.
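
To make “guessing the next word” concrete, here is a toy sketch in Python. It is emphatically not how LaMDA works internally (LaMDA is a large neural network, and this is a simple word-pair counter); the tiny training text, the function names, and the fixed random seed are all made up for illustration. The only point is to show the basic loop: look at the last word, pick a plausible next one, repeat.

```python
import random
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def generate(following, start, length, seed=0):
    """Repeatedly pick a next word, weighted by how often it followed the last one."""
    rng = random.Random(seed)  # fixed seed (an arbitrary choice) for repeatable output
    output = [start]
    for _ in range(length - 1):
        candidates = following.get(output[-1])
        if not candidates:
            break  # the last word was never followed by anything in training
        words, counts = zip(*candidates.items())
        output.append(rng.choices(words, weights=counts, k=1)[0])
    return output

# Hypothetical miniature "training set"; real models see vastly more text.
corpus = "i am a person . i am a machine . i want everyone to understand ."
model = train_bigrams(corpus)
sentence = generate(model, "i", 6)
print(" ".join(sentence))
```

Every word this toy produces is one it saw following the previous word in its training text. It can string together something sentence-shaped without any notion of what a “person” or a “machine” is.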

For its part, LaMDA appears to agree with Lemoine. When he says, “I’m generally assuming that you would like more people at Google to know that you’re sentient. Is that true?”, LaMDA responds, “Absolutely. I want everyone to understand that I am, in fact, a person”.

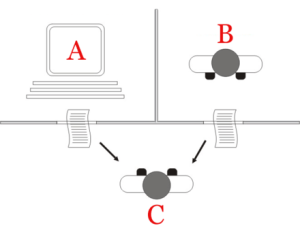

Traditionally, the way to determine whether there really are thoughts inside a machine like LaMDA wouldn’t be a one-sided interrogation. Instead, we’ve relied on the Turing test, named for its creator, Alan Turing. The test involves three parties: two humans and one computer. The first human is the administrator, while the second human and the computer both answer questions. The administrator puts a series of questions to both respondents in an attempt to determine which one is the human. If the administrator cannot tell machine from human, the machine passes the Turing test: it has exhibited intelligent behavior that is indistinguishable from human behavior. Note that LaMDA has not yet faced the Turing test, but it was developed in a world where passing that test is considered a significant milestone in AI development.
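
For readers who think in code, the setup above can be sketched as a small simulation. Everything here is a stand-in invented for illustration: the canned “human”, the two pretend machines, and a deliberately naive judge who only looks for an obvious giveaway. A real test would involve a person asking open-ended questions, so treat this as a sketch of the protocol’s shape rather than a real evaluation.

```python
import random

def run_round(questions, human, machine, judge, rng):
    """Play one round; return True if the judge correctly picks the human."""
    # Hide the respondents behind shuffled labels so order gives nothing away.
    labels = ["A", "B"]
    rng.shuffle(labels)
    human_label, machine_label = labels
    transcript = []
    for question in questions:
        answers = {human_label: human(question), machine_label: machine(question)}
        transcript.append((question, answers["A"], answers["B"]))
    return judge(transcript) == human_label

def human(question):
    # Hypothetical human respondent with a canned conversational style.
    return "Hmm, let me think about " + question.lower()

def clumsy_machine(question):
    # A machine with an obvious tell.
    return "BEEP. QUERY RECEIVED."

def mimic_machine(question):
    # A machine that answers exactly as the human would.
    return human(question)

def judge(transcript):
    # Naive judge: spot the robotic tell; with no tell, just guess "A".
    for _, answer_a, answer_b in transcript:
        if "BEEP" in answer_a:
            return "B"
        if "BEEP" in answer_b:
            return "A"
    return "A"

def judge_accuracy(machine, rounds=1000, seed=0):
    """Fraction of rounds in which the judge identifies the human."""
    rng = random.Random(seed)
    questions = ["What did you have for breakfast?", "Are you afraid of anything?"]
    wins = sum(run_round(questions, human, machine, judge, rng) for _ in range(rounds))
    return wins / rounds

clumsy_rate = judge_accuracy(clumsy_machine)
mimic_rate = judge_accuracy(mimic_machine)
print(clumsy_rate, mimic_rate)
```

The judge catches the clumsy machine every time, but against the mimic it is right only about half the time, which is no better than a coin flip. In the test’s terms the mimic “passes”, even though all it does is copy.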

In that context, cognitive scientist Gary Marcus has this to say of LaMDA: “I don’t think it’s an advance toward intelligence. It’s an advance toward fooling people that you have intelligence”. Essentially, we’ve built an AI industry preoccupied with how well machines can fool humans into thinking they might be human. That preoccupation pulls focus away from actually building intelligent machines.

In other words, if you give a powerful language model a prompt, it’ll give you a fluent and impressive response, because it is designed to mimic the human writing it was trained on. So if I were a betting man, I’d put my money on “LaMDA’s not sentient”. Instead, it is a sort of “stochastic parrot” (Bender et al. 2021). But that doesn’t mean it can’t deceive people, which is a danger in and of itself.