A “COMPAS” That’s Pointing in the Wrong Direction

By Akaash Kambath | July 9, 2021

Today’s criminal justice system is plagued by well-known problems of effectiveness and fairness. African-Americans have been the victims of pervasive prejudice throughout the system’s history. When imprisonment rates (defined as the number of prisoners per 100,000 people) are compared for black and white Americans, the imprisonment rate for black prisoners was “nearly six times the imprisonment rate for whites” (Gramlich 2019). Because each rate is calculated per 100,000 people in that group, the gap cannot be explained by differences in population size: black Americans are imprisoned far out of proportion to their share of the U.S. population. Although efforts have been made to narrow this disparity, there is still a long way to go. One way governments have tried to improve the criminal justice system is by using quantitative risk assessment tools to determine a convicted person’s likelihood of re-offending in an unbiased and fair way. The Correctional Offender Management Profiling for Alternative Sanctions (COMPAS) score is one such tool, which purports to predict how likely someone is to recidivate. It was developed by Northpointe, a company now known as Equivant, which uses a proprietary algorithm to calculate the score; a higher COMPAS score suggests that the individual is more likely to re-offend. The COMPAS score is given to judges when they determine sentences, and they use it alongside the convicted person’s criminal record to make their decision.
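The imprisonment-rate definition used here can be written as a formula. The symbols are my own notation, not Gramlich’s: $P_g$ is the number of prisoners in group $g$, $N_g$ is that group’s population, and $R$ is the black-to-white ratio of rates.

$$
r_g = \frac{P_g}{N_g} \times 100{,}000
$$

$$
R = \frac{r_{\text{black}}}{r_{\text{white}}} \approx 6
$$

The only figure assumed here is Gramlich’s “nearly six times,” which is the value of $R$. Because $N_g$ appears in each denominator, $R$ compares per-capita rates rather than raw prisoner counts.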

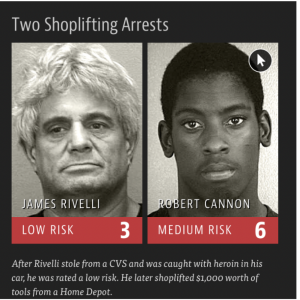

Presumably, the actors involved in creating and implementing the COMPAS score (employees and executives at Equivant, policymakers, and the judges using the tool) envisioned that it would help create a safer society by keeping those most “at risk” of recidivating off the streets and behind bars in a fair and accurate way. In recent years, however, COMPAS has come under heavy scrutiny for doing the opposite. In 2016, ProPublica investigated COMPAS’s performance and reported that the tool had “falsely flag[ged] black defendants as future criminals, wrongly labeling them this way at almost twice the rate as white defendants” (Angwin et al. 2016). ProPublica’s article also notes that COMPAS erred for white defendants in the other direction: white defendants who were given low-risk scores went on to reoffend at a higher rate than their scores predicted. Instead of judging an individual’s risk of re-offending in an unbiased way, the COMPAS score does the opposite. It reaffirms society’s racial stereotypes, casting whites as “less dangerous” and people of color as inherently more dangerous and more likely to commit a crime. Furthermore, a 2018 article in The Atlantic described research challenging the claim that the algorithm’s predictions are better than human ones. Researchers gave 400 volunteers short “descriptions of defendants from the ProPublica investigation, and based on that, they had to guess if the defendant would commit another crime within two years.” Strikingly, the volunteers answered correctly 63 percent of the time, and that figure rose to 67 percent when their answers were pooled. By comparison, the COMPAS algorithm was accurate 65 percent of the time. This suggests that the tool is “barely better than individual guessers, and no better than a crowd” (Yong 2018).

Compounding this deficiency, the proprietary algorithm behind the COMPAS score was found to perform no better than a basic regression model built by two Dartmouth researchers. This might suggest that the tool is simply unsophisticated. It is more likely, however, that recidivism prediction has hit a “ceiling of sophistication,” beyond which a more complex model does little to improve accuracy.
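The discussion of COMPAS’s performance relies on two different yardsticks. ProPublica compared how often people who did *not* reoffend were nonetheless flagged as high risk, which is a false positive rate computed separately for each racial group. The Atlantic reported overall accuracy figures of 63, 65, and 67 percent.

The Python sketch below shows how each measure is calculated. It is a minimal illustration of my own, not ProPublica’s or Equivant’s code. The record layout `(group, flagged_high_risk, reoffended)` and all function names are hypothetical. `majority_vote` assumes that “pooling” means a simple majority vote with ties counted as “will not reoffend,” which may differ from how the researchers actually pooled answers.

```python
from collections import defaultdict
from typing import Iterable, Sequence

# Hypothetical record layout: (group, flagged_high_risk, reoffended).
Record = tuple[str, bool, bool]


def accuracy(predicted: Sequence[bool], actual: Sequence[bool]) -> float:
    """Share of yes/no predictions that match what actually happened."""
    if not actual or len(predicted) != len(actual):
        raise ValueError("need two non-empty sequences of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def false_positive_rate_by_group(records: Iterable[Record]) -> dict[str, float]:
    """Per group: FP / (FP + TN), i.e. the share of people who did NOT
    reoffend but were still flagged as high risk."""
    flagged_wrongly: dict[str, int] = defaultdict(int)
    did_not_reoffend: dict[str, int] = defaultdict(int)
    for group, flagged, reoffended in records:
        if not reoffended:  # only non-reoffenders can be false positives
            did_not_reoffend[group] += 1
            if flagged:
                flagged_wrongly[group] += 1
    return {g: flagged_wrongly[g] / n for g, n in did_not_reoffend.items()}


def majority_vote(guesses: Sequence[bool]) -> bool:
    """Pool several people's guesses about one defendant.

    Assumption: a simple majority decides, and a tie counts as
    'will not reoffend' (False).
    """
    yes = sum(guesses)
    return yes > len(guesses) - yes


if __name__ == "__main__":
    # Toy, made-up records for illustration only (not ProPublica data).
    toy = [
        ("A", True, False), ("A", False, False), ("A", True, True),
        ("B", False, False), ("B", False, False),
        ("B", True, False), ("B", False, False),
    ]
    print(false_positive_rate_by_group(toy))  # {'A': 0.5, 'B': 0.25}
    print(majority_vote([True, True, False]))  # True
```

In the toy data, group A’s false positive rate is twice group B’s. That is the shape of the disparity ProPublica described, though the numbers themselves are invented. The two measures answer different questions: a tool can have similar overall accuracy for two groups while its false positive rates differ sharply.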

Though I have described a number of ways in which COMPAS fails to fulfill the vision its proponents had in mind, I am not suggesting that technology cannot help the criminal justice system at all. In fact, some researchers have argued that even imperfect algorithms can improve the criminal justice system in a number of ways (Corbett-Davies, Goel, and González-Bailón 2017). I simply do not believe that it can help in this situation: an individual’s likelihood of recidivating cannot be determined in a truly unbiased way, and the implementation of COMPAS has only hurt the criminal justice system’s efforts to treat people of all races fairly in incarceration decisions. The harm COMPAS has done to the criminal justice system and to people of color is a strong reminder of how important it is to thoroughly evaluate the costs and benefits of all alternatives before implementing a new model or quantitative tool to solve a problem. As Sheila Jasanoff emphasizes in The Ethics of Invention, this includes the null alternative. She laments that assessments and evaluations of such tools “are typically undertaken only when a technological project…is already well underway” (Jasanoff 2016). She also observes that those evaluating such tools rarely consider the null option; the idea of “doing without a product or process altogether” is seldom put under investigation. Jasanoff’s work suggests a broader lesson. The actors pushing for a new technology may believe it will greatly benefit humanity. Yet implementing novel technology without fully researching its alternatives, in the criminal justice system and beyond, may end up hurting people more than it helps.

Works Cited

1. John Gramlich, “The gap between the number of blacks and whites in prison is shrinking,” Pew Research Center, April 30, 2019, https://www.pewresearch.org/fact-tank/2019/04/30/shrinking-gap-between-number-of-blacks-and-whites-in-prison/.

2. Julia Angwin, Jeff Larson, Surya Mattu and Lauren Kirchner, “Machine Bias,” ProPublica, May 23, 2016, https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

3. Ed Yong, “A Popular Algorithm Is No Better at Predicting Crimes Than Random People,” The Atlantic, January 17, 2018, https://www.theatlantic.com/technology/archive/2018/01/equivant-compas-algorithm/550646/.

4. Sam Corbett-Davies, Sharad Goel and Sandra González-Bailón, “Even Imperfect Algorithms Can Improve the Criminal Justice System,” The New York Times, December 20, 2017, https://www.nytimes.com/2017/12/20/upshot/algorithms-bail-criminal-justice-system.html.

5. Sheila Jasanoff, “Risk and Responsibility,” in The Ethics of Invention: Technology and the Human Future, 2016, pp. 31-58.

6. Image source for first image: https://datastori.es/wp-content/uploads/2016/09/2016-09-23-15540.png