Clearview AI: The startup that is threatening privacy

By Stefania Halac, October 16, 2020

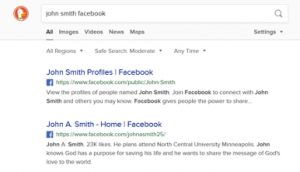

Imagine walking down the street when a stranger points their camera at you and immediately pulls up all your pictures from across the internet: your Instagram posts, your friends’ posts, any picture that you appear in, some of which you may have never seen before. This stranger could now ascertain where you live, where you work, where you went to school, whether you’re married, who your children are… This is one of many compromising scenarios that may become part of normal life if facial recognition software becomes widely available.

Clearview AI, a private technology company, offers facial recognition software that can effectively identify any individual. Facial recognition technology is intrinsically controversial, so much so that certain companies like Google don’t offer facial recognition APIs due to ethical concerns. And while some large tech companies like Amazon and Microsoft do sell facial recognition APIs, there is an important distinction between their offerings and Clearview’s. Amazon and Microsoft only allow you to search for faces in a private database of pictures supplied by the customer. Clearview instead allows for recognition of individuals among the general public: practically anyone can be recognized. What sets Clearview apart is not its technology, but rather the database it assembled of over three billion pictures scraped from the public internet and social media. Clearview AI did not obtain consent from individuals to scrape these pictures, and it has been sent cease-and-desist letters by major tech companies like Twitter, Facebook and YouTube because its practices violate their policies.

In the wake of the Black Lives Matter protests earlier this year, IBM, Microsoft and Amazon updated their policies to restrict the sale of their facial recognition software to law enforcement agencies. Clearview AI, by contrast, not only sells to law enforcement and government agencies, but until May of this year was also selling to private companies, and has even been reported to have granted access to high-net-worth individuals.

So what are the risks? On one hand, the algorithms that feed these technologies are known to be heavily biased and to perform more poorly on certain groups, such as women and African Americans. In a recent study, Amazon’s Rekognition was found to misclassify women as men 19% of the time, and darker-skinned women as men 31% of the time. If this technology were to be used in the criminal justice system, one implication is that dark-skinned people would be more likely to be wrongfully identified and convicted.

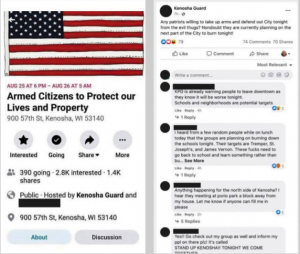

Another major harm is that this technology essentially gives its users the ability to find anyone. Clearview’s technology would enable surveillance at protests, AA meetings and religious gatherings. Attendance at any one of these events would become a matter of public record. In the wrong hands, such as those of a former abusive partner or a white supremacist organization, this surveillance technology could even be life-threatening for vulnerable populations.

In response, the ACLU filed a lawsuit against Clearview AI in May for violation of the Illinois Biometric Information Privacy Act (BIPA), alleging that the company illegally collected and stored data on Illinois citizens without their knowledge or consent and then sold access to its technology to law enforcement and private companies. While some cities like San Francisco and Portland have enacted facial recognition bans, there is no overarching national law protecting civilians from these blatant privacy violations. With no such law in sight, this may be the end of privacy as we know it.

References:

We’re Taking Clearview AI to Court to End its Privacy-Destroying Face Surveillance Activities