China’s Scary But Robust Surveillance System

By Anonymous | June 18, 2021

Introducing the Problem

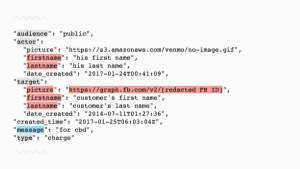

In 2014, the Chinese government introduced a plan that would allow it to keep track of its citizens and score their behavior. The government seemed to want a world where its people are constantly monitored: where they shop, how they pay their bills, and even what content they watch. In many ways, this is the same kind of data collection that major US companies like Google and Facebook already carry out, but on steroids; the difference is that the Chinese government at least lets people know that their every move is being watched. On top of that, people are judged and given a score based on their interactions and lifestyle. A high “citizen score” can earn rewards such as faster internet service, while actions such as posting content on social media that contradicts the Chinese government can lower a person’s score. Private companies in China constantly work with the government to gather this data from social media and other online behavior.

A key potential issue is that the government would be technically capable of factoring the behavior of a Chinese citizen’s friends and family into his or her score. For example, a friend’s anti-government political post could lower your own score, so this type of scoring mechanic could strain relationships between individuals and the people close to them. The Chinese government is taking this project seriously, and privileges that one may take for granted in the US could be put in jeopardy by a low score, such as obtaining a visa to travel abroad or even the right to travel by train or plane within the country. Many people understand the risks and dangers this poses; as one internet privacy expert says, “What China is doing here is selectively breeding its population to select against the trait of critical, independent thinking.” However, because lack of trust is a serious problem in China, many Chinese citizens actually welcome this potential system.

To relate this back to the US, I wonder whether this type of system could ever exist in our country and, if so, what it would look like. Is it ethical for private companies to assist in mass surveillance and turn their data over to the government? Chinese companies are now required to assist in government spying while US companies are not, but what happens when Amazon or Facebook find themselves in the position that Alibaba and Tencent are in now?

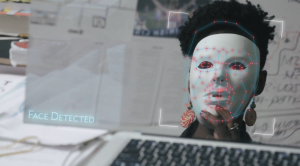

A key benefit of having so many cameras and so much surveillance throughout China’s major cities is that it helps identify criminals and track crime. For example, in Chongqing, which has more surveillance cameras per capita than any other city in the world, the surveillance system scans the facial features of people on the streets from frames of video footage in real time. These scans are then compared against data that already exists in a police database, such as photos of criminals. If a comparison produces a match score above a threshold, typically 60% or higher, police officers are notified. One could argue that such a massive surveillance system benefits society, but only if law enforcement officials are transparent and follow good practices; otherwise, it becomes a serious problem.
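The threshold-based matching step can be illustrated with a small Python sketch. This is a hypothetical toy, not the Chongqing system’s actual code, which is not public: it assumes each face has already been converted into a numeric “embedding” vector, uses cosine similarity as the match score, and treats the 60% figure as a threshold of 0.60. The names `find_matches`, `police_db`, `record_001`, and the vectors themselves are made up for illustration.

```python
import math

# Assumed threshold, based on the "60% or higher" figure; real systems may score differently.
MATCH_THRESHOLD = 0.60


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine similarity (between -1 and 1) of two face-embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def find_matches(scan, database, threshold=MATCH_THRESHOLD):
    """Return (record_id, score) pairs at or above the threshold, best match first."""
    hits = []
    for record_id, stored in database.items():
        score = cosine_similarity(scan, stored)
        if score >= threshold:
            hits.append((record_id, score))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


# Hypothetical 3-dimensional embeddings; real face embeddings have hundreds of dimensions.
police_db = {
    "record_001": [0.9, 0.1, 0.4],
    "record_002": [0.1, 0.8, 0.2],
}
street_scan = [0.85, 0.15, 0.35]

for record_id, score in find_matches(street_scan, police_db):
    print(f"Notify officers: {record_id} (similarity {score:.2f})")
```

In this toy run only `record_001` clears the threshold, so only that record would trigger a notification. The threshold is the key lever: lowering it flags more people, including more false matches, which is why transparency about how it is set matters.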

References:

- https://www.theguardian.com/cities/2019/dec/02/big-brother-is-watching-chinese-city-with-26m-cameras-is-worlds-most-heavily-surveilled

- https://www.theatlantic.com/international/archive/2018/02/china-surveillance/552203/

- https://www.thesun.co.uk/news/14276151/china-plans-high-tech-streets-spy-cams-inform-neighbours/