WARNING: Your Filter Bubble Could Kill You

By Author: Anonymous | October 27, 2022

Filter bubbles can distort the health information accessible to you and result in misinformed medical decision making.

Filter bubbles are the talk of the town. Nowadays the political and social landscape seems to be increasingly polarized, and it’s easy to point fingers at the social media sites and algorithms that tailor the information we all consume. Some of the bubbles we find ourselves in harmlessly generate content we want to see – such as a new hat to go with the gloves we just bought. The dark side of filter bubbles, however, can be dangerous when personal health information is customized for us in a way that leads to misinformed decisions.

The ‘Filter Bubble’ is a term coined by Eli Pariser in his book The Filter Bubble: How the New Personalized Web Is Changing What We Read and How We Think. He describes our new “era of personalization” in which social media platforms and search engines use advanced models to craft content they predict a user would like to see, based on past browsing history and site engagement. This virtually conjured world is intended to perfectly match a user’s preferences and opinions so that they’ll enjoy what they’re seeing and keep coming back. All of this aims to increase user traffic and pad the platforms’ bottom line.

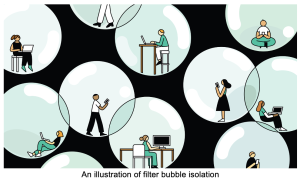

An illustration of filter bubble isolation

The negative implications of filter bubbles have been extensively researched, especially as they relate to political polarization. A more serious consequence of tailored content and search results arises when the filter interferes with the quality of the health information people receive. In his article The filter bubble and its effect on online personal health information, Harald Holone describes how the technology can present serious new challenges for doctors and their patients. People are increasingly turning to ‘Doctor Google’ or social forums, instead of their licensed primary care provider, to ask questions and inform decisions that will affect their health. Holone describes how the relationship between doctors and patients is shifting because people are starting to draw their own conclusions before setting foot in a doctor’s office, which can diminish the doctor’s medical authority. The real issue occurs when people use their personalized feeds or biased public forums for guidance while still expecting objective results. As touched on before, search results can be heavily skewed by prior browsing history, so there’s no guarantee that what turns up is dependable for medical direction; the results may show only a distorted version of reality. One example Holone uses is a scenario in which a person is deciding whether or not to have their child vaccinated. A publicized case of this is the 2014 measles outbreak in California; many blamed it on the spread of misinformation that led to lower vaccination rates. Health misinformation can also extend to other decisions, such as those relating to cancer treatment, diets or epidemic outbreaks.

There is no simple answer to this issue, because several challenges prevent people from accessing more trustworthy medical information. One problem is that the opaqueness of the filter algorithms can make people oblivious to the fact that what they are seeing is only a sliver of the real world and not objective. It is also not evident to people where they fall within the realm of filter bubbles and in what direction their information is biased, especially if they have been in a silo for a long time. Another dilemma that Holone presents is our lack of control over the content we see. Even if we do become conscious of our bubble, many of the prevalent social media sites don’t offer a way to intentionally shift back to center.

So what can we do to become objectively informed, especially when it comes to medical information? Several solutions have been proposed to help us regain our power. Munson et al. created a browser tool named Balancer that tracks the sites you visit and provides an overview of your reading habits and biases to increase awareness. Another interesting tool that could help combat misinformation is a Chrome add-on called Rbutr, which informs a user if what they are currently viewing has been disputed or contradicted elsewhere. A simpler place to start could be deleting your browser history or using DuckDuckGo when searching for information that will be used for health decisions.

The conversations surrounding filter bubbles seem to be mainly political in nature, but the scary reality is that these bubbles have graver implications. If you’re not paying attention, they, not you, can be what’s quietly driving your medical decisions. Luckily there are steps that you can – and should – take so that you’re privy to all the information you need to make an informed decision in your own best interest.

Sources

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4937233/#R7

https://link.springer.com/article/10.1007/s10676-015-9380-y

https://www.wired.com/story/facebook-vortex-political-polarization/

https://fs.blog/filter-bubbles/