Emotional Surveillance: Music as Medicine

Anonymous | July 7, 2022

Can streaming platforms uphold the Hippocratic oath? Spotify’s emotional surveillance patent exemplifies how prescriptive music could do more harm than good when it comes to consumers’ data privacy.

The pandemic changed the way we listen to music. In a period of constant uncertainty, many people turned to music, and in particular to calmer, more meditative music. During this time, playlists curated with lo-fi and nature sounds started popping up on Apple Music. This category is usually labeled ‘Chill’, but it goes by many different names. The idea of music and sound therapy remains at the forefront of listener behavior today, with a TikTok trend of sharing brown noise (which has deeper, lower sound waves than white noise and is closer to rain and storms). Brown noise is said to help alleviate symptoms of ADHD, and people who deal with anxiety listen to it as a sort of therapy.

The idea of listening to music as therapy is not new, but now there might be an AI tool feeding you the diagnosis. There is no cause for concern over someone being suggested a calming playlist. The bigger issue is the direction this could take us in the future, and how recommendation systems driven by audio surveillance dilute a user’s right to data privacy, especially when a platform wants to recommend music based on audio features that correspond to the user’s emotional state. This is the scenario raised by the patent that Spotify won back in 2021.

Spotify’s patent is a good case study for the direction in which many streaming services are headed. Using this example, we can unpack the ways in which a user’s data and privacy are at risk.

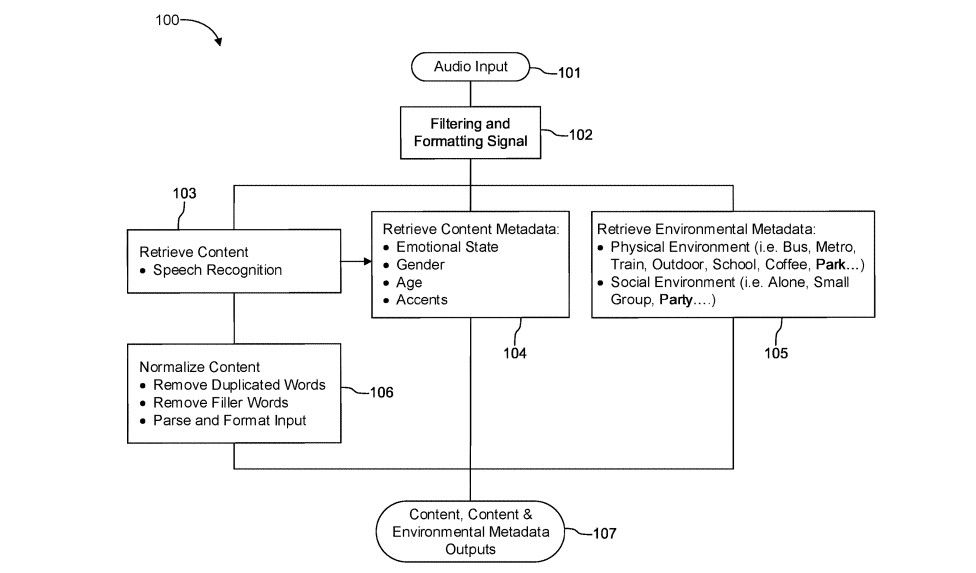

The specific language of the patent is as follows:

“There is retrieval of content metadata corresponding to the speech content, and environmental metadata corresponding to the background noise. There is a determination of preferences for media content corresponding to the content metadata and the environmental metadata, and an output is provided corresponding to the preferences.” [5]

Since this patent was granted, there has been significant uproar over its potential impacts. In layman’s terms, Spotify was seeking to use AI to detect tone, monitor your speech and background noise, and recommend music based on attributes its algorithm correlates with specific emotional states. For example, if you are alone, have been playing a lot of downtempo music, and have been telling your mom that you are feeling depressed, the system will categorize you as ‘sad’ and feed you more sad music.

Since winning the patent, Spotify has indicated that it has no immediate intention of using the technology. This is a good sign, because there are a few ways this idea could cause data privacy harm if it were used.

Users have a right to correct the data the app collects.

To meet regulatory standards, Spotify would need to disclose the emotions it attributes to you based on its audio analysis. If it thinks you are depressed when you are actually being sarcastic, how will you as a consumer correct that? Without a mechanism for doing so, Spotify would be introducing a potential privacy harm for its users. Spotify is known to sell user data to third parties, where it could be aggregated and distorted, and you could end up being pushed ads for antidepressants.
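
One way to picture a correctable inference is the minimal Python sketch below. Everything in it is hypothetical: the `MoodInference` record, its field names, and the `correct` method are invented for illustration and do not describe anything Spotify has built or documented. The idea is simply that the inferred label is stored alongside its basis, and that a user's correction always takes precedence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MoodInference:
    """Hypothetical record of an emotion label inferred from audio."""
    inferred: str                          # label assigned by the system
    basis: str                             # plain-language reason shown to the user
    user_correction: Optional[str] = None  # set only by the user

    def correct(self, label: str) -> None:
        # The user overrides the system's guess.
        self.user_correction = label

    def effective_label(self) -> str:
        # A user correction, when present, always wins over the inference.
        if self.user_correction is not None:
            return self.user_correction
        return self.inferred


record = MoodInference(inferred="sad", basis="tone of voice in recent speech")
record.correct("joking")
print(record.effective_label())  # prints "joking"
```

Anything passed on to advertisers or other third parties would then read `effective_label()` rather than the raw inference.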

Spotify could create harmful filter bubbles.

When a recommendation system is built to continually push content that matches what it thinks a user’s mood is, it risks prolonging potentially problematic emotional states. In this example scenario, continuing to listen to sad music when you are depressed can harm your emotional wellbeing rather than improve it. As with any scientific or algorithmic experimentation, we know from the Belmont Report that any feature that could affect a user’s or participant’s health must do no harm. A filter bubble (where you only get certain content) can mimic the harm done by YouTube’s recommendations, creating a feedback loop that maintains the negative emotional state.
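
The feedback loop can be made concrete with a toy Python sketch. It is an illustration under invented assumptions, not a model of any real recommender: the 0-to-1 mood score, the 0.4 threshold, the two-entry lookup table, and the premise that the listener always plays what is recommended are all hypothetical.

```python
from collections import deque

# Hypothetical mood score of the tracks recommended for each label
# (0.0 = very sad, 1.0 = very upbeat).
RECOMMENDED_SCORE = {"sad": 0.2, "upbeat": 0.8}


def infer_mood(recent_scores):
    """Label the listener from the average score of recently played tracks."""
    average = sum(recent_scores) / len(recent_scores)
    return "sad" if average < 0.4 else "upbeat"


def run_loop(initial_scores, rounds):
    """Assume the listener always plays the recommended track."""
    history = deque(initial_scores, maxlen=5)  # only the last 5 plays count
    labels = []
    for _ in range(rounds):
        label = infer_mood(history)
        labels.append(label)
        # The recommendation becomes the next data point for the inference.
        history.append(RECOMMENDED_SCORE[label])
    return labels


labels = run_loop([0.3, 0.2, 0.3, 0.1, 0.2], rounds=10)
print(set(labels))  # prints {'sad'}
```

Because each recommendation feeds the next inference, the label never changes in this toy setup, whatever the listener actually feels.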

Users have a right to know.

Part of Spotify’s argument for why this technology could benefit the user is that, without collecting this data passively from audio, the user must click buttons to select mood traits and build playlists. According to the Fair Information Practice Principles, Spotify must be transparent and involve the individual in the collection of their data. While a smooth user experience is extremely important, users still need to know that this data is being collected about them. Spotify should incorporate an opt-in consent mechanism if it were to move forward with this system.
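
As a rough sketch of what opt-in could mean in practice, the Python snippet below gates any audio analysis behind an explicit setting that defaults to off. The names `UserSettings`, `audio_mood_opt_in`, `classify_mood`, and `recommend` are hypothetical, and the classifier is a stand-in that returns a fixed label.

```python
from dataclasses import dataclass


@dataclass
class UserSettings:
    # Off by default: consent must be given explicitly, never assumed.
    audio_mood_opt_in: bool = False


def classify_mood(audio_sample: bytes) -> str:
    # Stand-in for a real classifier; always returns the same label.
    return "calm"


def recommend(settings: UserSettings, audio_sample: bytes, default_playlist: str) -> str:
    if not settings.audio_mood_opt_in:
        # Without consent the audio is never analysed.
        return default_playlist
    return f"{classify_mood(audio_sample)} mix"


no_consent = recommend(UserSettings(), b"", "Your usual mix")
with_consent = recommend(UserSettings(audio_mood_opt_in=True), b"", "Your usual mix")
print(no_consent)    # prints "Your usual mix"
print(with_consent)  # prints "calm mix"
```

The design choice worth noting is the default: a user who never touches the setting is never analysed, which is the difference between opt-in and opt-out.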

Spotify still owns the patent for this technology, and other platforms are considering similar trajectories. As the music industry explores the next wave of how we interact with music and sound, streaming platforms should be careful if they plan to build recommendation systems that leverage emotion metadata to curate content. This type of emotional surveillance dips into a realm of data privacy that has the potential to cause more harm than good. If any digital service providers move in this direction, they should consider the implications for data privacy.

References

[1] https://montrealethics.ai/discover-weekly-how-the-music-platform-spotify-collects-and-uses-your-data/

[2] https://www.musicbusinessworldwide.com/spotifys-latest-invention-will-determine-your-emotional-state-from-your-speech-and-suggest-music-based-on-it/

[3] https://www.stopspotifysurveillance.org/

[4] https://www.soundofsleep.com/white-pink-brown-noise-whats-difference/

[5] https://patents.justia.com/patent/10891948

[6] https://georgetownlawtechreview.org/wp-content/uploads/2018/07/2.2-Mulligan-Griffin-pp-557-84.pdf

[7] https://theartofhealing.com.au/2020/02/music-as-medicine-whats-your-recommended-daily-dose/

[8] https://www.digitalmusicnews.com/2021/04/19/spotify-patent-response/

[9] https://www.bbc.com/news/entertainment-arts-55839655