What is fair machine learning? Depends on your definition of fair.

By Anonymous | July 9, 2021

Machine learning models are being used to make increasingly complex and impactful decisions about people’s lives, which means that the mistakes they make can be equally complex and impactful. Even the best models will fail from time to time (after all, all models are wrong, but some are useful), but how and for whom they tend to fail is a topic that is gaining more attention.

One of the most popular and widely used metrics for evaluating model performance is accuracy. Optimizing for accuracy teaches machines to make as few errors as possible given the data they have access to and other constraints; however, chasing accuracy alone often fails to consider the context behind the errors. Existing social inequities are encoded in the data that we collect about the world, and when that data is fed to a model, it can learn to “accurately” perpetuate systems of discrimination that lead to unfair outcomes for certain groups of people. This is part of the reason behind a growing push for data scientists and machine learning practitioners to make sure that they include fairness alongside accuracy as part of their model evaluation toolkit.

Accuracy doesn’t guarantee fairness.

In 2018, Joy Buolamwini and Timnit Gebru published Gender Shades, which demonstrated how overall accuracy can paint a misleading picture of a model’s effectiveness across different demographics. In their analysis of three commercial gender classification systems, they found that all three models performed better on male faces than on female faces, and better on lighter faces than on darker faces. Importantly, they noted that evaluating accuracy with intersectionality in mind revealed that even for the best classifier, “darker females were 32 times more likely to be misclassified than lighter males.” This discrepancy resulted from training datasets that lacked phenotypic diversity, as well as from insufficient attention paid to creating facial analysis benchmarks that account for fairness.

Buolamwini and Gebru’s findings highlighted the importance of disaggregating model performance evaluations to examine accuracy not only within sensitive categories, such as race and gender, but also across their intersections. Without this kind of intentional analysis, we may continue to produce and deploy highly accurate models that nonetheless distribute this accuracy unfairly across different populations.
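
As a rough illustration of what disaggregating an evaluation can look like, here is a minimal Python sketch. The function name `accuracy_by_group`, the field names, and the eight toy records are all assumptions made up for this example; they are not data or code from Gender Shades.

```python
from collections import defaultdict

def accuracy_by_group(records, keys):
    """Return {group: accuracy}, grouping records by the given attribute keys.

    Passing an empty list of keys gives the overall accuracy under the key ().
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        group = tuple(r[k] for k in keys)
        total[group] += 1
        correct[group] += int(r["label"] == r["pred"])
    return {g: correct[g] / total[g] for g in total}

# Hypothetical toy predictions from an imagined gender classifier.
records = [
    {"skin": "lighter", "gender": "male", "label": "male", "pred": "male"},
    {"skin": "lighter", "gender": "male", "label": "male", "pred": "male"},
    {"skin": "lighter", "gender": "female", "label": "female", "pred": "female"},
    {"skin": "lighter", "gender": "female", "label": "female", "pred": "female"},
    {"skin": "darker", "gender": "male", "label": "male", "pred": "male"},
    {"skin": "darker", "gender": "male", "label": "male", "pred": "male"},
    {"skin": "darker", "gender": "female", "label": "female", "pred": "male"},
    {"skin": "darker", "gender": "female", "label": "female", "pred": "male"},
]

overall = accuracy_by_group(records, [])
by_skin = accuracy_by_group(records, ["skin"])
by_gender = accuracy_by_group(records, ["gender"])
by_intersection = accuracy_by_group(records, ["skin", "gender"])

print(overall)
print(by_skin)
print(by_gender)
print(by_intersection)
```

In this toy data the overall accuracy is 75%, and looking at skin type or gender alone shows 50% for the worse-off group on each axis. Only the intersectional breakdown shows that every error falls on darker-skinned women.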

What does fairness mean?

Recognizing the importance of evaluating fairness across various sensitive groups is the first step, but how do we measure fairness in order to optimize for it in our models?

Researchers have proposed several different definitions. One common measure is statistical or demographic parity. Suppose we had an algorithm that screened job applicants based on their resumes. We would achieve statistical parity across gender if the fraction of acceptances from each gender category was the same. In other words, if the model accepted 40% of the female applicants, it should accept roughly 40% of the applicants from each of the other gender categories as well.

Another definition, known as predictive parity, would ensure similar fractions of correct acceptances among the applicants accepted from each gender category (i.e., if 40% of the accepted female applicants were true positives, a similar percentage of true positives should be observed among accepted applicants in each gender category).

A third notion of fairness is error rate balance, which we would achieve in our scenario if the false positive and false negative rates were roughly the same across gender categories.
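
To make the three definitions concrete, the sketch below computes the quantity each one compares, for two groups of applicants. Everything here is an assumption for illustration: the helper `group_rates`, the group names, and the toy labels (1 means qualified or accepted, 0 means not) are invented, and a real analysis would use far more data.

```python
def _ratio(numerator, denominator):
    """Divide, returning None when the rate is undefined (empty denominator)."""
    return numerator / denominator if denominator else None

def group_rates(y_true, y_pred):
    """Compute the per-group quantities behind the three fairness definitions.

    y_true: 1 if the applicant was actually qualified, else 0.
    y_pred: 1 if the model accepted the applicant, else 0.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return {
        "selection_rate": _ratio(tp + fp, len(pairs)),  # statistical parity
        "ppv": _ratio(tp, tp + fp),                     # predictive parity
        "fpr": _ratio(fp, fp + tn),                     # error rate balance
        "fnr": _ratio(fn, fn + tp),                     # error rate balance
    }

# Hypothetical toy data: ten applicants per group.
group_a = group_rates(
    y_true=[1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
    y_pred=[1, 1, 1, 0, 1, 0, 0, 0, 0, 0],
)
group_b = group_rates(
    y_true=[1, 1, 0, 0, 0, 0, 0, 0, 0, 0],
    y_pred=[1, 1, 1, 1, 0, 0, 0, 0, 0, 0],
)

for name, rates in (("group_a", group_a), ("group_b", group_b)):
    print(name, rates)
```

Both toy groups are accepted at a rate of 40%, so statistical parity holds, yet the share of accepted applicants who were actually qualified differs (75% versus 50%), and so do the error rates. Satisfying one definition says little about the others.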

These are a few of many proposed mathematical definitions of fairness, each of which has its own advantages and drawbacks. Some definitions can even be mutually incompatible, adding to the difficulty of evaluating fairness in real-world algorithms. A well-known example is the debate surrounding COMPAS, a recidivism prediction tool that did not achieve error rate balance across Black and White defendants but did satisfy the requirements for predictive parity. In fact, because the base recidivism rates of the two groups differed, researchers proved that no imperfect predictor could satisfy both definitions at once. This led to disagreement over whether or not the algorithm could be considered fair.
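
The conflict between predictive parity and error rate balance can be checked with a little arithmetic. Writing p for a group's base rate, the definitions of the confusion-matrix quantities imply FPR = p/(1-p) × (1-PPV)/PPV × (1-FNR). The sketch below plugs in made-up numbers (they are not the actual COMPAS figures, and `implied_fpr` is a name invented for this example) to show that two groups with the same positive predictive value and the same false negative rate are forced to have different false positive rates once their base rates differ.

```python
def implied_fpr(base_rate, ppv, fnr):
    """False positive rate forced by the identity
    FPR = p / (1 - p) * (1 - PPV) / PPV * (1 - FNR),
    which follows from the definitions of PPV, FNR, and FPR.
    Assumes 0 < base_rate < 1 and ppv > 0.
    """
    return base_rate / (1 - base_rate) * (1 - ppv) / ppv * (1 - fnr)

# Hypothetical groups: identical PPV and FNR, different base rates.
fpr_low_base = implied_fpr(base_rate=0.3, ppv=0.6, fnr=0.35)
fpr_high_base = implied_fpr(base_rate=0.5, ppv=0.6, fnr=0.35)

print(f"FPR with base rate 0.3: {fpr_low_base:.3f}")
print(f"FPR with base rate 0.5: {fpr_high_base:.3f}")
```

With these inputs the group with the higher base rate ends up with a false positive rate of roughly 0.43, against roughly 0.19 for the other group. Equalizing the false positive rates would require giving up equal PPV or equal false negative rates.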

Fairness can depend on the context.

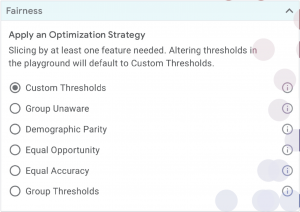

With multiple (and sometimes mutually exclusive) ways to measure fairness, choosing which one to apply requires consideration of the context and tradeoffs. Optimizing for fairness often comes at some cost to overall accuracy, which means that model developers might consider setting thresholds that balance the two.

In certain contexts, these thresholds are encoded in legal rules or norms. For example, the Equal Employment Opportunity Commission uses the four-fifths rule as a rule of thumb for statistical parity in employment decisions: a selection rate for any race, sex, or ethnic group that is less than 80% of the rate for the group with the highest selection rate is generally regarded as evidence of adverse impact.
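
A four-fifths check is simple to sketch in code. The function names and the selection rates below are hypothetical, and this is only an illustration of the ratio test, not a statement of how any agency applies it in practice.

```python
def adverse_impact_ratios(selection_rates):
    """Ratio of each group's selection rate to the highest group's rate.

    Assumes at least one group has a nonzero selection rate.
    """
    top = max(selection_rates.values())
    return {group: rate / top for group, rate in selection_rates.items()}

def passes_four_fifths(selection_rates, threshold=0.8):
    """True if every group's ratio is at least the threshold (80% by default)."""
    ratios = adverse_impact_ratios(selection_rates)
    return all(ratio >= threshold for ratio in ratios.values())

# Hypothetical selection rates for two groups of applicants.
rates_fail = {"group_a": 0.50, "group_b": 0.35}
rates_pass = {"group_a": 0.50, "group_b": 0.45}

print(adverse_impact_ratios(rates_fail), passes_four_fifths(rates_fail))
print(adverse_impact_ratios(rates_pass), passes_four_fifths(rates_pass))
```

In the first case group_b is selected at about 70% of group_a's rate, which falls below the 80% threshold; in the second the ratio is about 90%, which clears it.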

In other contexts, the balance between fairness and accuracy is left to the discretion of the model makers. Platforms such as Google’s What-If Tool, IBM’s AI Fairness 360, and other automated bias detection tools can aid in visualizing and understanding that balance, but it is ultimately up to model builders to evaluate their systems based on context-appropriate definitions of fairness in order to help mitigate the harms of unintentional bias.