Public Privacy: Is Digital Privacy Truly Attainable?

Shanie Hsieh | June 24, 2022

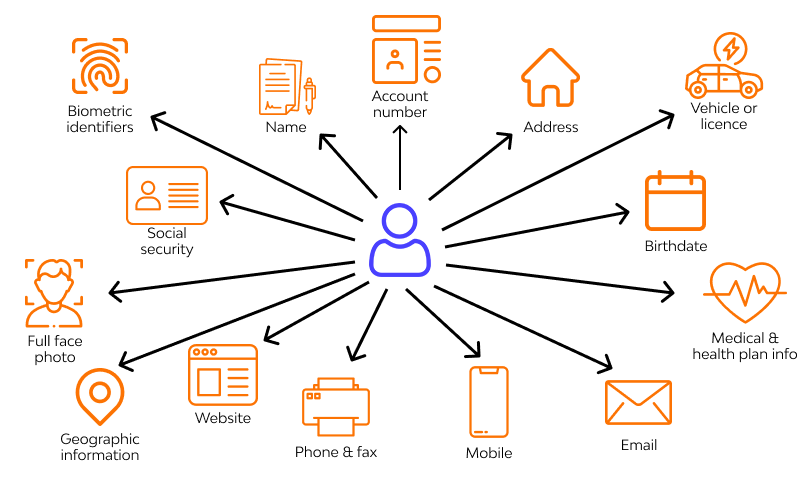

With the rise of the digital age, it has become increasingly difficult to avoid leaving digital footprints. In order to keep up with new technologies, users share more and more of their data with companies and products. The question is how we can properly protect data and privacy while continuing to innovate.

Privacy Policies and Frameworks

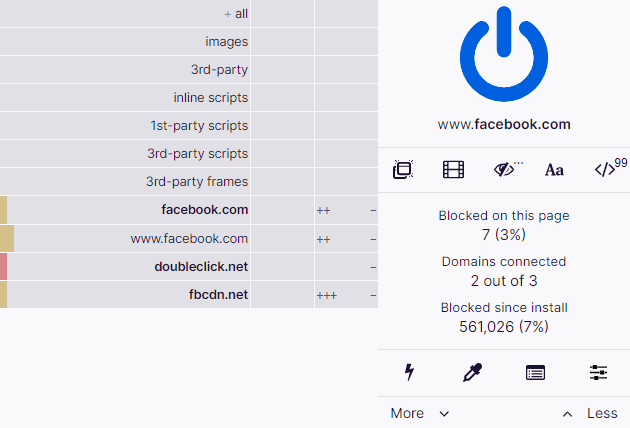

Guidelines are already in place to protect privacy. The Federal Trade Commission Act (FTCA) [1] is one that is commonly referenced. Its important principles cover what can be considered deceptive practices, consumer choice, and transparency in privacy policies. The General Data Protection Regulation (GDPR) [2] and California Consumer Privacy Act (CCPA) [3] serve a similar purpose in giving users power over their data. Multiple other frameworks go into more detail on protecting user information, but these are meant as general guidelines that sites can easily bypass.

Who Reads Privacy Policies

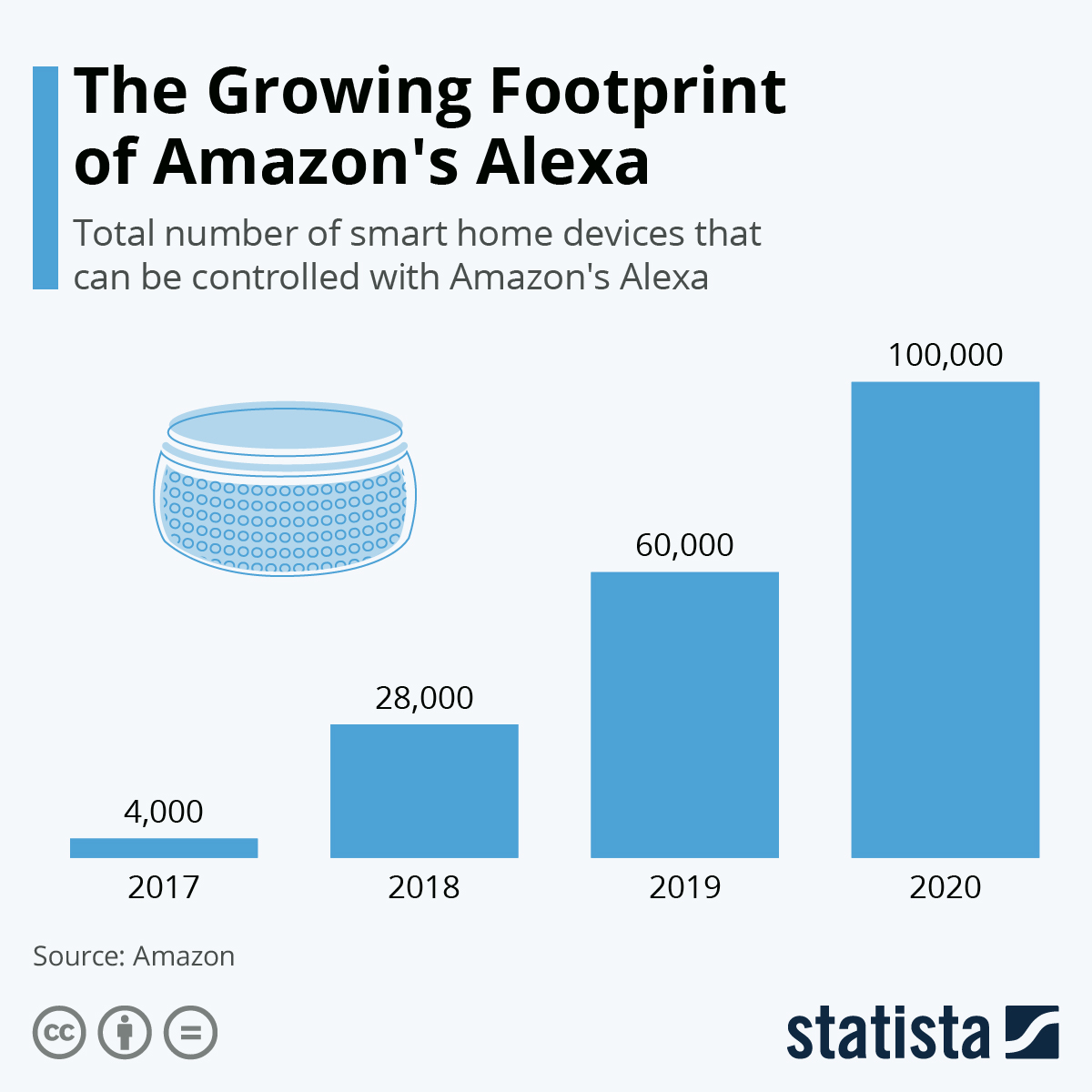

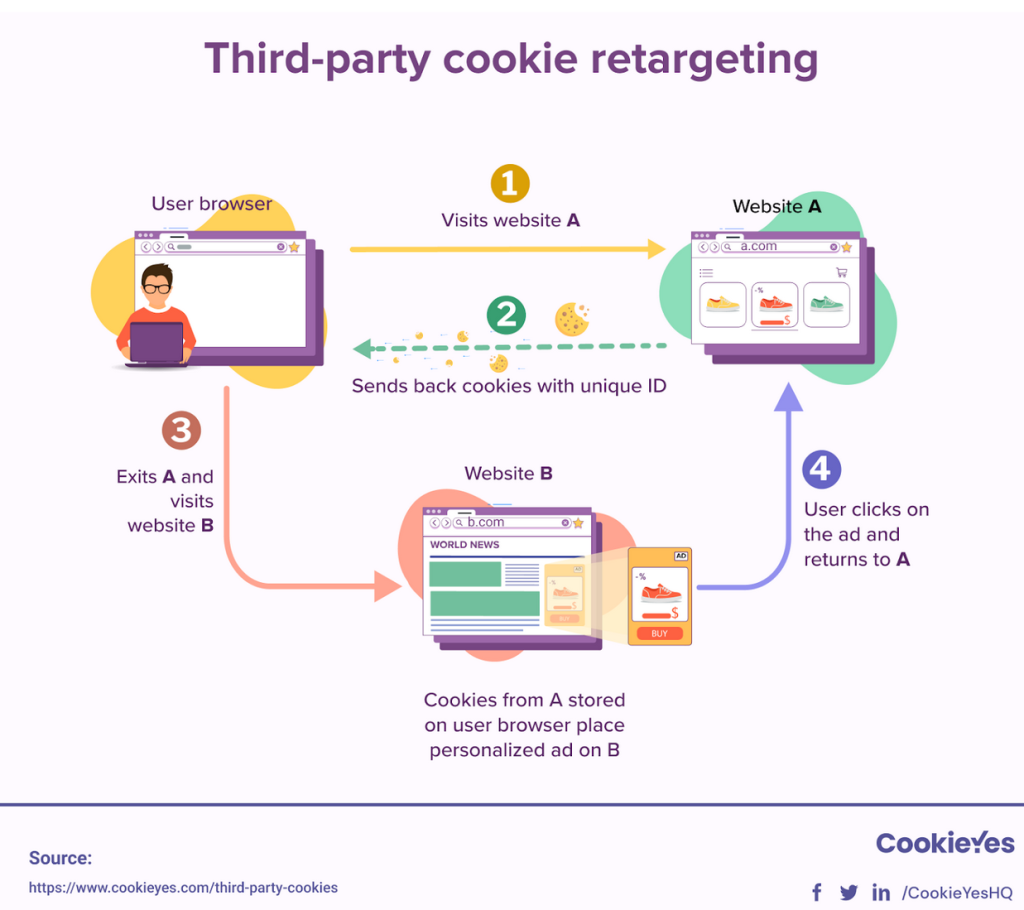

Nearly every application, product, and website these days has some sort of Terms of Service and Privacy Policy, written in order to gain users’ trust and grow the platform. These documents often run to thousands of words; Twitter’s privacy policy, for example, is 4,500 words long. It is common knowledge, and even joked about, that most people click “I accept” without taking a second glance at what they are agreeing to. An article from The Washington Post [4] details this issue and offers a new approach: persuading companies to change the format of privacy policies so that users can better understand what they are consenting to. If consumers aren’t reading the policies that are put in place for their own protection, what purpose do these privacy policies truly serve?

Image 1: Pew Research shows how often users read privacy policies. (From Pew Research Center)

Trust In The Digital-verse

An article published in Forbes [5] states, “In the digital world, trust issues are often data issues.” The article goes on to advocate for companies to do their work ethically so that they do not breach any user’s trust and, in the long term, all users can trust what they are agreeing to across the web. In an ideal world, this would be our course of action to respect all people and their privacy. Realistically, however, we have seen evidence of breaches of privacy and of manipulation through the use of personal data. We cannot rely solely on respect and trust to enact effective privacy policies and protection.

The Balance Between Privacy And Innovation

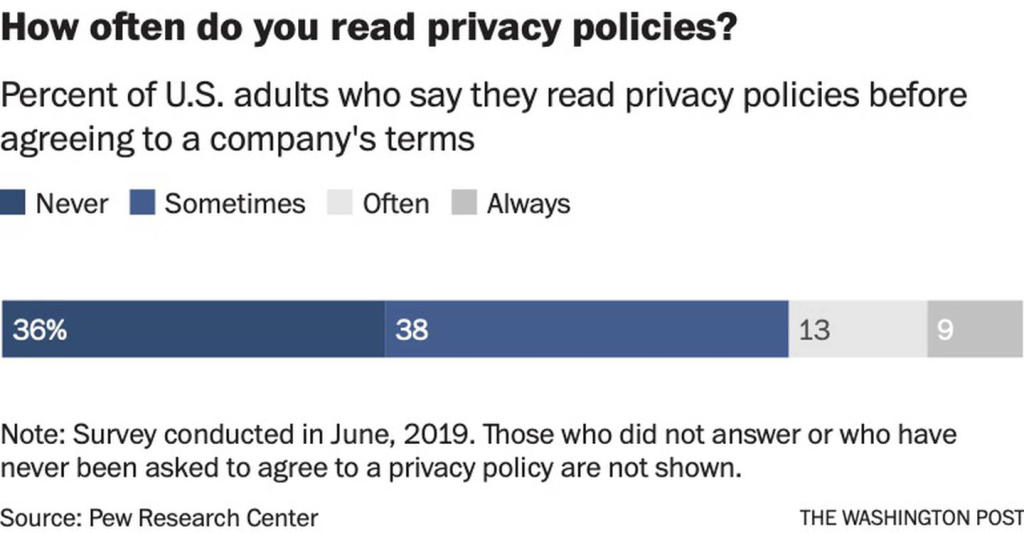

As the basis of algorithms and development, data is the backbone of technological advancement. Today’s biggest companies, such as Google, Apple, and Amazon, have created some of the most influential products our world has ever seen, but at the cost of analyzing their users’ data, which some of those users had no idea they had consented to. A quote from an interview on CBN News [6] says, “We don’t realize how that data can be used to manipulate us, to control us, to shape our perception of truth and reality, which I think is some of the biggest questions in terms of technology and what it’s doing to us: is altering our perception of reality.” The leading question now is, “Is privacy… real?”

Image 2: Cartoon depicting a person who had unknowingly consented to sharing personal information. (Created by Steve Sack on Cagle Cartoons)

What Can We Do?

As users, all we can do is read these policies carefully. If we assume that we can trust the companies that publish their privacy policies, then it is our job to read what is written and not obliviously consent to something we may not truly agree with. As for companies, they should make their policies simpler to understand. Twitter, for example, tried to turn its privacy policy into a game in an attempt to help its users understand the document. Overall, as we work towards a better future, users and companies should share a mutual respect in order to find this balance between privacy and technology.

References

[1] Federal Trade Commission Act Section 5: Unfair or Deceptive Acts or Practices

[2] GDPR

[3] California Consumer Privacy Act (CCPA)

[4] I tried to read all my app privacy policies. It was 1 million words.

[5] Trust In The Digital Age Requires Data Ethics And A Data Literate Workforce

[6] Is Privacy the Tradeoff for Convenience in the Age of Digital Worship? | CBN News

Images

[1] Pew Research

[2] Cartoon
