Privacy Concerns for Smart Speakers

By Anonymous | June 18, 2021

By the end of 2019, an estimated 60 million Americans owned at least one smart speaker at home. In the 7 years since Amazon’s Alexa launched in 2014, people have become more reliant on smart speakers for mundane tasks such as answering questions, making calls, or scheduling appointments without picking up a phone. Over the same home network, these devices can also connect to core parts of the home, such as the lighting, the temperature controls, or even the locks. Although these devices bring many benefits to everyday life, one can’t help but question the downsides, especially when it comes to privacy.

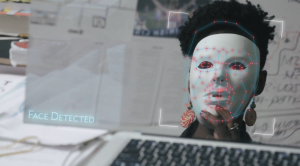

For those who own a smart speaker such as Google Home or Alexa, how many times have you noticed the system responding to your conversation without you actually calling on it? If your next question is whether smart devices are always listening, I am sorry to inform you that yes, they are.

Google Home

Even though Google Home is always listening, it is not recording your every conversation. Most of the time, it is in standby mode, waiting for you to activate it by saying “Hey Google” or “Okay Google.” However, you may notice that Google Home often gets activated accidentally, without anyone saying an activation phrase. This happens because something in your conversation sounds similar to an activation phrase, which can trigger the device to start recording.
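
To make the standby behavior concrete, here is a toy sketch of how a near-miss phrase could open a recording window. It is not how Google Home works internally: real devices match audio, while this example compares text with Python's `difflib` as a stand-in, and the threshold, function names, and sample sentences are all made up for illustration.

```python
from difflib import SequenceMatcher

# Activation phrases named in the article, lowercased for comparison.
WAKE_PHRASES = ("hey google", "okay google")

# Hypothetical cutoff: how close a heard phrase must be to count as a wake word.
THRESHOLD = 0.8


def similarity(heard: str, phrase: str) -> float:
    """Text similarity between 0 and 1 (a stand-in for acoustic matching)."""
    return SequenceMatcher(None, heard.lower(), phrase).ratio()


def is_activated(heard: str, threshold: float = THRESHOLD) -> bool:
    """True if the heard phrase is close enough to any wake phrase."""
    return any(similarity(heard, p) >= threshold for p in WAKE_PHRASES)


def process(utterances: list[str]) -> list[str]:
    """Return what gets recorded: only the utterance right after an activation."""
    recorded = []
    recording = False
    for heard in utterances:
        if recording:
            recorded.append(heard)   # the device is awake, so this is captured
            recording = False        # then it drops back to standby
        elif is_activated(heard):
            recording = True         # wake word (or a near miss) detected
    return recorded


if __name__ == "__main__":
    living_room = ["what is for dinner", "hey googol", "my doctor called"]
    print(process(living_room))
```

In this toy run nobody says an activation phrase, yet “hey googol” is close enough to wake the device, so the sentence that follows ends up recorded. Raising the threshold would reduce accidental activations at the cost of missing genuine ones.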

In a study, researchers at Northeastern University and Imperial College London found that the Google Home Mini averaged 0.95 activations per hour while The West Wing was playing, triggered by dialogue that resembled a potential wake word. This high rate is problematic for privacy, especially in the form of information collection and surveillance. Users aren’t consenting to being watched, listened to, or recorded while having conversations in their own home, and the possibility can often make them feel inhibited or creeped out.
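
To put the 0.95 figure in perspective, a quick back-of-the-envelope calculation helps. The sketch below simply multiplies the reported rate by a number of hours. It assumes the rate stays constant and that household audio behaves like the TV show used in the study, neither of which the study claims, and the function name is made up for illustration.

```python
# Average accidental activations per hour reported for the Google Home Mini
# while The West Wing was playing.
ACTIVATIONS_PER_HOUR = 0.95


def expected_activations(hours: float, rate: float = ACTIVATIONS_PER_HOUR) -> float:
    """Expected number of accidental activations, assuming a constant rate."""
    if hours < 0:
        raise ValueError("hours must be non-negative")
    return rate * hours


if __name__ == "__main__":
    # Example: an evening with four hours of TV dialogue in the background.
    print(round(expected_activations(4), 2))
```

At that rate, a four-hour evening of similar dialogue would produce roughly four unintended activations.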

Alexa

Alexa, on the other hand, has had its own fair share of privacy invasions. In 2018, an Amazon customer in Germany was mistakenly sent about 1,700 audio files from someone else’s Echo, which provided enough information to name and locate the unfortunate user and his girlfriend. Amazon attributed this to human error and offered no other explanation. It has also been revealed that the top five smart home device companies have been using human contractors to analyse a small percentage of voice-assistant recordings. Although the recordings are anonymised, they often contain enough information to identify the user, especially when they capture medical conditions or other private conversations.

How to secure the privacy of your smart speaker

Based on some tips from Spy-Fy, here are some steps you can take to secure your Google Home device. Other devices should have a similar process.

● Check to see what the device has just recorded by visiting the Assistant Activity page.

● If you ever accidentally activate the Google Home, just say “Hey Google, that wasn’t for you” and the assistant will delete what was recorded.

● You can set up automatic data deletion on your account or tell your assistant “Hey Google, delete what I said this week”.

● Turn off the device if you are ever having a private conversation with someone. This will ensure that the data is not being recorded without your permission.

Another tip is to limit what the smart speaker is connected to in case of a data breach. The best option is to put smart devices on a separate Wi-Fi network, away from the devices that hold your sensitive information.

References:

1. https://routenote.com/blog/smart-speakers-are-the-new-big-tech-battle-and-big-privacy-debate/

2. https://www.securityinfowatch.com/residential-technologies/smart-home/article/21213914/the-benefits-and-security-concerns-of-smart-speakers-in-2021

3. https://spy-fy.com/google-home-and-your-privacy/

4. https://moniotrlab.ccis.neu.edu/smart-speakers-study-pets20/

5. https://www.theguardian.com/technology/2019/oct/09/alexa-are-you-invading-my-privacy-the-dark-side-of-our-voice-assistants