Digital Data and Privacy Concerns

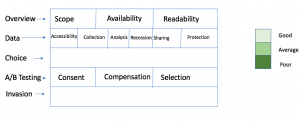

Fast-paced digital changes are happening every second, and new forms of services are being built that use data in different ways. At the same time, more and more people are becoming concerned about their privacy as more data about them is collected and analyzed. But are privacy regulations able to keep up with the pace of data collection and usage? Are the existing efforts put into privacy notices effective in communicating with users and in forming agreements between services and their users?

An estimated 77% of websites now post a privacy policy. These policies differ greatly from site to site, and often address issues that are different from those that users care about. They are in most cases the users’ only source of information.

Policy Accessibility

According to a study at the Georgia Institute of Technology that examined the format of online privacy notices, out of the 64 sites offering a privacy policy, 55 (86%) offered a link to it from the bottom of their homepage. Three sites (5%) offered it as a link in a left-hand menu, while two (3%) offered it as a link at the top of the page.

While there are regulations requiring that a privacy notice be given to users, there is no explicit regulation about how or where it should be communicated. The study shows that most sites tend not to emphasize the privacy notice before users start accessing the data. Rather, because sites lack incentives to make the notice accessible, it is pushed to the least viewed section of the home page, since most users navigate away from the home page before reaching the bottom.

In my final project I conducted a survey designed to collect accessibility feedback directly from users who interact with new services regularly. The data supports the above observations from another perspective: notices are designed with much lower priority than the other content presented to users, so only a very small percentage of users actually read them.

Policy Readability

Another gap in the privacy regulations is the readability of the notice. In the course of W231, one of our assignments was to read various privacy notices, and in the discussion we saw very different privacy notice structures and approaches from site to site. In particular, a general pattern is the frequent use of strong and intimidating language that has strong legal backing. This makes the notice content hard for the general population to understand, even though it does comply with the regulations to a large extent.

Policy Content

From the examination of different privacy notices during W231, it is clear that even among service providers who intend to comply with privacy regulations, vague language and missing details are common. One frequently seen pattern is wording such as ‘may or may not’ and ‘could’. Combined with the accessibility issue, and with users’ differing mental states at different stages of using a service, this means few users actually seek clarification from service providers before they virtually sign the privacy agreement. The lack of standards or control over privacy policy content puts users at a disadvantage when they encounter privacy-related issues, because the content has already been agreed to.

To summarize, the existing regulations on online privacy agreements are largely at the stage of getting from ‘zero’ to ‘one’, which is an important step as the digital data world evolves. However, considerable improvement is still needed to close the gap between existing policies and an ideal situation in which service providers are incentivized to make the policy agreement accessible, readable, and reliable.