GDPR Will Transform Insurance Industry’s Business Model

By Amit Tyagi | October 21, 2018

The European Union-wide General Data Protection Regulation, or GDPR, became enforceable on May 25, 2018, with significant penalties for non-compliance. In one sweep, GDPR harmonizes data protection rules across the EU and gives individuals greater rights over how their data is used. GDPR will radically reshape how companies can collect, use and store personal information, giving people the right to know how their data is used and to decide whether it is shared or deleted. Companies face fines of up to 4 per cent of global annual turnover or €20m, whichever is greater.
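The "whichever is greater" rule is easy to misread, so here is a minimal sketch of the arithmetic. The function name `max_gdpr_fine` is purely illustrative, and the result is only the upper ceiling described above: actual fines are set case by case by the supervisory authorities and can be far lower.

```python
from decimal import Decimal

FIXED_CAP_EUR = Decimal("20000000")  # the EUR 20m figure
TURNOVER_RATE = Decimal("0.04")      # 4 per cent of global annual turnover


def max_gdpr_fine(global_annual_turnover_eur: Decimal) -> Decimal:
    """Return the ceiling on a fine: the greater of EUR 20m or 4% of turnover."""
    return max(FIXED_CAP_EUR, TURNOVER_RATE * global_annual_turnover_eur)


# A smaller insurer with EUR 100m turnover: 4% is EUR 4m, so the EUR 20m figure applies.
small = max_gdpr_fine(Decimal("100000000"))

# A large insurer with EUR 2bn turnover: 4% is EUR 80m, which exceeds EUR 20m.
large = max_gdpr_fine(Decimal("2000000000"))
```

The practical point is that the €20m figure acts as a floor on the ceiling: for any company with turnover below €500m, the maximum exposure is still €20m.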

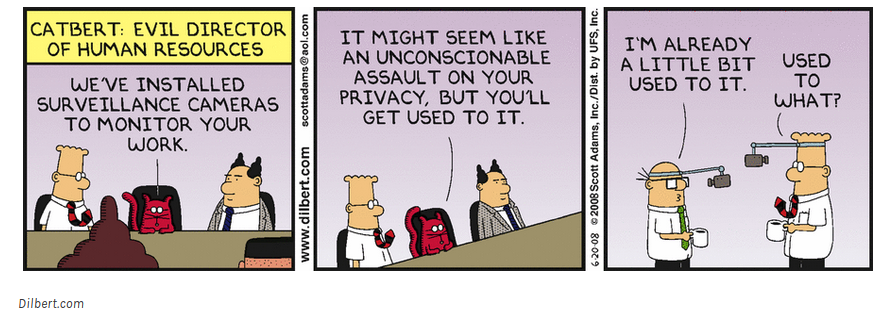

To comply with GDPR, companies across industries are strengthening their data usage and protection policies and procedures, revamping old IT systems so that they have the functionality GDPR requires, and reaching out to customers to obtain the necessary consents.

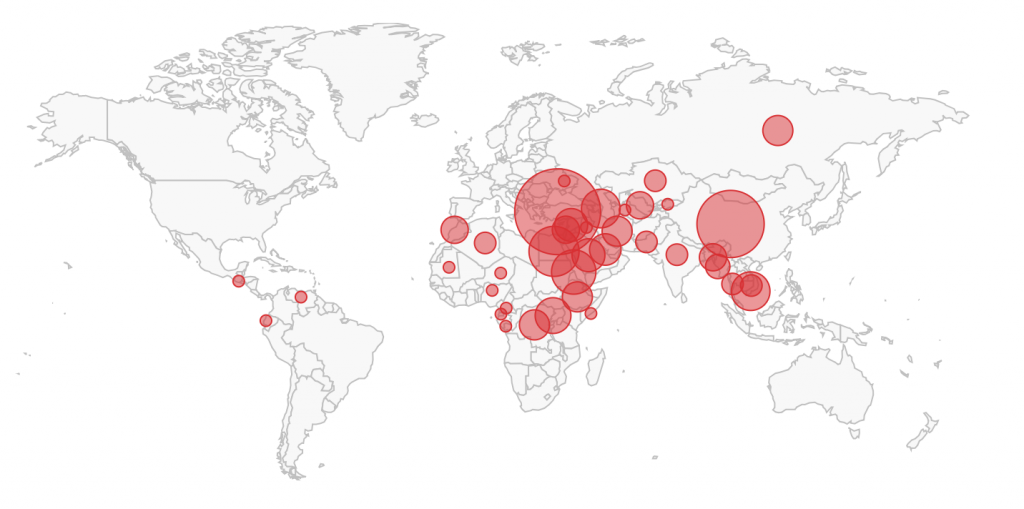

However, GDPR will also require a fundamental rethink of business models for some industries, especially those that rely heavily on personal data to make business and pricing decisions. A case in point is the insurance industry. Insurers manage and underwrite risks. Collecting, storing, processing and analyzing data are central to their business model. The data insurers collect goes beyond basic personal information: it includes sensitive information such as health records, family and genetic history of illness, criminal records, accident-related information, and much more.

GDPR is going to affect insurance companies in many ways. Start with pricing. Setting the right price for underwriting risks relies heavily on data. With the data protection and usage restrictions in GDPR, insurers will have to revisit their pricing models. This may have an inflationary effect on insurance prices, which is not a good thing for consumers. The effect will be compounded by ‘data minimization’, a core principle of GDPR that limits the amount of data companies can lawfully collect.

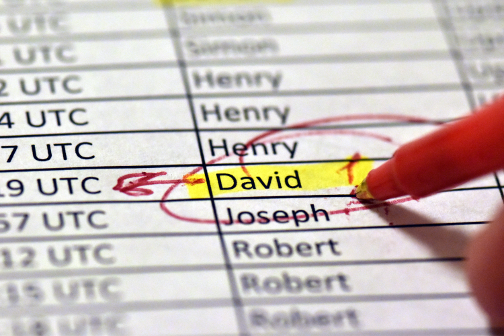

Insurance companies typically store their data for long periods. This aids them in pricing analytics and customer segmentation. With the right to erasure, customers can ask insurers to erase their personal data and claims history. These requests might come disproportionately from customers with an unfavorable claims history, leading to adverse selection due to information asymmetry.

Insurance fraud is another area that will be affected by GDPR. Insurance companies protect themselves from fraudulent claims by analyzing myriad data points, including criminal convictions. With limits on the types of data they can lawfully use, insurance fraud may well spike.

Insurance companies will also have to rethink their internal processes and IT systems, which were built for a pre-GDPR era. Most decisions in the insurance industry are automated, including whether to issue a policy, how much premium to charge, and whether to process a claim fully or partially. Under GDPR, customers can lawfully request human intervention in such decisions.

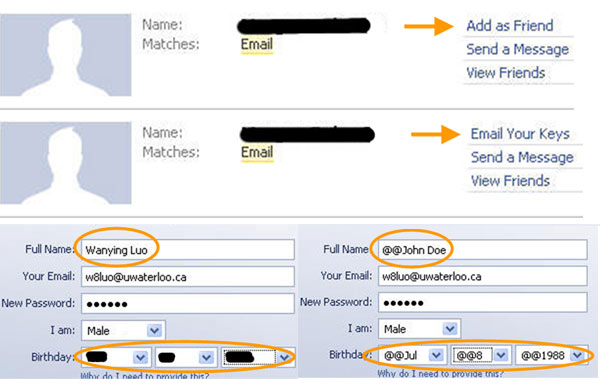

GDPR gives customers the right to receive the personal data an insurer holds about them, or to have it transmitted to another insurer, in a structured, commonly used and machine-readable format. This will be a challenge, as insurers will have to produce interoperable data formats from disparate legacy IT systems. Further, this has to be done free of charge. This is likely to lower profitability, since easier switching will increase competition among insurers.
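As a rough illustration of what a "structured, commonly used and machine-readable format" could look like, the sketch below serializes a customer record to JSON. Everything in it is an assumption made for the example: the `PolicyRecord` and `CustomerExport` types, their fields, and the choice of JSON itself. GDPR does not prescribe a specific format, and a real export would have to gather data from many source systems.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class PolicyRecord:
    # Hypothetical fields; a real policy record would carry far more detail.
    policy_id: str
    product: str
    start_date: str  # ISO 8601 date string, e.g. "2017-03-01"


@dataclass
class CustomerExport:
    # Hypothetical container for everything held about one customer.
    customer_id: str
    name: str
    policies: List[PolicyRecord] = field(default_factory=list)


def export_customer_data(export: CustomerExport) -> str:
    """Serialize the customer's data to JSON with stable key ordering."""
    return json.dumps(asdict(export), indent=2, sort_keys=True)


record = CustomerExport(
    customer_id="C-1001",
    name="Jane Example",
    policies=[PolicyRecord("P-1", "motor", "2017-03-01")],
)
payload = export_customer_data(record)
```

The receiving insurer can parse `payload` with any standard JSON library, which is the interoperability the portability right is aiming for.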

GDPR mandates that data should be retained only as long as is necessary for the purpose for which it was collected, after which it needs to be deleted or anonymized. If stored for a longer duration, the data should be pseudonymized. This will require significant system changes, which will be a huge challenge for insurance companies as they rely on disparate systems and data sources, all of which will have to change to meet GDPR requirements.
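To make the retention and pseudonymization idea concrete, here is a minimal sketch under stated assumptions. The helper names, the fixed retention period in days, and the use of a keyed hash (HMAC-SHA256) as the pseudonymization technique are all choices made for this example, not requirements of the regulation. Note that pseudonymized data is still personal data, because whoever holds the key can re-link it.

```python
import hashlib
import hmac
from datetime import date


def pseudonymize(customer_id: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash.

    The same input and key always give the same pseudonym, so records can
    still be linked for analytics without exposing the original identifier.
    """
    return hmac.new(secret_key, customer_id.encode("utf-8"), hashlib.sha256).hexdigest()


def retention_expired(collected_on: date, today: date, retention_days: int) -> bool:
    """Return True once the record is older than the retention period."""
    return (today - collected_on).days > retention_days


# Hypothetical key for illustration only; a real key belongs in a secrets
# store, kept separately from the pseudonymized data.
key = b"example-key-not-for-production"

record = {"customer_id": "C-1001", "collected_on": date(2010, 1, 15)}

# Assumed retention period of seven years, purely for the example.
if retention_expired(record["collected_on"], date(2018, 10, 21), retention_days=7 * 365):
    record["customer_id"] = pseudonymize(record["customer_id"], key)
```

The difficulty the article points to is that a rule like this has to be applied consistently in every system that holds a copy of the record.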

Though insurers may be acutely affected by GDPR, their path to compliance should follow a disciplined approach: revisiting systems and processes to assess readiness for the regulation, and investing in filling the gaps. Some changes may be big, such as data retention and privacy by design, while others may be more straightforward, such as providing privacy notices. In all cases, effective change management is key.