Members: Andrew Chong, Owen Hsiao, Vivian Liu

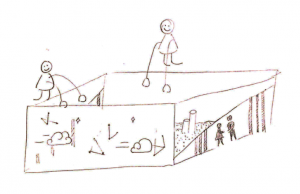

Our final project will be a music sandbox that lets users engage with music haptically and visually. It is a scaled-down, more interactive version of the installation chamber we had in mind for our midterm project.

Hands can make music and visuals by playing in the sand.

People interact by sticking their hands in the box and playing around with the sand. The box thus becomes an instrument that transduces hand motion into visuals (Processing, LED lights) and changes in the melody.

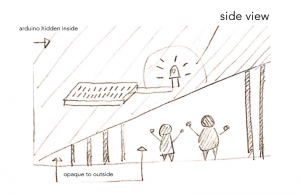

Only one wall will be augmented with a Processing display. The other two will be laser-cut with negative-space silhouettes. LED lights will shine through these holes and change color. The point of the visuals is to invite people to engage with the box and to make the results of their motion easier to see.

This is the side view. The silhouettes represent negative space through which the colored LED light would flow. Perhaps they won’t be negative space at all but could instead be made of some semi-opaque material, an idea inspired by Cesar’s talk today.

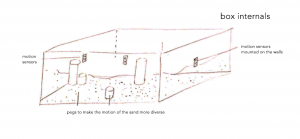

To track the motion, we will be using the same motion sensors we mentioned in our previous presentation. They will be mounted on each of the three non-Processing walls.

Within the box, besides the sand, there will also be pegs. These scatter the sand when it is thrown down, which gives a larger range of motion.

This is a view of the box ignoring the Processing wall and the side walls.

The changes in music will be simple and will most likely be changes in speed. For example, if there is more motion at one wall, the melody will be played at a faster tempo (less time between each note).
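The speed mapping above could be sketched roughly as follows. This is only an illustration, not our actual patch: it assumes the motion reading has already been normalized to a value between 0 and 1, and the function name `note_interval` and the `slow` and `fast` interval values are hypothetical placeholders we would tune by ear.

```python
def note_interval(motion, slow=0.5, fast=0.125):
    """Return the seconds to wait between notes for a given motion level.

    Assumptions (not from the project spec): `motion` is a sensor reading
    normalized to the range 0..1, and `slow` / `fast` are placeholder
    intervals for "no motion" and "maximum motion".
    """
    # Clamp out-of-range readings so a noisy sensor cannot break the tempo.
    level = min(max(motion, 0.0), 1.0)
    # Linear interpolation: more motion means less time between notes.
    return slow + (fast - slow) * level


if __name__ == "__main__":
    for reading in (0.0, 0.5, 1.0):
        print(reading, note_interval(reading))
```

A linear mapping is the simplest choice. If small movements turn out to feel unresponsive, a curved mapping could be swapped in without changing anything else.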

Our game plan is to first iterate with a cardboard box and make sure that the motion can alter the music. We’ll be exploring Pure Data and linking things through Firmata. After getting that done (our MVP), we will work on the bells and whistles of visual output. Our thinking is that the final product will be a laser-cut box that we can set on the floor in the center of the exhibition.