Culpability in AI Incidents: Can I Have A Piece?

By Elda Pere | June 16, 2022

With so many entities deploying AI products, it is not difficult to distribute blame when things go wrong. As data scientists, we should keep the pressure on ourselves and welcome the responsibility to create better, fairer learning systems.

The question of who should take responsibility when technology goes wrong is a messy one. Take the case mentioned by Madeleine Clare Elish in her paper “Moral Crumple Zones: Cautionary Tales in Human-Robot Interaction”. If an autonomous car gets into an accident, is it the fault of the car owner who enabled the autonomous setting? Is it the fault of the engineer who built the autonomous functionality? The manufacturer that built the car? The city infrastructure’s unfriendliness towards autonomous vehicles? And when banks disproportionately deny loans to marginalized communities, is it the fault of the loan officer, of the parties the bank buys information from, or of a historically unjust system? The cases are endless, ranging from misgendering on social media platforms to misallocating resources on a national scale.

A good answer would be that all parties share the blame, but however true this may be, it does not prove useful in practice. It just makes it easier for each party to pass the baton and relieves the pressure to do something about the issue. With this post, in the name of all other data scientists, I hereby take on the responsibility to resolve the issues that a data scientist has the skills to resolve. (I expect rioting on my lawn sometime soon, with logistic regressions in place of pitchforks.)

Why Should Data Scientists Take Responsibility?

Inequalities that come from discriminating on the basis of demographic features such as age, gender or race occur because users are sorted into specific buckets and stereotyped as a group. Users are categorized in this way because the systems that make use of this information need buckets to function. Data scientists control these systems. They choose between a logistic regression and a clustering algorithm. They choose between a binary gender option, a categorical gender with more than two categories, or a free-form text box where users do not need to select from a pre-curated list. While this last option most closely follows the user’s identity, the technologies that make use of this information need categories to function. This is why Facebook “did not change the site’s underlying algorithmic gender binary” despite giving the user a choice of over 50 different genders to identify with back in 2014.

So What Can You Do?

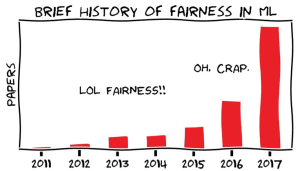

While there have been a number of efforts in the field of fair machine learning, many of them are still in the format of a scientific paper and have not been used in practice, especially with the growing interest demonstrated in Figure 1.

Figure 1: A Brief History of Fairness in ML (Source)

Here are a few methods and tools that are easy to use and that may help in practice.

- Metrics of fairness for classification models such as demographic parity, equal opportunity and equalized odds. “How to define fairness to detect and prevent discriminatory outcomes in Machine Learning” describes good use cases and potential things that could go wrong when using these metrics.

- Model explainability tools that increase transparency and make it easier to spot discrepancies. Popular options listed by “Eliminating AI Bias” include:

  - LIME (Local Interpretable Model-Agnostic Explanations), which explains individual predictions.

  - Partial Dependence Plots (PDPs), which show how each feature influences the prediction on average.

  - Accumulated Local Effects (ALE) plots, which serve the same purpose as PDPs but accumulate local changes in the prediction, so they remain reliable when features are correlated.

- Toolkits and fairness packages such as:

  - The What-if Tool by Google.

  - The FairML bias audit toolkit.

  - The Fair Classification, Fair Regression or Scalable Fair Clustering Python packages.
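
To make the fairness metrics from the first item concrete, here is a minimal sketch in plain Python. It is not taken from any of the packages above: the function names (`group_rates`, `fairness_gaps`) and the toy data are hypothetical, and summarizing equalized odds as the larger of the true positive rate gap and the false positive rate gap is one common convention rather than the only one. Labels and predictions are assumed to be binary (0 or 1).

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true positive rate and false positive rate."""
    counts = defaultdict(
        lambda: {"n": 0, "pred_pos": 0, "pos": 0, "tp": 0, "neg": 0, "fp": 0}
    )
    for yt, yp, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["pred_pos"] += yp
        if yt == 1:
            c["pos"] += 1
            c["tp"] += yp
        else:
            c["neg"] += 1
            c["fp"] += yp

    rates = {}
    for g, c in counts.items():
        rates[g] = {
            # Share of the group that receives a positive prediction.
            "selection_rate": c["pred_pos"] / c["n"],
            # Undefined (nan) if the group has no positive / negative labels.
            "tpr": c["tp"] / c["pos"] if c["pos"] else float("nan"),
            "fpr": c["fp"] / c["neg"] if c["neg"] else float("nan"),
        }
    return rates


def _gap(rates, key):
    """Largest difference in one rate between any two groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


def fairness_gaps(y_true, y_pred, groups):
    """Gaps of 0 mean the corresponding criterion holds exactly."""
    rates = group_rates(y_true, y_pred, groups)
    tpr_gap = _gap(rates, "tpr")
    fpr_gap = _gap(rates, "fpr")
    return {
        # Demographic parity: equal selection rates across groups.
        "demographic_parity_gap": _gap(rates, "selection_rate"),
        # Equal opportunity: equal true positive rates across groups.
        "equal_opportunity_gap": tpr_gap,
        # Equalized odds: equal true AND false positive rates (assumed summary).
        "equalized_odds_gap": max(tpr_gap, fpr_gap),
    }


# Toy data (made up for illustration): two groups of four people each.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 1, 0, 0]

gaps = fairness_gaps(y_true, y_pred, groups)
print(gaps)
```

In this toy example both groups have the same true positive rate, so equal opportunity holds, yet group "a" receives more false positives, so demographic parity and equalized odds do not. This is the kind of disagreement between metrics that makes it worth checking more than one.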

Parting Words

My hope for these methods is that they inform data science practices that have sometimes gained too much inertia, and that they encourage practitioners to model beyond the ordinary and choose methods that could make the future just a little bit better for the people using their products. With this, I pass the baton to the remaining culprits to see what they may do to mitigate –.

This article ended abruptly due to data-science-related rioting near the author’s location.