Data Privacy and Shopping

By Joseph Issa | February 23, 2022

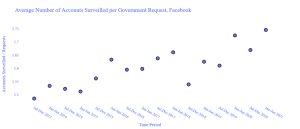

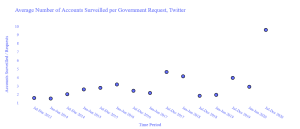

Data plays an essential role in our daily lives in the digital age. When people shop online, they provide various kinds of personal information, such as their name, email address, and home address. To stay competitive in the data science world, we need to take a close look at users' data privacy. For example, we may need to train a model on sensitive patient data to predict diabetes while keeping the patients anonymous. Social media platforms such as Facebook and Twitter routinely collect and share users' data. In 2018, the European Union's General Data Protection Regulation (GDPR) came into effect, introducing a set of rules to protect the personal data of people in the EU. Any organization that processes the personal data of people in the EU must comply with it, regardless of where its servers are located. Key provisions include requiring organizations whose core activities involve large-scale processing of sensitive data to appoint a data protection officer, and requiring companies with 250 or more employees to keep records of their data processing activities. Facebook has faced massive penalties for not complying with GDPR.

Source: The Statesman

Everything about us, the users, is data: how we think, what we eat, what we wear, and what we own. Data protection laws are not going anywhere, and we will see more of them in the coming years. The key question is how to preserve users' privacy while training models on this sensitive data. Apple, for example, has rolled out privacy techniques such as differential privacy in its operating systems, allowing it to collect users' data anonymously and train models that improve the user experience. Google likewise collects anonymized data in Chrome and in Google Maps, where it helps predict traffic jams. Numerai, a hedge fund, allows data scientists around the world to train their models on encrypted financial data while keeping its clients' data private.
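Apple's published approach is based on local differential privacy: each device adds random noise to its answer before sending it, so the collector cannot trust any single report yet can still estimate statistics over the whole population. The sketch below shows randomized response, one of the simplest local differential privacy mechanisms. It is an illustration only, not Apple's or Google's actual implementation; the truth probability `p_truth` and the simulated population are assumptions chosen for the example.

```python
import random


def randomized_response(true_answer: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the true answer with probability p_truth; otherwise report a fair coin flip."""
    if rng.random() < p_truth:
        return true_answer
    return rng.random() < 0.5


def estimate_true_rate(reports: list[bool], p_truth: float) -> float:
    """Undo the noise on aggregate: observed = p_truth * true_rate + (1 - p_truth) * 0.5."""
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth) * 0.5) / p_truth


if __name__ == "__main__":
    rng = random.Random(42)
    p_truth = 0.5    # assumed parameter: chance a device reports honestly
    true_rate = 0.3  # simulated share of users with the sensitive attribute
    users = [rng.random() < true_rate for _ in range(100_000)]
    reports = [randomized_response(u, p_truth, rng) for u in users]
    print(f"estimated rate: {estimate_true_rate(reports, p_truth):.3f}")
```

With `p_truth = 0.5`, a user who truly has the attribute reports "yes" with probability 0.75 and one who does not reports "yes" with probability 0.25, so any single report is plausibly deniable (this corresponds to ε = ln 3 in differential-privacy terms), while the aggregate estimate stays close to the true rate.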

There are different techniques for developing prediction models while preserving users' privacy. First, though, let's look at one of the most notorious examples of what predictive analytics can do. It is well known that every time you go shopping, retailers take note of what you buy and when you buy it. Your shopping habits are tracked and analyzed: what time you shop, whether you use digital or paper coupons, whether you buy brand-name or generic products, and much more. This data is stored in internal databases, where it is picked apart to find correlations between your demographics and your buying habits.

Stores keep a record of everything you buy; that is how retail stores know which coupons to send to which customers. Each purchase adds to a history of everything a customer has bought at a given store. Target, for example, figured out that a teenager was pregnant before her family even knew. Target's sophisticated prediction algorithm could estimate whether a female shopper was pregnant based on a selection of about 25 products that pregnant women tend to buy, among them vitamins, zinc and magnesium supplements, and extra-large clothing. Using this data, Target could predict a pregnancy before anyone close to the shopper knew about it. Target began sending baby coupons to the young woman's home address, where she lived with her parents. Her father asked why his daughter was receiving baby coupons in the mail. It turned out that she was pregnant and had told no one. Target's objective was to become the primary store for future moms, but in pursuing it, the company violated its customers' privacy.
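Target's actual model and weights have not been published, so the following is only a hypothetical sketch of how a score over a fixed list of indicator products could work: each product gets an assumed weight, a shopper's basket is checked against the list, and a logistic function turns the weighted sum into a score between 0 and 1. The product names, weights, and `BIAS` term are all invented for illustration.

```python
import math

# Hypothetical indicator products and weights, invented for illustration only.
INDICATOR_WEIGHTS = {
    "vitamins": 1.4,
    "zinc supplement": 0.6,
    "magnesium supplement": 0.6,
    "extra-large clothing": 0.5,
}
BIAS = -3.0  # hypothetical intercept: shoppers score low unless several indicators appear


def purchase_score(basket: set[str]) -> float:
    """Logistic score in (0, 1) computed from the indicator products present in a basket."""
    z = BIAS + sum(w for item, w in INDICATOR_WEIGHTS.items() if item in basket)
    return 1.0 / (1.0 + math.exp(-z))


if __name__ == "__main__":
    typical = {"bread", "milk"}
    flagged = {"vitamins", "zinc supplement", "magnesium supplement", "extra-large clothing"}
    print(f"typical basket: {purchase_score(typical):.3f}")
    print(f"flagged basket: {purchase_score(flagged):.3f}")
```

The point of the sketch is that no single item is revealing on its own; it is the combination of ordinary purchases that pushes the score up, which is why a shopper has no easy way to know what a retailer has inferred.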

Source: YouTube

The bottom line is that Target wanted to identify pregnant customers before they looked pregnant, at a stage when they are still hard to distinguish from customers who are not pregnant. The reason is that expectant parents are potential goldmines: they start shopping for many items they did not buy before the pregnancy. It is terrifying that a company can know what is going on inside your body or your home without your telling it. After the story spread across news media, Target decided to shut down the program, including the pregnancy prediction algorithm.

Target could have camouflaged the baby-related coupons among regular ones, so that other people in the house would not conclude from the mail that their daughter was pregnant. For example, it could have included coupons or ads for wine or other food items alongside them. Mixed in this way, the baby-related coupons would reach people's homes without arousing suspicion.

Amazon was involved in another online-shopping data incident: a technical error accidentally exposed users' data, including names, email addresses, home addresses, and payment data. The company denied that the incident was a breach or a hack, even though the outcome for affected users is the same.

Conclusion

In a digital economy, data is of strategic importance. As more social, governmental, economic, and shopping activities move online, the flow of personal data is expanding fast, raising concerns about data privacy and protection. Legal frameworks governing data protection, data collection, and data use should be in place to protect users' personal information and privacy.

Furthermore, companies should be held accountable when they fail to keep users' data confidential.
