Risk Governance as a Path Towards Accountability in Machine Learning

By Anonymous | October 8, 2021

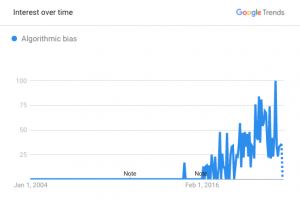

Over the past five years there has been a growing public conversation about the impact of machine learning (ML) – systems that learn from historical examples rather than being hard-coded with rules – on society and individuals. Much of the coverage has focused on bias in these systems – the propensity for social media feeds, news feeds, facial recognition and recommendation systems (like those that power YouTube and TikTok) to disproportionately harm historically marginalized or protected groups. Examples range from [categorizing African Americans as “gorillas”](https://www.theverge.com/2015/7/1/8880363/google-apologizes-photos-app-tags-two-black-people-gorillas) and [denying them bail at higher rates](https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing) than comparable white offenders to [demonetizing LGBTQ+ content on YouTube](https://www.vox.com/culture/2019/10/10/20893258/youtube-lgbtq-censorship-demonetization-nerd-city-algorithm-report), ostensibly based on benign word choices in video descriptions. In the US, concern has also grown around the use of these systems by social media sites to [spread misinformation and radicalizing content](https://www.cbsnews.com/news/facebook-whistleblower-frances-haugen-misinformation-public-60-minutes-2021-10-03/) on their platforms, and the [safety of self-driving cars](https://www.nytimes.com/2018/03/19/technology/uber-driverless-fatality.html) continues to draw scrutiny.

Along with this swell of public awareness, a growing chorus of voices (such as [Joy Buolamwini](https://www.media.mit.edu/people/joyab/overview/), [Sandra Wachter](https://www.oii.ox.ac.uk/people/sandra-wachter/) and [Margaret Mitchell](http://m-mitchell.com/)) has emerged, advocating for fairness, transparency and accountability in the use of machine learning. Corporations appear to be moving in this direction as well, though not without [false starts](https://www.bloomberg.com/news/articles/2021-02-18/google-to-reorganize-ai-teams-in-wake-of-researcher-s-departure), controversy and a lack of clarity on how to operationalize their often lofty, well-publicized AI principles.

From one corner of these conversations an interesting thought has begun to emerge: that these problems are [neither new, nor novel to ML](https://towardsdatascience.com/the-present-and-future-of-ai-regulation-afb889a562b7). In fact, institutions already have a well-honed tool to help them navigate this space: organizational risk governance practices. Risk governance encompasses the “…institutions, rules conventions, processes and mechanisms by which decisions about risks are taken and implemented…” ([Wikipedia, 2021](https://en.wikipedia.org/wiki/Risk_governance)) and broadly contemplates all types of risk, including financial, environmental, legal and societal concerns. In practice, it is often carried out by dedicated groups within institutions whose goal is to catalogue and prioritize risks (both to the company and those the company poses to the wider world), while working with the business to ensure they are mitigated, monitored and/or managed appropriately.

It stands to reason, then, that this mechanism may also be leveraged to consider and actively manage the risks associated with deploying machine learning systems within an organization, helping to [close the current ML accountability gap](https://dl.acm.org/doi/pdf/10.1145/3351095.3372873). That goal seems more within reach when we consider that the broader risk management ecosystem (of which risk governance forms a foundational part) also includes standards (government regulations or principles-based compliance frameworks), corporate compliance teams that work directly with the business, and internal and external auditors that verify sound risk management practices for stakeholders as diverse as customers, partners, users, governments and corporate boards.

This also presents an opportunity for legacy risk management service providers, such as [PwC](https://www.pwc.com/gx/en/issues/data-and-analytics/artificial-intelligence/what-is-responsible-ai.html), as well as ML-focused risk management startups like [Monitaur](https://monitaur.ai/) and [Parity](https://www.getparity.ai/), to bring innovation and expertise into institutional risk management practices. As this ecosystem continues to evolve alongside data science, research and public policy, risk governance stands to help turn organizational principles into operational practice, and hopefully lead us into a new era of accountability in machine learning.