Rise of Voice Assistants

by Lucas Lam | April 2, 2021

Many horror stories have surfaced alongside the rise of voice assistants. From Alexa giving a couple unwarranted advice to Alexa threatening someone with Chuck Norris, plenty of creepy, perhaps crazy, stories have made the rounds. Without questioning the validity of these stories or getting deep into conspiracy theories, we can recognize that voice assistants such as Amazon's Echo and Google Home have raised, and will continue to raise, privacy concerns. Since these devices are only getting harder to avoid, we need to understand what kind of data they collect, what measures we can take to protect our privacy, and how we can use them with peace of mind.

What are they collecting?

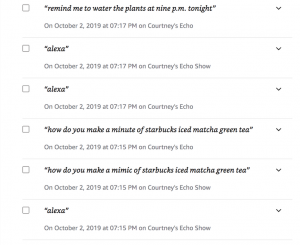

In the words of a How-To Geek article: “Alexa is always listening but not continually recording.” Voice assistants are triggered by wake words; for Amazon’s device, the wake word is “Alexa.” Once the device detects its wake word, the light ring turns blue and the device starts capturing audio. Anything said after the wake word is fair game: the request is recorded, sent to the cloud for processing, and a response is sent back to the device so it can carry out the task. Amazon’s Alexa Privacy Hub states that “all interactions with Alexa are encrypted in transit to Amazon’s cloud where they are securely stored,” presenting the round trip to the cloud and back as a secure process. After a request is processed, the recording is stored, although users can choose to delete stored recordings.
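To make “always listening but not continually recording” more concrete, here is a minimal Python sketch. It is not how Amazon’s device actually works: it assumes, for simplicity, that audio arrives as a stream of already-transcribed words, and the detector `is_wake_word`, the buffer size, and the empty-string silence marker are all hypothetical. Every word passes through a short local buffer, but only the words after the wake word are kept and “sent to the cloud.”

```python
from collections import deque

WAKE_WORD = "alexa"   # assumption: the wake word is matched as a text token
BUFFER_FRAMES = 3     # hypothetical size of the short local rolling buffer
SILENCE = ""          # hypothetical marker for the end of a spoken request


def is_wake_word(frame: str) -> bool:
    """Stand-in for an on-device wake-word detector (hypothetical)."""
    return frame.lower() == WAKE_WORD


def listen(frames):
    """Return only the requests that would leave the device.

    Every frame passes through a short local buffer, but nothing is kept
    unless the wake word is detected; then the words that follow, up to
    the next silence marker, are captured as one request.
    """
    local_buffer = deque(maxlen=BUFFER_FRAMES)  # oldest frames fall out automatically
    sent_to_cloud = []
    capturing = False
    request = []

    for frame in frames:
        if capturing:
            if frame == SILENCE:
                sent_to_cloud.append(" ".join(request))  # "upload" the finished request
                request = []
                capturing = False
            else:
                request.append(frame)
        else:
            local_buffer.append(frame)  # always listening...
            if is_wake_word(frame):
                capturing = True        # ...but only recording after the wake word
                local_buffer.clear()    # buffered pre-wake-word audio is discarded

    # A request still in progress when the stream ends is sent as-is.
    if capturing and request:
        sent_to_cloud.append(" ".join(request))
    return sent_to_cloud
```

For example, `listen(["we", "talked", "about", "dinner", "alexa", "play", "some", "jazz", "", "and", "more", "chat"])` returns `["play some jazz"]`: the conversation before the wake word and the chatter after the request never leave the device. A real device works on raw audio rather than words, so this only illustrates the gating idea.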

Recordings that users don’t actively delete are information these companies can harness to “understand” them better: to give more targeted and customized responses, make more precise recommendations, and so on. Though this can feel creepy, the real threat is not the virtual assistant understanding your preferences better; it is that understanding falling into the hands of other people.

Potential Threats

What are some of the real threats when it comes to virtual assistants?

Any false trigger of the wake word leads to unwelcome eavesdropping. Again, these voice assistants are always listening for their wake words, so a word mistaken for “Alexa” causes the device to record audio unintentionally and return a response. That is why it is of utmost importance that companies optimize their algorithms to reduce false positives and increase the precision of wake-word detection.

Another major threat is that these recordings can land in the hands of people working on the product, from machine learning engineers to the transcribers who work with this kind of data to improve the device’s services. Though personally identifiable information should be encrypted, a Bloomberg article revealed that transcribers potentially have access to first names, device serial numbers, and account numbers.
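The trade-off behind wake-word precision can be sketched in a few lines of Python. The detector scores and labels below are made-up numbers, and tuning a single confidence threshold is a simplifying assumption, not a description of how Amazon or Google actually tune their detectors. Raising the threshold cuts down accidental recordings (false positives) but can make the device miss real wake words.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of wake-word triggers that were real wake words."""
    triggers = true_positives + false_positives
    return true_positives / triggers if triggers else 0.0


def evaluate(scores, labels, threshold):
    """Count outcomes at a given detector threshold.

    scores: hypothetical detector confidence for each utterance (0 to 1)
    labels: True if the utterance really was the wake word
    Returns (true positives, false positives, missed wake words).
    """
    tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y)
    fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and not y)
    missed = sum(1 for s, y in zip(scores, labels) if s < threshold and y)
    return tp, fp, missed


# Made-up example data, for illustration only.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40]
labels = [True, True, False, True, False, False]

for threshold in (0.5, 0.85):
    tp, fp, missed = evaluate(scores, labels, threshold)
    print(f"threshold={threshold}: precision={precision(tp, fp):.2f}, "
          f"accidental recordings={fp}, missed wake words={missed}")
```

With this toy data, a threshold of 0.5 gives a precision of 0.60 with two accidental recordings and no missed wake words, while 0.85 gives a precision of 1.00 with no accidental recordings but one missed wake word.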

Hacking is another possible threat. According to an article from Popular Mechanics, a German security consulting firm found that voice data can be compromised through third-party apps. Attackers can attempt phishing by getting a virtual assistant to ask for a password or other sensitive information, as if it were needed to process a request. Active security measures must be put in place to prevent such activity.

**What to do?**

These threats are real, and their consequences can escalate. The odds of something like this happening to the average Joe are low, but anyone worried about the consequences can do plenty to protect their data privacy, from setting up automatic deletion of voice recordings to deleting recordings one by one. Using these devices carefully and learning what privacy controls are available can give you greater peace of mind every time you come home and talk to Alexa.

References:

https://www.bloomberg.com/news/articles/2019-04-10/is-anyone-listening-to-you-on-alexa-a-global-team-reviews-audio

https://tiphero.com/creepiest-things-alexa-has-done

https://www.amazon.com/Alexa-Privacy-Hub/b?ie=UTF8&node=19149155011

https://www.washingtonpost.com/technology/2019/05/06/alexa-has-been-eavesdropping-you-this-whole-time

https://www.howtogeek.com/427686/how-alexa-listens-for-wake-words/