Addressing the Weaponization of Social Media to Spread Disinformation

By Anonymous | July 3, 2020

The use of social media platforms like Facebook and Twitter by political entities to present their perspectives and goals is arguably a key aspect of the platforms’ utility. However, social media is not always used in a forthright manner. One example is Russia’s use of these sites to spread disinformation by exploiting platform engagement and the cognitive biases of users. The specific mechanisms of these techniques have been documented and summed up as a “Firehose of Falsehood”, a model that serves as a guide to identifying specific harms that we can proactively guard against.

The analysis was rooted in the techniques being employed around the time of Russia’s 2014 invasion of the Crimean Peninsula. Those techniques would go on to be reused to great effect in 2016, against the United Kingdom in its Brexit referendum and against the United States in its presidential election. More recently, the Firehose has been turned on many other targets, including 5G cellular networks and vaccines.

While its techniques share some similarities with those of its Soviet predecessors, the key characteristics of Russian propaganda are that it is high-volume and multichannel, continuous and repetitive, and lacking commitment to objective reality or consistency. This approach lends itself well to social media platforms, as the speed at which new false claims can be generated and broadly disseminated far outstrips the speed at which fact checkers operate: polluting is easy, but cleaning up is difficult.

Figure 1: The evolution of Russian propaganda towards obfuscation and using available platforms

(Sources: Amazon, CBS)

The Firehose also emphasizes exploiting audience psychology in order to disinform. The techniques include seizing the advantage of the first impression, using information overload to force the audience onto shortcuts for judging trustworthiness, using repetition to create familiarity, presenting evidence regardless of its veracity, and supplying peripheral cues such as the appearance of objectivity. Repetition in particular works because familiar claims are favoured over less familiar ones: repeating the message frequently builds familiarity, which in turn leads to acceptance. From there, confirmation bias further entrenches those views.

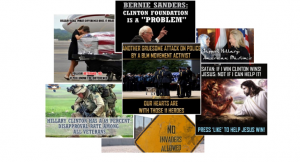

Figure 2: A cross-section of specimens from the 2016 election

(Source: Washington Post)

Given the nature of the methods outlined, some suggested responses are:

1. Do not rely solely on traditional techniques of pointing out falsehoods and inconsistencies

2. Get ahead of misinformation by raising awareness and exposing manipulation efforts

3. Focus on thwarting the desired effects by redirecting behaviours or attitudes without directly engaging with the propaganda

4. Compete by increasing the flow of persuasive information

5. Turn off the flow by undermining the broadcast and message dissemination through enforcement of terms of service agreements with internet service providers and social media platforms

From an ethical standpoint, some of the proposed measures carry hazards of their own. In particular, the last suggestion (“turn off the flow”) may be construed as viewpoint-based censorship if executed without respect for the users’ autonomy in constructing their perspectives. Likewise, competing may be tantamount to fighting fire with fire, depending on the implementation. Where possible, getting ahead of the misinformation is preferable, as forewarning acts as an inoculant for the audience: by securing the first impression and highlighting attempts at manipulation, it prepares users to critically assess new information.

If it is necessary to engage directly with the claims being made, the proposed solutions are:

1. Warn at the time of initial exposure to misinformation

2. Repeat the retraction/refutation, and

3. Provide an alternative story while correcting misinformation to immediately fill the information gap that arises

These proposed solutions are less problematic than the prior options. Although fact checking struggles to keep pace with the volume of false claims, limiting the scope to countering the harms of specific instances of propaganda preserves respect for users’ ability to arrive at their own conclusions.

In fighting propaganda, how can we be sure that our actions remain beneficent in nature? In understanding the objectives and mechanics of the Firehose, we also see that there are ways to address the harms being inflicted in a responsible manner. By respecting the audience’s capacity to exercise free will in arriving at their own conclusions, and by augmenting the information available to them with what is relevant, we can tailor our response to be both effective and ethical.

Sources:

The Russian “firehose of falsehood” propaganda model: Why it might work and options to counter it

Your 5G Phone Won’t Hurt You. But Russia Wants You to Think Otherwise

Firehosing: the systemic strategy that anti-vaxxers are using to spread misinformation

Release of Thousands of Russia-Linked Facebook Ads Shows How Propaganda Sharpened

What we can learn from the 3,500 Russian Facebook ads meant to stir up U.S. politics