Freudian Slips

by Anonymous | December 4, 2018

In the ongoing battle over the use and abuse of personal data in the age of big data, we often see two competing forces: companies that want to innovate, and regulators who want to impose limits to prevent the privacy violations or social harms that could arise from unrestricted use. Companies and organizations often want as much data as possible, unfettered by regulation or other restrictions.

An interesting way to think about the underlying dynamic is to superimpose psychological models of human behavior on the cultural forces at play. In her book Patterns of Culture, Ruth Fulton Benedict wrote that culture is “personality writ large” (htt1). One model for understanding the forces underlying human behavior is Freud’s model of the Id, Superego and Ego. In this model, Freud identified the Id as the primal drive for gratification, whether that means satisfying hunger or sexual desire. It is an entirely selfish want or need. The Superego is the part of the psyche that adheres to social norms and morality and is aware of the social contract: the mutually agreed-upon rules that allow individuals to coexist. The Ego mediates between these two forces, and its output is the behavior presented to the world. In Freud’s model, a balance tilted too far toward either the Id or the Superego results in disordered behavior. Consider a person who feels hungry and simply takes food off other people’s plates without hesitation. This imbalance, an Id without sufficient restraint from the Superego, would be considered unhealthy behavior.

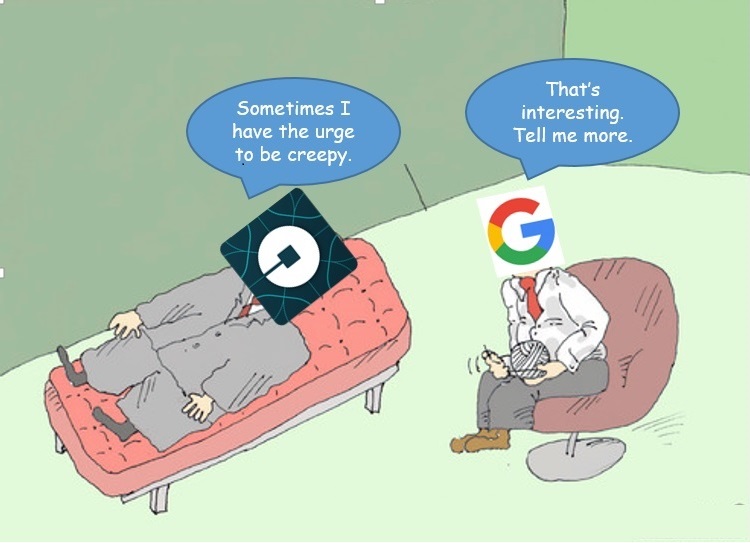

Drawing an analogy to behavior in the world of big data, we can see the Id as the urge of companies to innovate and profit. It is what drives people to search for new answers, create innovative products, make money, and dive into solving a problem regardless of the consequences. However, when this urge is unchecked by the Superego, that is, by any regard for the social contract, unhealthy behavior begins to leak out: privacy and data breaches, unethical use of people’s data, and even abusive workplace environments. Consider Uber, an extraordinarily innovative company with rapid growth. Under Travis Kalanick, that growth had a strong Id component, a take-no-prisoners approach in which people’s privacy was often overlooked. The company often flouted city regulations and cease-and-desist orders. It built an application to evade law enforcement (htt2). It also used data analytics to infer whether passengers were having one-night stands (htt4).

Of course, some of these unchecked forces inevitably erode trust. But without that Id, the drive to create, innovate and make money, Uber would likely not have grown so rapidly. It is also likely no coincidence that the same unchecked Id leaked into the workplace, leading to widespread allegations of sexual harassment and misconduct and, eventually, the resignation of the company’s CEO. Google, which has quickly grown into one of the biggest companies in the world, has also recently faced similar allegations of widespread sexual misconduct.

On the flip side, an organization dominated by the Superego, one that is overly protective and regulatory and always weighing stringent rules, might also be considered unhealthy. Consider the relatively limited innovation that tends to come out of governmental organizations and institutions. Superimposing this Freudian perspective on the battles between big data organizations and government regulators is one way to interpret the roles each group plays. Neither could exist without the other, and the balance between the two is what creates healthy growth and a healthy environment. A necessary amount of regulation, or reflection on social consequences, combined with the corresponding primal urges for recognition or power, can create the type of growth that produces healthy organizations on both sides.

References

(htt1) n.d. Retrieved from http://companyculture.com/113-culture-is-personality-writ-large/

(htt2) n.d. Retrieved from https://thehill.com/policy/technology/368560-uber-built-secret-program-to-evade-law-enforcement-report

(htt4) n.d. Retrieved from https://boingboing.net/2014/11/19/uber-can-track-your-one-night.html