Credit-Score Algorithm Bias: Big Data vs. Human

By Anonymous | October 27, 2022

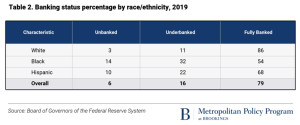

Can Big Data eliminate human bias? In the U.S. credit market, minority groups disproportionately receive unfavorable terms or outright denials on their loan, mortgage, or credit card applications. These groups also tend to be subject to higher interest rates than their peers. Such decisions rely on the data available to lenders as well as on the lenders' discretion, which inserts human bias into the mix.

In reality, access to credit differs starkly for minorities, and especially for African Americans. The contrast shows up in interest rates on business loans, in bank branch density, with fewer banking locations in predominantly Black neighborhoods, and finally in the stunted growth of local businesses in those areas. Several solutions have been proposed to tackle this issue. The Community Reinvestment Act was signed into law in 1977. Initiatives such as African American minority depository institutions were also put in place to increase access to banking for the underserved.

The ever-growing role of Big Data is an opportunity to remove prevalent selection biases from lending decisions. Nonetheless, the limitations of Big Data are becoming apparent, as these minority groups are still largely marginalized. Specifically, many existing machine learning models place heavy emphasis on certain traits of the population to determine creditworthiness. Demographic characteristics such as education, location, and income, for instance, are closely intertwined with a population’s profile. Human characteristics are thus encoded into the data in a specific way and then validated against premises that may be outdated, so that different groups end up being weighted differently.

Big Data could eliminate human biases by assigning different weights to different population categories. Credit-score algorithms that ensure fair and unbiased decisions should be put in place. In fact, demographic features used in calculating measures of creditworthiness, such as FICO scores, may be beneficial if the algorithm used is fair, is unbiased, and has undergone a strict regulatory review. The key point is that a single standard should not be applied to populations of such differing makeup.

A credit score is produced by a mathematical model that compares an individual’s credit to that of millions of other people. It is an indication of creditworthiness based on a review of past and current relationships with lenders, and it aims to provide insight into how an individual manages their debts. Currently, credit-score algorithms leverage information on payment history (35%), credit utilization ratio (30%), length of credit history (15%), new credit (10%), and credit mix or types of credit (10%). The resulting number is then assigned to one of five categories: very bad credit (300–499), bad credit (500–600), fair credit (601–660), good credit (661–780), and excellent credit (781–850).
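To make the weights and categories above concrete, here is a minimal Python sketch. The weights and score bands are taken from the paragraph above. Everything else is an assumption made for illustration: the function names `composite_score` and `score_band`, the idea of rating each factor on a 0 to 1 scale, and the linear mapping onto the 300 to 850 range are all hypothetical. Actual scoring formulas are proprietary, so this should not be read as how any real credit score is computed.

```python
# Factor weights as listed in the article (fractions of the total score).
WEIGHTS = {
    "payment_history": 0.35,
    "credit_utilization": 0.30,
    "length_of_history": 0.15,
    "new_credit": 0.10,
    "credit_mix": 0.10,
}

# Score bands as listed in the article: (low, high, label), bounds inclusive.
BANDS = [
    (300, 499, "very bad"),
    (500, 600, "bad"),
    (601, 660, "fair"),
    (661, 780, "good"),
    (781, 850, "excellent"),
]

SCORE_MIN, SCORE_MAX = 300, 850


def composite_score(factor_ratings):
    """Hypothetical: combine per-factor ratings in [0, 1] into a 300-850 score.

    The linear scaling is an assumption for illustration only; it is not
    the formula used by any real scoring model.
    """
    missing = set(WEIGHTS) - set(factor_ratings)
    if missing:
        raise ValueError(f"missing factor ratings: {sorted(missing)}")
    for name in WEIGHTS:
        if not 0.0 <= factor_ratings[name] <= 1.0:
            raise ValueError(f"rating for {name} must be between 0 and 1")
    weighted = sum(WEIGHTS[name] * factor_ratings[name] for name in WEIGHTS)
    return round(SCORE_MIN + weighted * (SCORE_MAX - SCORE_MIN))


def score_band(score):
    """Return the article's category label for an integer score."""
    for low, high, label in BANDS:
        if low <= score <= high:
            return label
    raise ValueError(f"score {score} is outside {SCORE_MIN}-{SCORE_MAX}")


if __name__ == "__main__":
    ratings = {name: 0.5 for name in WEIGHTS}  # made-up ratings
    score = composite_score(ratings)
    print(score, score_band(score))
```

Because payment history and credit utilization together carry 65% of the weight in this sketch, a change in either of those two ratings moves the result far more than the same change in credit mix or new credit.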

An error in credit reporting, for instance, could have a long-lasting negative effect on a person's creditworthiness. This is particularly damaging to vulnerable groups, since the repercussions can last for years.

References:

- https://www.gsb.stanford.edu/insights/big-data-racial-bias-can-ghost-be-removed-machine

- https://www.brookings.edu/research/an-analysis-of-financial-institutions-in-black-majority-communities-black-borrowers-and-depositors-face-considerable-challenges-in-accessing-banking-services/

- https://www.creditninja.com/credit-score-algorithm/