The Tip of the ICE-berg: How Immigration and Customs Enforcement uses your data for deportation

By Anonymous | June 16, 2022

Photo by SIMON LEE on Unsplash

The United States is a country made of immigrants. It is a great social experiment in anti-tribalism, meaning that there is no single homogeneous group. Everyone came from somewhere with different ideals, ancestry, religion, and life goals that have all blended to make this country the multifaceted melting pot it is today. But for a country made of immigrants, the U.S. certainly goes the extra mile, using any means available, to find and punish those who immigrate now.

Before Immigration and Customs Enforcement (ICE) existed, the U.S. created the Immigration and Naturalization Service (INS) in 1933 to handle the country’s immigration process, border patrol, and enforcement.[1] During the Great Depression, immigration rates dropped, and the INS shifted its focus to law enforcement.[2] From the 1950s through the 1990s, the INS answered public outcry over illegal aliens working in the U.S. by cracking down on immigration and enforcing deportation. However, the INS had no meaningful way of tracking those already in the United States and lacked a border exit system for those who had entered the country on visas. The INS’s shortcomings were further highlighted in the aftermath of 9/11, when it was uncovered that at least two of the hijackers were in the U.S. on expired visas.[3] In response, the Homeland Security Act dissolved the INS and created ICE, Customs and Border Protection (CBP), and U.S. Citizenship and Immigration Services (USCIS). ICE wasted no time acquiring data to fulfill its mission “[T]o protect America from the cross-border crime and illegal immigration that threaten national security and public safety.”[4]

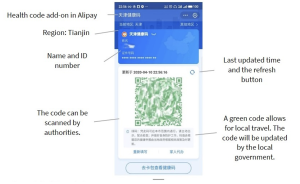

According to a recent report by Wang et al. (2022), ICE has contracts to increase its surveillance abilities by collecting and using data in the following categories:

- Biometric Data – Fingerprints, face recognition programs, and DNA

- Data Analysis – Combining and managing different data sources

- Data Brokers – Private companies’ databases and companies that sell third-party data, such as Thomson Reuters and LexisNexis, which sell information such as utility data, credit data, and DMV records

- Geolocation Data – GPS tracking, license plate readers, and CCTV

- Government Databases – Access to the databases of government agencies not under the Department of Homeland Security

- Telecom Interception – Wiretapping, Wi-Fi interception, and translation services[5]

This practice violates the five basic principles (transparency, individual participation, limitations of purpose, data accuracy, and data integrity) that the HEW Report set forth to safeguard personal information contained in data systems and that form the framework for the Fair Information Practice Principles (FIPPs).[6] The Department of Homeland Security (DHS), which oversees ICE, uses a formulation of FIPPs in its treatment of Personally Identifiable Information (PII).[7] However, ICE has been able to blur these ethical lines in the pursuit of finding and deporting illegal immigrants.

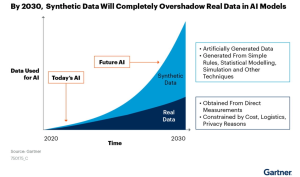

ICE can access information on individuals who are American citizens, legal residents, and legal visa holders, in addition to those it has classified as “criminal aliens,” all without consent from the individual. ICE has purchased, collected, and used your information to enforce immigration law and deport immigrants (some with no criminal record) warrantlessly and without legislative, judicial, or public oversight (Wang et al., 2022). Unfortunately, this may be by design, because purchasing data and combining it to identify individuals is not illegal and gives government organizations a way around legal requirements such as warrants or reasonable cause.

Setting up basic utilities such as power, water, and internet should not count as consent to have your data accessible to ICE, and neither should being detained, without being convicted of a crime, and having biometric data (fingerprints, DNA) taken.[8] Your trust in these essential services and in state and federal government agencies to safeguard your PII and use it only for its originally intended purpose is being abused. ICE continues to spend $388 million of taxpayer money per year to purchase as much data as possible to build an unrestricted domestic surveillance system (Wang et al., 2022). While ICE’s stated focus is illegal immigration, what or who is to stop it from targeting other immigrants, legal resident aliens, dual citizens, or foreign-born American citizens?

This process gives Solove’s Taxonomy of Privacy[9] whiplash, as it is rife with opportunity for surveillance, identification, insecurity, blackmail, increased accessibility among other government agencies, invasion, and intrusion, all from what should be a mundane piece of data that agencies collect or purchase about you – repeatedly.

Photo by ThisisEngineering RAEng on Unsplash

As a nation of immigrants, we owe the newest arrivals, no matter how they got here, a basic expectation of privacy, respect, and protection. When the exploitation of this data is contextualized using Nissenbaum’s[10] approach, an expectation of privacy is clear: U.S. citizens believe they have a right to privacy, including from the government, so why should this be different based on immigration status? When you apply for a basic service such as water and power, you should not be fearful that your home could be raided at any moment. Individuals will only generate more data as technology and digitalization progress. U.S. laws and policies, with severe penalties for companies and the government, need to evolve to protect PII before you become a target of ICE or any other government agency looking to play the role of law enforcement.

Positionality and Reflexivity Statement:

I am an American biracial, able-bodied, cisgender woman, wife, and mother. I belong to the upper-middle class, non-religious, unaffiliated voter bloc. I am the product of growing up in a family made up of newly arrived immigrants from all parts of the globe. Some came here through visa programs, some came sponsored, and some overstayed their tourist visas, fell in love, and married Americans. Regardless of their path to legal status to live and work in the United States, nothing can describe the weight lifted and the relief felt once approval to remain in this country is granted, not only by the individual being reviewed but by the entire community of family and friends they have created around them. I tell you this from experience but with limited information so that I don’t give ICE another data point or a reason to come looking.

[1] USCIS. (n.d.). USCIS. USCIS. Retrieved 2022, from https://www.uscis.gov/about-us/our-history

[2] USCIS History Office and Library. (n.d.). Overview of INS History. Retrieved May 19, 2022, from https://www.uscis.gov/sites/default/files/document/fact-sheets/INSHistory.pdf

[3] 9/11 Commission Report: Staff Monographs. (2004). Monograph on 9/11 and Terrorist Travel. National Commission on Terrorist Attacks Upon the United States. Retrieved May 19, 2022, from https://www.9-11commission.gov/staff_statements/911_TerrTrav_Ch1.pdf

[4] ICE. (n.d.). ICE. U.S. Immigration and Customs Enforcement. Retrieved May 19, 2022, from https://www.ice.gov

[5] Wang, N., McDonald, A., Bateyko, D., & Tucker, E. (2022, May). American Dragnet, Data-Driven Deportation in the 21st Century. Center on Privacy & Technology at Georgetown Law. https://americandragnet.org

[6] U.S. Department of Health, Education and Welfare. (1973, July). Records, Computers and the Rights of Citizens. Retrieved May 19, 2022, from https://aspe.hhs.gov/reports/records-computers-rights-citizens

[7] Fair Information Practice Principles (FIPPs) in the Information Sharing Environment. https://pspdata.blob.core.windows.net/webinarsandpodcasts/The_Fair_Information_Practice_Principles_in_the_Information_Sharing_Environment.pdf

[8] Department of Homeland Security. (2020, July). Privacy Impact Assessment for CBP and ICE DNA Collection, DHS/ALL/PIA-080. Retrieved May 19, 2022, from https://www.dhs.gov/sites/default/files/publications/privacy-pia-dhs080-detaineedna-october2020.pdf

[9] Solove, Daniel J. (2006). A Taxonomy of Privacy. University of Pennsylvania Law Review, 154:3 (January 2006), p. 477. https://ssrn.com/abstract=667622

[10] Nissenbaum, Helen F. (2011). A Contextual Approach to Privacy Online. Daedalus 140:4 (Fall 2011), 32-48.