Making AI “Explainable”: Easier Said than… Explained

By Julia Buffinton | October 21, 2018

Technological advances in machine learning and artificial intelligence (AI) have opened up many applications for these computational techniques across a range of industries. AI is now used for facial recognition in TSA PreCheck, for screening resumes at large companies, and for determining criminal sentences. In each of these cases, however, the algorithms have drawn attention for being biased. Biased predictions can have grave consequences, and understanding how bias ends up in an algorithm is key to preventing it.

Understanding how algorithms reach their conclusions is crucial to their adoption in sectors such as insurance, medicine, finance, security, law, and the military. Unfortunately, most people are not trained to understand these models and view them as opaque and unintuitive. This makes the ethical questions surrounding these algorithms harder to address: it's difficult to understand their bias if we don't understand how they work in general. Explainable AI is therefore key to ensuring that end users of these approaches will “understand, appropriately trust, and effectively manage an emerging generation of artificially intelligent machine partners.”

How can we do that?

Developing AI and ML systems is resource-intensive, and thoroughly managing their ethical implications and safety adds to that cost. The federal government has recognized the importance of not only AI but also ethical AI, and has increased its attention to and budget for both.

In 2016, the Obama administration formed the National Science and Technology Council (NSTC) Subcommittee on Machine Learning and Artificial Intelligence to coordinate federal activity relating to AI. Additionally, the Subcommittee on Networking and Information Technology Research and Development (NITRD) created a National Artificial Intelligence Research and Development Strategic Plan to recommend a roadmap for federal investment in AI research and development.

The plan identifies seven priorities:

- Make long-term investments in AI research

- Develop effective methods for human-AI collaboration

- Understand and address the ethical, legal, and societal implications of AI

- Ensure the safety and security of AI systems

- Develop shared public datasets and environments for AI training and testing

- Measure and evaluate AI technologies through standards and benchmarks

- Better understand the national AI R&D workforce needs

Three of the seven priorities focus on minimizing the negative impacts of AI. The plan states that “researchers must strive to develop algorithms and architectures that are verifiably consistent with, or conform to, existing laws, social norms and ethics,” and that to achieve this, they must “develop systems that are transparent, and intrinsically capable of explaining the reasons for their results to users.”

Is this actually happening?

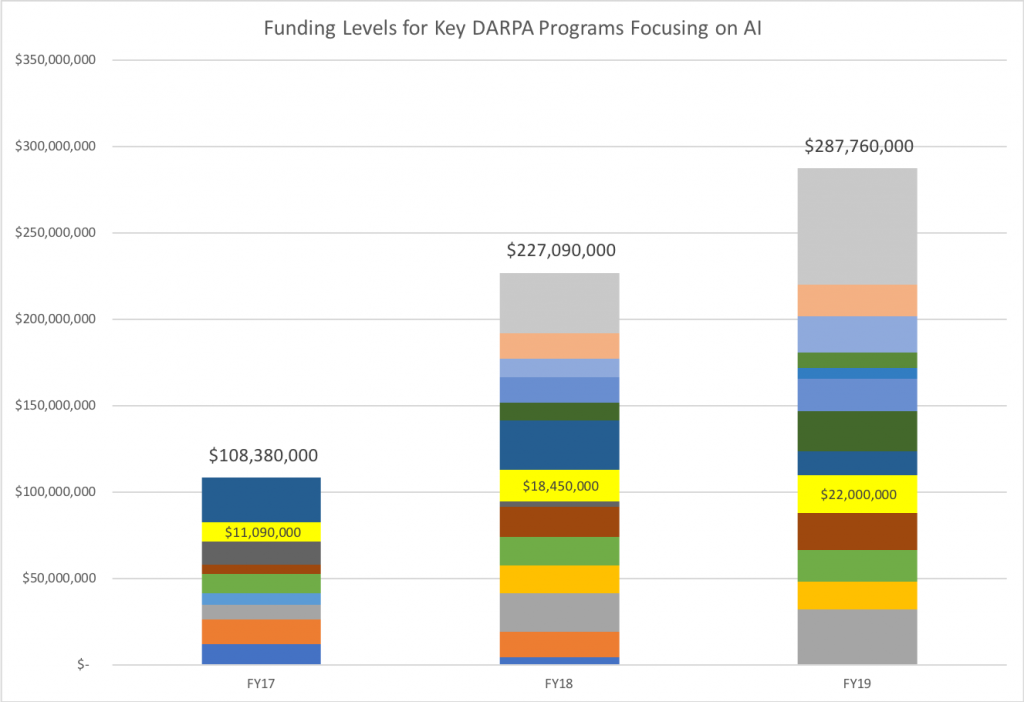

Even though the security, ethics, and policy of AI make up almost half of the federal government’s AI priorities, this emphasis has not translated directly into program funding. A brief survey of the budget of the Defense Advanced Research Projects Agency (DARPA) shows an overall increase in funding for 18 existing programs focused on advancing basic and applied AI research, with total funding almost doubling each year. However, only one of these programs aligns with the ethics and security priorities.

Funding Levels for DARPA AI Programs

The yellow bar in the chart represents the Explainable AI program, which aims to generate machine learning techniques that produce more explainable yet still accurate models and enable human users to understand, trust, and manage these models. Target results from this program include a library of “machine learning and human-computer interface software modules that could be used to develop future explainable AI systems” that would be available for “refinement and transition into defense or commercial applications.” While funding for Explainable AI is increasing, it is not growing at a rate proportional to the overall increase in spending on DARPA AI programs.

What’s the big deal?

This issue will become more pressing as national investment in AI grows. Some have recently predicted that China will close the AI gap with the US by the end of this year. As US organizations in industry and academia strive to compete with their international counterparts, careful consideration must be given not only to improving technical capabilities but also to developing an ethical framework for evaluating these approaches. This affects not only US industry and the economy but also national security. Reps. Will Hurd (TX) and Robin Kelly (IL) argue that, “The loss of American leadership in AI could also pose a risk to ensuring any potential use of AI in weapons systems by nation-states comports with international humanitarian laws. In general, authoritarian regimes like Russia and China have not been focused on the ethical implications of AI in warfare.” These AI tools give us great power, and with great power comes great responsibility: we must ensure that the systems we build are fair and ethical.