Update: Video Link! https://goo.gl/photos/qU17V29jDkocHKya6

Description:

Our instrument is a group of five robots, each with its own percussive ability. They are: 1.) a four-armed tap-bot with four servos that tap tap tap on things, 2.) a two-armed spinning bot that hits things with its metal hands to make noise, 3.) a rolling pinecone that makes a rumbling noise on its surface, 4.) a shepherd with a tap-tapping staff, and 5.) a scraper-bot that uses a bristly brush to scrape things and make noise.

We mounted four of the five on a turning lazy susan so that the sound could be customized by changing the things the robots tap on. (Rotating the lazy susan changes which object each robot taps.)

Our robots are controlled by a control board with 5 pots. The pots control: 1.) the tempo of the music that our bots make, 2.) the pattern with which the pine cone rolls, 3.) the pattern with which the scraper scrapes, 4.) the pattern with which the shepherd taps, and 5.) the speed with which the spinny bot spins.
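
To illustrate how a pot position can select one of a handful of patterns, here is a minimal sketch that splits a 10-bit analog reading (0 to 1023, as returned by Arduino's `analogRead`) into equal-width bands. The function name `potSection` and the `sections` parameter are made up for this example, and the band edges it produces differ by a count or two from the hard-coded thresholds in the listing further down.

```cpp
// Map a 10-bit ADC reading (0..1023) onto one of `sections` equal-width bands.
// Hypothetical helper for illustration; not the function used in the project code.
int potSection(int potVal, int sections) {
  if (potVal < 0) potVal = 0;        // clamp out-of-range input
  if (potVal > 1023) potVal = 1023;
  // Use long for the product so it cannot overflow a 16-bit int on an Arduino Uno.
  return (int)((long)potVal * sections / 1024);
}
```

With `sections` set to 5, readings 0 to 204 fall in band 0, 205 to 409 in band 1, and so on up to band 4.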

Challenges included: 1.) getting the robots to tap in similar patterns, or at least with some semblance of coherent synchrony, and 2.) getting the different settings of the pots to produce noticeably different sounds.
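
One way to see how different the tempo settings really are is to work out how long one full arm cycle takes at each setting. The sketch below assumes the numbers from the code listing further down: the position counter wraps at 160, each tick waits 35 ms, and the step per tick is twice the tempo pot's section (0 to 4). The function name `cycleMillis` is made up for this example, and the result ignores the time the servo writes and serial prints themselves take.

```cpp
// Approximate duration of one full arm cycle, in milliseconds, for a given
// tempo pot section (0..4). Returns -1 when the step is 0, because the
// position counter never advances and the cycle never completes.
long cycleMillis(int potSection) {
  const int kCycleLength = 160;  // pos wraps back to 0 at 160
  const int kStepDelayMs = 35;   // delay per tick in the main loop
  int step = potSection * 2;     // step added to pos on every tick
  if (step == 0) return -1;
  int ticks = (kCycleLength + step - 1) / step;  // ceiling division
  return (long)ticks * kStepDelayMs;
}
```

Under these assumptions, section 1 gives about 2800 ms per cycle and section 4 about 700 ms, while section 0 leaves the arms holding still.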

Materials Used:

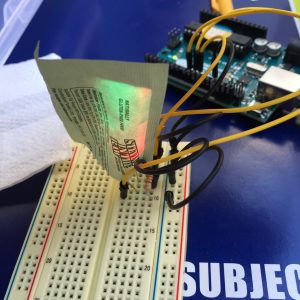

– 2 Arduinos

– 4 Micro-servos

– 3 normal servos

– 3D printed plastic

– lots! of jumper wires

– machine screws / nuts

– beer bottle

– 3 soda cans

– pine cone

– chopsticks

– 5 pots

– laser-cut control board, pinecone eyes, lazy susan parts

– construction paper

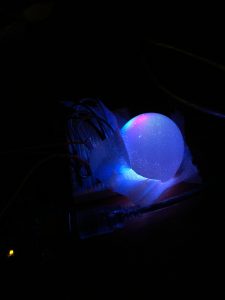

– foam ball

– clay

– DC motor

– metal wire

– metal bolts/nuts from Dan’s bed

– wire brush

– metal marbles

– chipotle tin

– cardboard scrapey surface w/ packaging material

– diode

– resistors

– breadboard

– 3 battery packs

– rubber bands

Code:

#include <Servo.h>

Servo myservoR;

Servo myservoRp;

Servo myservoL;

Servo myservoLp;

Servo servoLeah;

Servo servoAndrew;

Servo servoJake;

int deltaPot = 0;

int leahPot = 1;

int leahBeat = 0;

int andrewPot = 2;

int andrewBeat = 0;

int danielPot = 3;

int danielBeat = 0;

int jakePot = 4;

int jakeBeat = 0;

int pos = 0; // variable to store servo position

void setup()

{

Serial.begin(9600); // setup serial

myservoR.attach(4); //Rightmost arm from point of view of the crab

myservoRp.attach(5); //Right-sub-prime (right arm of the left crab)

myservoL.attach(6); //Leftmost arm from point of view of the crab

myservoLp.attach(7);// "Left-sub-prime" (left arm of the right crab)

servoLeah.attach(8);

servoAndrew.attach(9);

servoJake.attach(10);

}

void loop() {

int delta = potCipher(analogRead(deltaPot))*2; //speed of the hammering: step added to pos each tick (0, 2, 4, 6, or 8; at 0 the arms hold still)

Serial.print("delta: ");

Serial.println(delta);

servoAndrew.write(80); //ARMS UP!!!

servoJake.write(80);

servoLeah.write(80);

myservoR.write(80);

myservoL.write(100);

myservoLp.write(100);

myservoRp.write(80);

delay(1000);

//PLAY!

andrewBeat = potCipher(analogRead(andrewPot));

Serial.print("andrewBeat: ");

Serial.println(andrewBeat);

danielBeat = potCipher(analogRead(danielPot)); // read and printed below, but not used to drive anything in this sketch

Serial.print("danielBeat: ");

Serial.println(danielBeat);

jakeBeat = potCipher(analogRead(jakePot));

Serial.print("jakeBeat: ");

Serial.println(jakeBeat);

leahBeat = potCipher(analogRead(leahPot));

Serial.print("leahBeat: ");

Serial.println(leahBeat);

for (int i=0; i <= 400; i++){

servoAndrew.write(getArmLoc(pos, andrewBeat));

servoLeah.write(getArmLoc(pos, leahBeat));

servoJake.write(getArmLoc(pos, jakeBeat));

myservoR.write(abs(abs(80-pos)-80)); //This series SHOULD do 16th-notes, approximately... but it sounds a bit off, so my math might be wrong

myservoL.write(abs(abs(80-(abs(pos-60)))+100));

myservoLp.write(abs(abs(80-(abs(pos-80)))+100));

myservoRp.write(abs(abs(40-pos)-80));

pos += delta;

if (pos >= 160) pos=0;

delay(35);

}

delay(0);

}

int getArmLoc(int pos, int beatType) {

if (beatType == 1) {

return abs(abs(80-pos)-80);

}

else if (beatType == 2) {

return abs(abs(40-pos)-80);

}

else if (beatType == 3) {

return abs(abs(80-(abs(pos-60)))+100);

}

else if (beatType == 4) {

return abs(abs(80-(abs(pos-80)))+100);

}

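// Fallback for beat type 0 or any other unhandled value, so the function always returns something. 80 is an assumed rest angle, matching the "ARMS UP" position in loop().

return 80;

}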

// returns a potSection value based on the position of the pot

int potCipher(int potVal) {

int potSection;

if (potVal >= 0 && potVal <= 205) {

potSection = 0;

}

else if (potVal >= 206 && potVal <= 410) {

potSection = 1;

}

else if (potVal >= 411 && potVal <= 615) {

potSection = 2;

}

else if (potVal >= 616 && potVal <= 820) {

potSection = 3;

}

else {

potSection = 4;

}

return potSection;

}
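
A note on the arm math: each of the nested `abs` expressions in `loop()` is a triangle wave over `pos`, and the four tap-bot arms reach their upper extreme at `pos` = 80, 40, 60, and 80, so the turnarounds are not evenly spaced through the cycle. That may be part of why the 16th-notes sound a bit off. Below is an untested sketch of the same idea with an explicit phase offset per arm. The names `triangleWave` and `armAngle` are made up for this example, and the quarter-cycle spacing is an assumption, not something we tried on the hardware.

```cpp
// Triangle wave: 0 at the start of the cycle, `amplitude` at the midpoint,
// back to 0 at the end. `phase` shifts where in the cycle an arm starts.
int triangleWave(int pos, int phase, int period, int amplitude) {
  int p = ((pos + phase) % period + period) % period;  // wrap into [0, period)
  int half = period / 2;
  int rising = (p <= half) ? p : period - p;           // 0 .. half .. 0
  return (int)((long)rising * amplitude / half);
}

// Hypothetical helper: angle for arm 0..3, with the arms spaced a quarter
// cycle apart so their turnarounds land evenly through the cycle.
int armAngle(int pos, int arm) {
  const int kPeriod = 160;    // pos wraps at 160 in loop()
  const int kAmplitude = 80;  // 80-degree sweep, as in the original expressions
  return triangleWave(pos, arm * (kPeriod / 4), kPeriod, kAmplitude);
}
```

For `pos` between 0 and 160, `triangleWave(pos, 0, 160, 80)` gives the same values as `abs(abs(80-pos)-80)`, and `triangleWave(pos, 40, 160, 80)` gives the same values as `abs(abs(40-pos)-80)`. The mirrored left arms would still need the result mapped into their 100 to 180 range.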