Members:

Yifei Liu, Reema Naqvi, Olivia Ting

Components:

1 Walker

Arduino

LEDs

FSRs (force-sensitive resistors)

Piezo speaker

DC motor

A wireless module for reading the sensors (technology not yet chosen)

Idea:

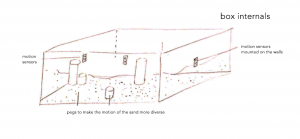

For our final project, we decided to do something different from our midterm presentation while staying in the same space, i.e., building something to benefit the elderly. We will build a system around a walker that uses light, sound, and vibration signals to encourage correct and safe use of the walker.

Many elderly people use a walker at some point, whether temporarily after a fall or surgery or permanently when needed. But using a walker correctly is neither easy nor instinctive, and it has a learning curve of its own. Where to hold the handles, how fast to walk, and how close to keep the walker to one's body are all critical to avoiding falls and injuries. Such a system would help people learn to use a walker for the first time, and also help them not forget the fundamentals later on.

We will place pressure sensors on the handles as well as on a light, mitt-like glove. These sensors will detect whether the person gets up by putting their weight on the walker right away or by pushing off the chair with their hands. If they grasp the walker and use it to pull themselves up, they can slip and fall. So the DC motor (vibration) and the piezo speaker will alert the user if they are getting up incorrectly. Similarly, LEDs on the handles will indicate the correct place to hold them.
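The sit-to-stand check described above could be prototyped as a small decision function before moving it onto the Arduino. The following is a minimal C++ sketch under several assumptions that are not part of the proposal: one FSR in the palm of the glove and one on a walker handle, readings on the Arduino's 10-bit `analogRead()` scale (0 to 1023), and a hypothetical, uncalibrated threshold `kPressThreshold`. The names `Sample`, `Rise`, and `classifyRise` are illustrative only. The logic treats palm pressure with an unloaded handle as pushing off the chair, and any load on the handle before that point as pulling up on the walker.

```cpp
#include <vector>

// Hypothetical press threshold on a 10-bit analogRead() scale (0-1023).
// This is an assumption; it would need calibration with the real FSRs.
constexpr int kPressThreshold = 300;

// One reading from each (assumed) sensor.
struct Sample {
  int gloveFsr;   // FSR in the palm of the mitt-like glove
  int handleFsr;  // FSR on the walker handle
};

enum class Rise { Unknown, Correct, Incorrect };

// Scans readings in time order, starting while the user is seated,
// and returns the first decisive pattern it finds.
Rise classifyRise(const std::vector<Sample>& samples) {
  for (const Sample& s : samples) {
    const bool handleLoaded = s.handleFsr >= kPressThreshold;
    const bool palmPressed = s.gloveFsr >= kPressThreshold;
    if (handleLoaded) {
      // Weight on the walker before any push-off from the chair:
      // treated as pulling up on the walker, so the user should be alerted.
      return Rise::Incorrect;
    }
    if (palmPressed) {
      // Palm pressed while the handle carries no load: the hands are
      // pushing against the chair, which is the safer way to stand.
      return Rise::Correct;
    }
  }
  return Rise::Unknown;  // no decisive reading yet
}
```

On the Arduino, the samples would presumably come from `analogRead()` calls in `loop()`, and an `Incorrect` result could switch on the motor with `digitalWrite()` and sound the piezo with `tone()`. Pin assignments, the threshold, and how to tell that the user has started to rise would all have to be worked out on the actual hardware.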

We are still choosing a wireless technology so that no wires stick out of the walker. Loose wires would be aesthetically unappealing, and they could be dangerous if they got tangled in the wheels or around the user.